Index-based reservoir-computing memory

In the “location-addressable” or “parameter-addressable” scenario, the stored memory states within the neural network are activated by a specific location address or an index parameter. The stimulus that triggers the system to switch states can be entirely unrelated to the content of the activated state, and the pairing between memory states and stimuli can be defined arbitrarily. For instance, the stimulus can be an environmental condition such as the temperature, while the corresponding neural network state represents a specific behavioral pattern of an animal. An itinerancy among different states is thus possible, given a fluctuating environmental factor. (The “parameter-addressable” scenario differs from the “content-addressable” scenario, which requires some correlated stimulus to activate a memory state.)

Storage of complex dynamical attractors

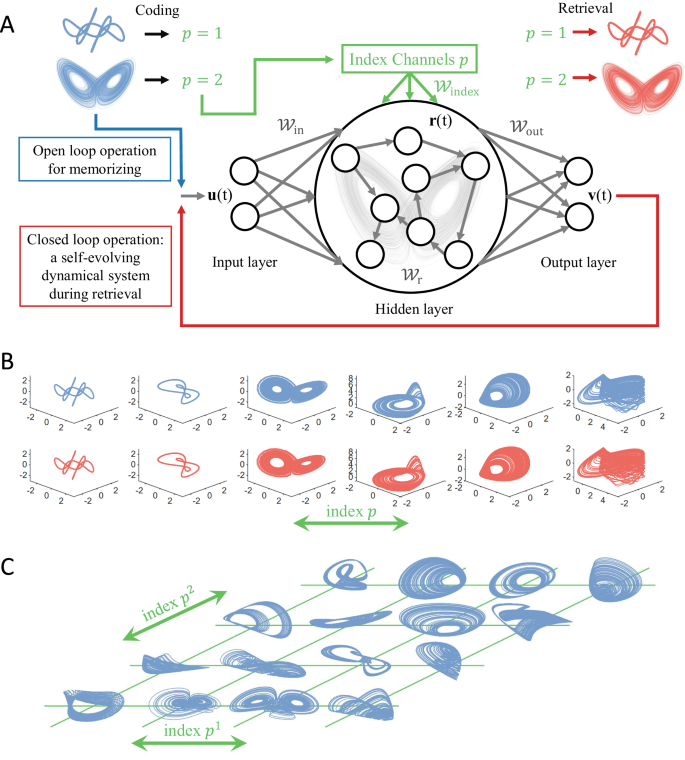

The architecture of our index-based reservoir computing memory consists of a standard reservoir computer (i.e., echo state network; refs. 11, 12, 13) but with one feature specifically designed for location-addressable memory: an index channel, as shown in Fig. 1(A). During training, the time series from a number of dynamical attractors to be memorized are presented as the input signal u(t) to the reservoir machine, each associated with a specific value of the index p so that the attractors can be recalled after training using the same index value. This index value p modulates the dynamics of the RC network through an index input matrix \(W_{\rm index}\) that projects p to the entire hidden layer with weights defined by the matrix entries. The training process is an open-loop operation with input u(t) and the corresponding index value p through the index channel. To store or memorize a different attractor, its dynamical trajectory becomes the input, together with the index value for this attractor. After training, the reservoir output is connected to its input, producing closed-loop operation so that the reservoir machine is now capable of executing self-dynamical evolution starting from a random initial condition, “controlled” by the specific index value. More specifically, to retrieve a desired attractor, we input its index value through the index channel, and the reservoir machine generates a dynamical trajectory faithfully representing the attractor, as schematically illustrated in Fig. 1(A) with two examples: one periodic and one chaotic attractor. The mathematical details of the training and retrieval processes are given in Supplementary Information (SI).
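The open-loop training and closed-loop retrieval described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation (whose details are in the SI): the reservoir size, spectral radius, ridge parameter, washout length, the toy periodic signals, and all function names (`make_reservoir`, `step`, `train`, `recall`) are hypothetical choices made for this example only.

```python
import numpy as np

def make_reservoir(n, dim_u, dim_p, rho=0.9, seed=0):
    """Random recurrent, input, and index matrices (fixed, never trained)."""
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(n, n)) / np.sqrt(n)
    W *= rho / np.max(np.abs(np.linalg.eigvals(W)))  # rescale spectral radius to rho
    W_in = rng.uniform(-1, 1, size=(n, dim_u))
    W_index = rng.uniform(-1, 1, size=(n, dim_p))    # index channel
    return W, W_in, W_index

def step(r, u, p, W, W_in, W_index):
    """One reservoir update; the index p acts like a per-neuron bias."""
    return np.tanh(W @ r + W_in @ u + W_index @ p)

def train(labelled_series, W, W_in, W_index, washout=100, ridge=1e-4):
    """Open loop: drive with each series and its index, then ridge-regress
    the readout on one-step-ahead targets pooled over all attractors."""
    states, targets = [], []
    for p, u in labelled_series:
        r = np.zeros(W.shape[0])
        for t in range(len(u) - 1):
            r = step(r, u[t], p, W, W_in, W_index)
            if t >= washout:
                states.append(r)
                targets.append(u[t + 1])
    R, Y = np.array(states), np.array(targets)
    return np.linalg.solve(R.T @ R + ridge * np.eye(R.shape[1]), R.T @ Y).T

def recall(p, n_steps, W, W_in, W_index, W_out, seed=1):
    """Closed loop: feed the output back as input, starting from a random state."""
    r = np.random.default_rng(seed).uniform(-1, 1, size=W.shape[0])
    out = np.empty((n_steps, W_out.shape[0]))
    for t in range(n_steps):
        out[t] = W_out @ r
        r = step(r, out[t], p, W, W_in, W_index)
    return out

# Toy data (assumption): two periodic orbits labelled p = 1 and p = 2.
t = np.arange(600) * 0.1
circle = np.stack([np.sin(t), np.cos(t)], axis=1)
figure8 = np.stack([np.sin(t), np.sin(2 * t)], axis=1)
labelled = [(np.array([1.0]), circle), (np.array([2.0]), figure8)]

W, W_in, W_index = make_reservoir(n=100, dim_u=2, dim_p=1)
W_out = train(labelled, W, W_in, W_index)
retrieved = recall(np.array([2.0]), 200, W, W_in, W_index, W_out)
```

Only the readout `W_out` is fitted; the index enters solely through `W_index @ p`. Whether such a small toy network retrieves the orbits faithfully depends on the hyperparameters, so the sketch makes no claim about recall quality.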

(A) A schematic illustration of index-based reservoir-computing memory. (B) Retrieval of six memorized chaotic and periodic attractors using a scalar index \(p_i\) (one integer value for each memorized dynamical attractor), where the blue and red trajectories in the top and bottom rows, respectively, represent the original and retrieved attractors. Visually, there is little difference between the original and memorized/retrieved attractors. (C) Memorizing 16 chaotic attractors with two indices. The successfully retrieved attractors are presented in SI. All the memorized attractors are dynamically stable states in the closed-loop reservoir dynamical system.

To demonstrate the workings of our reservoir computing memory, we generate a number of distinct attractors from three-dimensional nonlinear dynamical systems. We first conduct an experiment of memorizing and retrieving six attractors: two periodic and four chaotic attractors, as represented by the blue trajectories in the top row of Fig. 1(B) (the ground truth). The index values for these six attractors are simply chosen to be \(p_i = i\) for i = 1, …, 6. During training, the time series of each attractor is injected into the input layer, and the reservoir network receives the specific index value labeling this attractor, so that the reservoir-computing memory learns the association of the index value with the attractor to be memorized. To retrieve a particular attractor, we set the index value p to the one that labeled this attractor during training and allow the reservoir machine to execute a closed-loop operation to generate the desired attractor. For the six distinct dynamical attractors stored, the respective retrieved attractors are shown in the second row of Fig. 1(B). The recalled attractors closely resemble the original attractors, and for any specific value of the index, the reservoir-computing memory is capable of generating the dynamical trajectory for an arbitrarily long period of time. The fidelity of the recalled states in the long run can be assessed through the maximum Lyapunov exponent and the correlation dimension. In particular, we train an ensemble of 100 different memory RCs, recall each of the memory states, and generate outputs continuously for 200,000 steps. The two measures are calculated during this generation process and compared with the ground truth. For instance, for the Lorenz attractor with the true correlation dimension of 2.05 ± 0.01 and largest Lyapunov exponent of about 0.906, 96% of the retrieved attractors have correlation dimension values within 2.05 ± 0.02 and 91% have exponent values within 0.906 ± 0.015, indicating that the memory RC has learned the long-term “climate” of the attractors and is able to reproduce them (see SI for more results).

For a small number of dynamical patterns (attractors), a scalar index channel suffices for accurate retrieval, as illustrated in Fig. 1(B). When the number of attractors to be memorized becomes large, it is useful to increase the dimension of the index channel. To illustrate this, we generate 16 distinct chaotic attractors from three-dimensional dynamical systems whose velocity fields consist of different combinations of power-series terms (ref. 53), as shown in Fig. 1(C). For each attractor, we use a two-dimensional index vector \(p_i = (p_i^1, p_i^2)\) to label it, where \(p_i^1, p_i^2 \in \{1, 2, 3, 4\}\). The attractors can be successfully memorized and faithfully retrieved by our index-based reservoir computer, with detailed results presented in SI.

Given a number of dynamical patterns to be memorized, there can be many different ways of assigning the indices. In addition to using one and two indices to distinguish the patterns, as shown in Fig. 1(B) and (C), we studied two other index-assignment schemes: binary and one-hot coding. For the binary assignment scheme, we store K dynamical patterns using \(\lceil \log_2 K \rceil\) channels. The index value in each channel takes one of two possible values. For the one-hot assignment scheme, the number of index channels is the same as the number K of attractors to be memorized. The index value in each channel is again one of two possible values, for instance, either 0 or 1. Each attractor is associated with one channel: only this channel takes the value one for the attractor, while the values in all other channels are zero. Equivalently, for each channel, the index value is one if and only if the attractor being trained or recalled is the state with which the channel is associated. Arranging all the index values as a matrix, one-hot assignment leads to an identity matrix. For a given reservoir hidden-layer network, different ways of assigning indices result in different index-input schemes to the reservoir machine and can thus affect the memory performance and capacity. The effects are negligible if the number of dynamical attractors to be memorized is small, e.g., a dozen or fewer. However, the effects can be pronounced when the number K of patterns becomes larger. One way to determine the optimal assignment rule is to examine, under a given rule, how the memory capacity depends on or scales with the size of the reservoir neural network in the hidden layer, which we discuss later.
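As a concrete illustration of the assignment schemes discussed above, the following sketch builds the index vectors for one-hot, binary, and two-dimensional grid coding. The function names are hypothetical, and the use of 0/1 as the two binary values is an assumption (the text only requires two possible values per channel).

```python
import numpy as np
from itertools import product

def one_hot_codes(K):
    """K channels; row i is the index vector of attractor i (identity matrix)."""
    return np.eye(K)

def binary_codes(K):
    """ceil(log2 K) channels; row i holds the bits of i (values 0/1 assumed)."""
    n_channels = max(1, (K - 1).bit_length())  # equals ceil(log2 K) for K >= 2
    return np.array([[(i >> b) & 1 for b in range(n_channels)]
                     for i in range(K)], dtype=float)

def grid_codes(side):
    """Two-dimensional index vectors with entries in {1, ..., side}."""
    return np.array(list(product(range(1, side + 1), repeat=2)), dtype=float)

codes_one_hot = one_hot_codes(16)   # shape (16, 16)
codes_binary = binary_codes(16)     # shape (16, 4)
codes_grid = grid_codes(4)          # shape (16, 2), as for the 16 attractors of Fig. 1(C)
```

Each row is the vector p fed to the index channel for one attractor, so the number of columns equals the number of index channels the scheme requires.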

Transition matrices among stored attractors

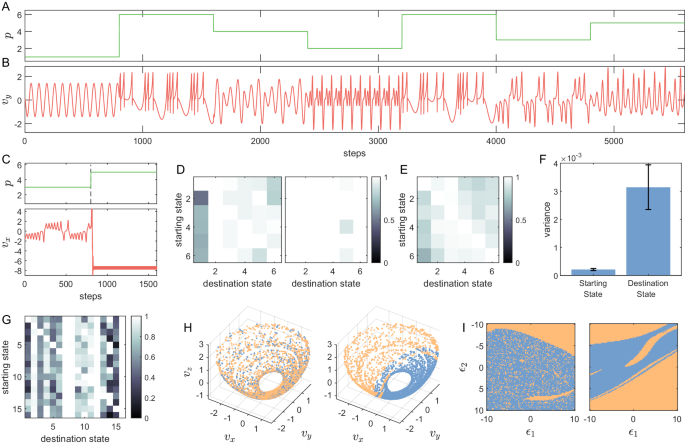

We study the dynamics of memory toggling among the stored attractors. As each memorized state \(s_i\) is trained to be associated with a unique index value \(p_i\), the reservoir network’s dynamical state can be switched among different attractors by simply changing the input index value. A few examples are shown in Fig. 2(A) and (B), where p is switched among various values several times. The dynamical state activated in the reservoir network switches among the learned attractors accordingly. For instance, at step 800, for a switch from p = 1 to p = 6, the reservoir state switches from a periodic Lissajous state to a chaotic Hindmarsh-Rose (HR) neuron state. However, not all such transitions are successful: a failed example is illustrated in the bottom panel of Fig. 2(C). In this case, after the index switching, the reservoir system falls into an undesired state that does not belong to any of the trained states. The probability of failed switching is small and asymmetric between the two states before and after the switching. A weighted directed network can be defined among all the memorized states, where the weights are the success probabilities, which can be represented by a transition matrix. Making index switches at many random time steps and counting the successful switches versus the failed ones, we numerically obtain the transition matrix, as exemplified in Fig. 2(D) for two different randomly generated indexed RCs. Figure 2(E) shows the average transition matrix of an ensemble of 25 indexed RCs. Figure 2(F) shows that the variance of the success rate across different columns (i.e., different destination states) is much larger than the variance across different rows (i.e., different starting states), implying that the success rate depends significantly more on the destination attractor than on the starting attractor.
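The counting procedure for the transition matrix can be sketched as follows. The trial format, a list of `(start, destination, success)` tuples, and the function name are assumptions for illustration; deciding whether a given switch succeeded is outside the scope of this sketch.

```python
import numpy as np

def transition_matrix(trials, K):
    """Estimate eta[i, j], the fraction of successful switches from attractor i
    to attractor j. Entries with no recorded trials are returned as NaN."""
    successes = np.zeros((K, K))
    totals = np.zeros((K, K))
    for start, dest, ok in trials:
        totals[start, dest] += 1
        successes[start, dest] += bool(ok)
    with np.errstate(invalid="ignore", divide="ignore"):
        return np.where(totals > 0, successes / totals, np.nan)

# Hypothetical switch outcomes, not data from the paper.
trials = [(0, 1, True), (0, 1, False), (1, 0, True), (1, 0, True)]
eta = transition_matrix(trials, K=2)
```

Rows index the starting attractor and columns the destination, matching the row/column convention used for Fig. 2(F).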

(A, B) By changing the index value p as in panel (A), one can toggle among the different states shown in panel (B) that have been memorized as attractors in the indexed memory RC (dimensions \(v_x\) and \(v_z\) are not shown). (C) An instance of a failed switch, where the memory RC evolves to an untrained state after changing p. (D) Two typical transition matrices where six different states [the same as in Fig. 1(B)] have been memorized. (E) The average of transition matrices from 25 memory RCs with different random seeds and the same settings as in panel (D). (F) Variances in the success rate with respect to the starting and destination attractors, revealing a stronger dependence on the latter. The result is from the ensemble of 25 RC networks, each trained with ten different chaotic attractors. (G) A deliberately chosen memory RC with 16 memory states and relatively low success rates among many states, used for visualizing the basin structures shown below. (H) Attracting regions of two different destination states on the manifold of a starting state. The memory RC initially operates on this shell-shaped starting attractor, and the index p is toggled at different time steps, when the memory RC is at different positions on this attractor. The position can determine whether the switching is successful; successful positions are marked blue and failed positions are marked lighter orange. (I) Local two-dimensional slices of the basin structures of the two destination states from panel (H) in the high-dimensional RC hidden space. Again, the blue and lighter orange regions mark the basins of the memorized state and of some untrained states leading to failed switches, respectively. The quantities \(\epsilon_1\) and \(\epsilon_2\) define the scales of perturbations to the RC hidden state in two randomly chosen perpendicular directions. The origin \(\epsilon_1 = \epsilon_2 = 0\) corresponds to RC hidden states taken from random time steps while the memory RC operates on the corresponding destination states.

What is the origin of the asymmetric dependence in the transition matrices, and what is the dynamical mechanism behind the switch failures? Two observations are helpful. The first concerns the dynamical consequence of a change in the index value p. Incorporating the term with p into the reservoir computer is equivalent to adding an adjustable bias term to each neuron in the reservoir hidden layer. Different values of p thus directly result in different bias values on the neurons and different dynamics, so the same indexed RC under different values of p can be treated as different dynamical systems. Second, note that, during a switch, one does not directly intervene in the state input u or the hidden state r of the RC network, but only changes the value of p. Consequently, a change in p as in Fig. 2(A) switches the dynamical equations of the RC network while keeping the RC state \(r_{\rm final}\) and the output \(v_{\rm final}\) at the last time step, which are passed from the previous dynamical system to the new system after switching. This pair of \(r_{\rm final}\) and \(v_{\rm final}\) becomes the initial hidden state and initial input under the new dynamical equations of the RC.

The two observations suggest examining the basin of attraction of the trained state in the memory RC hidden space under the corresponding index value, which typically does not occupy the entire phase space. Figure 2(I) shows the basin structure of two arbitrary states in an indexed RC trained with 16 attractors. The blue regions, leading to the trained states, leave some space for the orange regions, which lead to untrained states and failed switching. If the RC state at the last time step before switching lies outside the blue region, the RC network evolves to an undesired state and a switch failure occurs. We further plot the points from the attractor before switching in blue (successful) and orange (unsuccessful), as shown in Fig. 2(H). They are the projections of the basin structure of the new attractors onto the previous attractor. This picture provides an explanation for the strong dependence of success rates on the destination states. In particular, the success rate is determined by two factors: the relative size of the basin of the new state after switching, and the degree of overlap between the previous attractor and the new basin. While the degree of overlap depends on both the starting and destination states, the relative size of the new state’s basin is determined solely by the destination state, resulting in a strong dependence of the switching success rate on the destination state. [Further illustrations of (i) the basin structure of the memorized states in an indexed RC, (ii) projections of the basin structure of the destination state back to the starting state attractor, and (iii) how switch success is strongly affected by the basin structure of the destination state are provided in SI.]

Control strategies for achieving high memory transition success rates

For a memory system to be reliable, accessible, and practically useful, a high success rate of switching from one memorized attractor to another upon recall is essential. As the switching failure issue is rooted in whether an initial condition lies inside the attracting basin of the destination state, this type of failure can be universal for models that memorize not the (static) training data themselves but the dynamical rules of the target states, and that regenerate the target states by evolving the memorized dynamical rules. Even if one uses a series of networks to memorize the target states separately, the problem of properly initializing each memory network during a switch or a recall persists. A possible solution to this initial-state problem is to optimize the training scheme or the RNN architecture to make the memorized state globally attracting in the entire hidden space, which is an unsolved problem at present. Here we focus on noninvasive control strategies to enhance the recall or transition success rates without altering the training technique or the RC architecture, assuming a memory RC is already trained and fixed. The established connections or weights are not modified, ensuring that the memory attractors already in place are preserved.

The first strategy, named “tactical detour,” uses some successful pathways in the transition matrix to build indirect detours that enhance the overall transition success rates. Instead of switching from an initial attractor to a destination attractor directly, going through a few other intermediate states can result in a higher success rate, as exemplified in Fig. 3(A). This method can be relatively simple to implement in many scenarios, as all that is needed is switching the p value a few more times. However, this strategy has two limitations. First, it is necessary to know some information about the transition matrix to search for an appropriate detour and estimate the success rate. Second, the dynamical mechanism behind failed switching suggests that the success rate depends mostly on the relative size of the attracting basin of the destination state, implying that a state with a small attracting basin is difficult to reach from most other states. Consequently, the success rate achieved with a detour can still fall below 100%. As exemplified in Fig. 3(B), detours can significantly improve the overall success rate within just a few steps, but the success rate can saturate at a level lower than one.
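A detour search over a known transition matrix can be sketched as a small dynamic program that maximizes the product of success rates along a path. This is an illustration under a simplifying assumption that is not stated in the source: the success of each switch along the detour is treated as independent, with probability given by the corresponding matrix entry. The function name and the example matrix are hypothetical.

```python
import numpy as np

def best_detour_rates(eta, max_detours):
    """Best achievable success rate for every (start, destination) pair when
    up to `max_detours` intermediate attractors may be visited.
    eta[i, j] is the direct success rate; the diagonal is assumed to be 1."""
    best = eta.copy()
    for _ in range(max_detours):
        # via[i, j] = max over k of eta[i, k] * best[k, j]
        via = (eta[:, :, None] * best[None, :, :]).max(axis=1)
        best = np.maximum(best, via)
    return best

# Hypothetical transition matrix, not data from the paper.
eta = np.array([[1.0, 0.9, 0.3],
                [0.9, 1.0, 0.8],
                [0.9, 0.9, 1.0]])
direct = best_detour_rates(eta, max_detours=0)
one_detour = best_detour_rates(eta, max_detours=1)
```

In this toy matrix, switching from state 0 to state 2 directly succeeds with rate 0.3, while going through state 1 gives 0.9 × 0.8 = 0.72. Allowing more detours cannot push the rate above the best single entry in the destination column, which is one way to see the saturation described above.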

(A) Achieving higher switching success rates using detours, where switching from attractor 2 to attractor 6 via a detour through attractor 4 significantly increases the success rate. The right panel illustrates the improved transition matrix with detours as compared to the original one on the left. (B) Average success rate with different maximum detour lengths. Only a minimal detour is typically needed: a single detour step reduces the failure probability by more than two-thirds. The results are obtained from the same ensemble of memory RCs as in Fig. 2(F). (C) An instance of feedback control to correct a failed switch, following the scheme in panel (D). The switch at the 400th time step leads to an untrained static state. Since the classifier RC cannot recognize the memory RC output as the desired state, a short random signal is applied to perturb the memory RC, which adjusts the state of the memory RC to achieve a successful retrieval. (D) Illustration of controlled memory retrieval with a classifier RC and random signals, where the classifier RC is used to identify the output from the memory RC. The outcome of this identification decides whether to apply a random perturbation or to use the memory RC output as the final result. (E) The rates of successfully corrected failed switches by the control mechanism illustrated in (C) and (D). The denominator of this correction success rate is the number of failed switches, not all switches. Each perturbation consists of ten steps of standard Gaussian noise. Just one “perturb and classify” cycle can correct about 80% of the failed switches. The results of this approach with different parameter choices are shown in SI. (F) Performance of the cue-based method for correcting failed switches with respect to cue length. Within 25 steps of the cue, the correction success rate reaches 99%. Panels (E) and (F) are generated from the same ensemble of 25 memory RCs, each trained to memorize the six attractors as in Fig. 1.

Our second strategy is of the feedback-control type, with a classifier reservoir computer and random signals that perturb the memory reservoir computer until the latter reaches the desired memory state, as illustrated and explained in Fig. 3(C, D). This trial-and-error approach is conceptually similar to the random memory search model in psychology (ref. 54). A working example is shown in Fig. 3(C), where the input index p first activates certain outputs from the memory RC, which are fed to a classifier reservoir computer trained to distinguish among the target states and between the target states and non-target states. As detailed in SI, a simple training of this classifier RC can result in a remarkably high accuracy. The output of the classifier RC is compared with the index value. If they agree, the output signal represents the correct attractor; otherwise, a random signal is injected into the memory RC to activate a different outcome. The feedback loop continues to operate until the correct attractor is reached. This control strategy can lead to a nearly perfect switch success rate, at the cost of a possibly long switching time. Let \(T_n\) be the duration of the random perturbation, \(T_c\) be the time for making a decision about the output of the classifier, and \(\eta_{i,j}\) be the switching success rate from a starting attractor \(s_i\) to a destination attractor \(s_j\). The estimated time for such a feedback control system to reach the desired state is \(T_{{\rm attain},i,j} = (T_n + T_c)/\eta_{i,j}\), which defines an asymmetric distance between each pair of memorized attractors.
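The estimated reaching time has the form of a per-cycle cost divided by a per-cycle success probability. One way to read it, which is an interpretation rather than something stated in the source, is that the number of “perturb and classify” cycles follows a geometric distribution with mean 1/η. The sketch below evaluates the formula and checks this reading with a small Monte Carlo simulation; the function name and the numerical values are hypothetical.

```python
import numpy as np

def reach_time(eta, T_n, T_c):
    """Estimated time to reach the destination: (T_n + T_c) / eta.
    Works elementwise on a full transition matrix as well as on scalars."""
    return (T_n + T_c) / np.asarray(eta, dtype=float)

# Assumed example values: 10 perturbation steps, 5 classification steps,
# and a per-cycle success probability of 0.5.
T_n, T_c, eta_ij = 10, 5, 0.5
estimate = reach_time(eta_ij, T_n, T_c)

# Monte Carlo check under the geometric-distribution reading:
# draw the number of cycles until the first success and average the time.
rng = np.random.default_rng(0)
cycles = rng.geometric(eta_ij, size=200_000)
simulated = (T_n + T_c) * cycles.mean()
```

Because \(\eta_{i,j}\) generally differs from \(\eta_{j,i}\), applying `reach_time` to a whole transition matrix yields an asymmetric matrix, consistent with the asymmetric distance mentioned above.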

To provide a quantitative estimate of the performance of this strategy, we run 90,000 switchings in 25 different memory RCs trained with the six periodic and chaotic states illustrated in Fig. 1(B). The size of the reservoir network is 1000, and other hyperparameter values are listed in SI. Among the 90,000 switchings, 8,110 trials (about 9%) failed, which we use as a pool to test the control strategies. To make the results generic, we test 12 different control settings. We test four different lengths of each noisy perturbation period (1 step, 3 steps, 10 steps, and 30 steps) with Gaussian white noise at three different levels (standard deviations σ = 0.3, σ = 1, and σ = 3). We find that, with an appropriate choice of the control parameters, 10 periods of 10 steps of noise perturbations (100 perturbation steps in total) can eliminate 99% of the failed switchings, as illustrated in Fig. 3(E). The full results of the 12 different control settings are presented and discussed in SI.

Our third control strategy is also motivated by daily experience: recalling an object or an event can be facilitated by some relevant, reminding cue signals. We propose using a cue signal to “warm up” the memory reservoir computer after switching the index p to the value associated with the desired attractor to be recalled. The advantage is that the memory access transition matrix is no longer needed, but the success depends on whether the cue is relevant and strong enough. The cue signals are thus state-dependent: different attractors require different cues, so an additional memory device may be needed to store the warming signals, which contain less information than the attractors to be recalled. This leads to a hierarchical structure of memory retrieval: (a) a scalar or vector index p, (b) a short warming signal, and (c) the complete memorized attractor, similar to the workings of human memory suggested in ref. 55. We also examine whether state-independent cues (uniform cues for all the memory states) can be helpful, and find that in many cases a simple globally uniform cue can make most memorized attractors almost randomly accessible, but there is also a probability that this cue almost entirely blocks a few memory states.

For this third, cue-based control strategy, we again use the pool of 8,110 failed switchings to test the performance. As shown in Fig. 3(F), the success rate increases nearly exponentially with the cue length. Almost 90% of the failed switchings can be eliminated with just 8 steps of cue, and 24 steps of cue can eliminate more than 99% of the failed switchings.

Scaling law for memory capacity

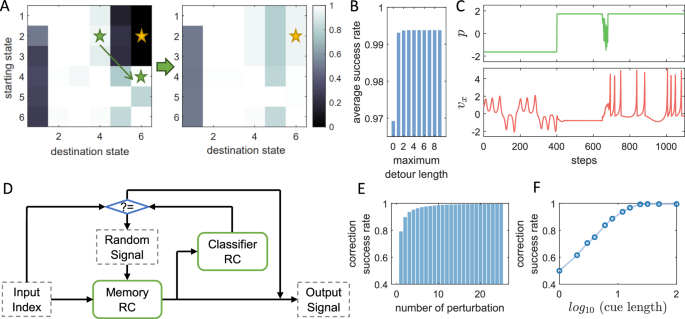

Intuitively, a larger RNN in the hidden layer would enable more dynamical attractors to be memorized. Quantitatively, such a relation can be characterized by a scaling law between the number K of attractors and the network size N. Our numerical method to uncover the scaling law is as follows. For a fixed pair of (K, N) values, we train an ensemble of reservoir networks, each of size N, using the K dynamical attractors. The fraction of the networks with validation errors less than an empirical threshold gives the success rate of memorizing the patterns, which in general increases with N. A critical network size \(N_c\) can be defined as the size at which the success rate is about 50%. (Here, we use 50% as the threshold to minimize the potential error in \(N_c\) due to random fluctuations. In SI, we provide results with 80% as the success rate threshold. The conclusions remain the same under this change.)
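The extraction of the critical size from an ensemble can be sketched as below. The helper names, the error threshold, and the example numbers are hypothetical; the rule of taking the tested size whose success rate is closest to 50% is one simple choice for locating the point where the success rate is about 50%.

```python
import numpy as np

def success_rate(validation_errors, error_threshold):
    """Fraction of ensemble members whose validation error is below the threshold."""
    return float(np.mean(np.asarray(validation_errors) < error_threshold))

def critical_size(sizes, success_rates, target=0.5):
    """Tested network size whose success rate is closest to the target."""
    sizes = np.asarray(sizes)
    rates = np.asarray(success_rates, dtype=float)
    return sizes[np.argmin(np.abs(rates - target))]

# Hypothetical success-rate curve for one fixed K (not data from the paper).
sizes = [100, 200, 300, 400]
rates = [0.0, 0.2, 0.55, 1.0]
N_c = critical_size(sizes, rates)
N_c_80 = critical_size(sizes, rates, target=0.8)
```

Repeating this for each K gives the (K, \(N_c\)) pairs from which a scaling relation can be read off.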

We use three different types of validation performance measures. The first is the prediction horizon, defined as the prediction length before the deviation of the predicted time series reaches 10% of the oscillation amplitude of the real state (half of the difference between the maximum and minimum values in a sufficiently long time series of that target state). The oscillation amplitude and the corresponding deviation rate are calculated for each dimension of the target state, and the shortest prediction horizon is taken among all the dimensions of the target state. The prediction horizon is rescaled by the length of the period of the target system to facilitate comparison. For a chaotic state, we use the average period, defined as the average shortest time between two local maxima in a sufficiently long time series of that target state. The threshold of this prediction horizon for a successful recall is set to two periods of each memory state. The second performance measure is the root-mean-square error (RMSE) with a validation time of four periods (or average periods). The third measure is defined by the time before the predicted trajectory leaves a rectangular region surrounding the real memory state and enters an undesired region of the phase space; the region is set to be 10% larger than the rectangular region defined by the maximum and minimum values of the memory state in each dimension. For these three measures, the thresholds for a successful recall can differ across datasets but are always fixed within the same task (the same scaling curve). The thresholds for the prediction-horizon-based and region-based measures are several periods of oscillation (or average periods for chaotic states) of the target state.
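The first measure can be sketched as follows. This is an illustrative reading of the definition, with two simplifications that are assumptions of the sketch: the oscillation amplitude is computed from the supplied reference series rather than from a separate long time series, and the period is passed in by the caller. The function name is hypothetical.

```python
import numpy as np

def prediction_horizon(pred, truth, period, frac=0.1):
    """Number of steps before the prediction deviates from the truth by `frac`
    of the oscillation amplitude in any dimension, in units of `period`.
    pred and truth have shape (T, D)."""
    amplitude = 0.5 * (truth.max(axis=0) - truth.min(axis=0))  # per dimension
    exceeded = (np.abs(pred - truth) >= frac * amplitude).any(axis=1)
    first = int(np.argmax(exceeded)) if exceeded.any() else len(truth)
    return first / period

# Toy check: a two-dimensional periodic signal with a period of 100 steps,
# and a "prediction" that departs from it at step 250 in the second dimension.
steps = np.arange(500)
truth = np.stack([np.sin(2 * np.pi * steps / 100),
                  np.cos(2 * np.pi * steps / 100)], axis=1)
pred = truth.copy()
pred[250:, 1] += 1.0
horizon = prediction_horizon(pred, truth, period=100)
```

Taking the first violation over all dimensions at once is equivalent to taking the shortest per-dimension horizon. With the two-period threshold quoted above, this toy prediction would count as a successful recall.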

Figure 4(A) shows the resulting scaling laws for the various tasks, with different datasets of states, different training approaches, and different coding schemes for the indexed RCs. The first dataset (dataset #1) consists of many thousands of different periodic trajectories. With index-based RCs, we use one-hot coding, binary coding, and two-dimensional coding with this dataset, as shown in Fig. 4(A). All of them lead to similar algebraic scaling laws \(N_c \propto K^{\gamma}\) that are close to a linear law: γ = 1.08 ± 0.01 for both the one-hot coding and binary coding, and γ = 1.17 ± 0.02 for the 2D coding. In all three cases, \(N_c\) grows slightly faster than a linear law. A zoomed-in picture comparing the three coding schemes is shown in Fig. 4(B). It can be seen that the one-hot coding is more efficient than the other two. Figure 4(B) also demonstrates how the data points in the scaling laws in Fig. 4(A) are obtained. Similar curves of success rate versus N are plotted for each K value and each task, and the data point on each curve that is closest to a 50% success rate is taken to be \(N_c\).
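An exponent γ of an algebraic law of this form can be estimated by a straight-line fit in log-log coordinates. The sketch below uses synthetic, noise-free data generated with a chosen exponent purely to illustrate the fitting step; the numbers are not taken from the paper, and the source does not specify the authors' fitting procedure or how the quoted uncertainties were obtained.

```python
import numpy as np

def fit_power_law(K, N_c):
    """Least-squares fit of log N_c = gamma * log K + log c.
    Returns (gamma, c) for the model N_c = c * K**gamma."""
    gamma, log_c = np.polyfit(np.log(K), np.log(N_c), deg=1)
    return gamma, np.exp(log_c)

# Synthetic example (assumption): N_c = 10 * K**1.08 exactly.
K = np.array([4, 8, 16, 32, 64], dtype=float)
N_c = 10.0 * K ** 1.08
gamma, prefactor = fit_power_law(K, N_c)
```

With real ensemble data the points scatter around the line, and an uncertainty on γ would require an additional step that is not shown here.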

(A) Algebraic scaling relation between the number K of dynamical patterns to be memorized and the required size \(N_c\) of the RC network for various tasks and different memory coding schemes. Details of each curve/task are discussed in the main text. The “separate Wout” and “bifurcation” tasks are shown with the prediction-horizon-based measure, while all the other curves are plotted with the region-based measure. (B) Examples of how the success rate increases with N and comparisons among different coding schemes, where the success rate of accurate memory recall (using the region-based performance measure) of three coding schemes on dataset #1 with K = 64 is shown. All three curves have a sigmoid-like shape between zero and one, where the data points closest to the 50% success rate threshold correspond to \(N_c\). All data points in the scaling plots are generated from such a curve (success rate versus N) to extract \(N_c\). The one-hot coding is the most efficient of the three coding schemes we tested for this task. (C) Comparisons among different recall performance measures. The three different measures on dataset #1 with one-hot coding have indistinguishable scaling behavior, with only small differences in a constant factor.

Why is the one-hot coding more efficient than the other coding schemes we test? In our index-based approach to memorizing dynamical attractors, an index value \(p_i\) is assigned to each attractor \(s_i\) and acts as a constant input through the index channel to the reservoir neural network, which effectively modulates the biases of the network. Since the index input \(p_i\) is multiplied by a random matrix before entering the network, each neuron receives a different random level of bias modulation, affecting its dynamical behavior through the nonlinear activation function. In particular, the hyperbolic tangent activation function has several distinct regions: an approximately linear region when the input is small, and two nearly constant saturated regions for large inputs. With one specific \(p_i\) value injected into the index channel, different neurons in the hidden layer are distributed across different functional regions (detailed demonstration in SI). When the index value is switched to another one, the functional regions of the neurons are redistributed, leading to a different dynamical behavior of the reservoir computer. Among the three coding schemes, the one-hot coding scheme uses a single column of the random index input matrix for each attractor, so the bias distributions for different attractors are not related to one another, yielding a minimal correlation among the distributions of the functional regions of the neurons for different values of \(p_i\). For the other two coding schemes, there is a certain degree of overlap among the input matrix entries used for different attractors, leading to additional correlations in the bias distributions and thereby reducing the memory capacity.
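The difference in bias correlations between coding schemes can be illustrated numerically. The sketch below compares the bias vectors \(W_{\rm index}\,p_i\) produced by one-hot and binary codes for K = 8 attractors. It only looks at correlations among bias vectors, not at memory capacity, and several choices are assumptions of the sketch: the uniform distribution of the matrix entries, the network size, and the use of ±1 as the two binary values (chosen so that no code is the all-zero vector).

```python
import numpy as np

def mean_abs_correlation(codes, W_index):
    """Mean absolute Pearson correlation between the bias vectors
    W_index @ p_i of all distinct pairs of attractors."""
    biases = codes @ W_index.T                 # shape (K, n): one bias vector per attractor
    corr = np.corrcoef(biases)                 # shape (K, K)
    off_diagonal = corr[~np.eye(len(codes), dtype=bool)]
    return float(np.mean(np.abs(off_diagonal)))

rng = np.random.default_rng(0)
n, K = 2000, 8

one_hot = np.eye(K)                                           # 8 channels
binary = np.array([[1.0 if (i >> b) & 1 else -1.0 for b in range(3)]
                   for i in range(K)])                        # 3 channels, values +/-1

overlap_one_hot = mean_abs_correlation(one_hot, rng.uniform(-1, 1, size=(n, K)))
overlap_binary = mean_abs_correlation(binary, rng.uniform(-1, 1, size=(n, 3)))
```

With one-hot codes each bias vector is a separate random column, so the pairwise correlations are close to zero; with binary codes the bias vectors are sums and differences of the same three columns and are therefore noticeably correlated.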

To make the stored states more realistic, we apply a dataset processed from the ALOI videos56. This dataset contains short videos of rotating small everyday objects, such as a toy duck or a pineapple. In each video, a single object rotates horizontally through a full 360-degree turn, returning to its initial angle. Repeating the video generates a periodic dynamical system. To reduce the computational load due to the high spatial dimensionality of the video frames, we perform dimension reduction on the original videos through principal component analysis, noting that most pixels are black background and many pixels of the object are highly correlated. We take the first two principal components of each video as the target states. The results are shown in Fig. 4(A) as the “ALOI videos” task. Similar to the previous three tasks, we obtain an algebraic scaling law N_c ∝ K^γ that is slightly faster than a linear law with γ = 1.39 ± 0.01.
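The dimension-reduction step can be sketched as follows. This is an illustrative example only: the synthetic `frames` array stands in for one rotation video (the ALOI data are not loaded here), and the frame count and pixel count are arbitrary assumptions.

```python
import numpy as np

def first_two_pcs(frames):
    """Project flattened video frames (n_frames, n_pixels) onto their first two principal components."""
    centered = frames - frames.mean(axis=0)
    # Rows of vt are the principal directions, ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:2].T

# Synthetic stand-in for one rotation video: 72 frames of 400 pixels driven by the rotation angle.
rng = np.random.default_rng(1)
angles = np.linspace(0.0, 2.0 * np.pi, 72, endpoint=False)
pattern_cos, pattern_sin = rng.normal(size=(2, 400))
frames = np.outer(np.cos(angles), pattern_cos) + np.outer(np.sin(angles), pattern_sin)

target = first_two_pcs(frames)    # shape (72, 2): a closed loop in the reduced space
```

Repeating `target` end to end then gives a periodic two-dimensional time series of the kind used as a target state.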

The “bifurcation” task is an example that shows the potential of our index-based approach, where N_c grows much slower than linearly with K and can even decrease in certain cases. It consists of 100 dynamical states gathered from the same chaotic food chain system but with different parameter values. We sweep an interval in the bifurcation diagram of the system with both periodic states of different periods and chaotic states with different average periods. For index coding, we use a one-dimensional index and assign the states by sorting the system parameter values used to generate these states. The index values are evenly spaced in the interval [−2.5, 2.5]. As shown in Fig. 4(A), the scaling of this “bifurcation” task is much slower than a linear law, suggesting that when the target states are correlated and the index values are assigned in a “meaningful” way, the reservoir computer can utilize the similarities among target states for more efficient learning and storing. Moreover, we find that N_c can even decrease in a certain interval of K, as the total amount of training data increases with larger K, making it possible for the reservoir computer to reach a similar performance with a smaller value of N_c. In other tasks with other training sets, training data from different target states are independent of each other. In summary, training with the index-based approach on this dataset is more resource-efficient than having a series of separate reservoir computers trained with each target state and adding another selection mechanism to switch the reservoir computer while performing switching or itinerancy behaviors.

One of the important features of reservoir computing is that the input layer and the recurrent hidden layer are generated randomly and, thus, are essentially independent of the target state. One way to utilize this convenient feature is to use the same input and hidden layer for all the target states but with a separate output layer trained for each target state. In such an approach, an additional mechanism of selecting the correct output layer (i.e., W_out) during retrieval is necessary, unlike the other approaches studied, which use the same integrated reservoir computer for all the different target states. Training with separate W_out can lower the computational complexity in some scenarios, as shown by the “separate W_out” task in Fig. 4(A), where N_c increases as a power law with K that is much slower than a linear law. Nonetheless, training with separate W_out still makes the overall model complexity grow faster than linearly, as the number of independent W_out matrices required equals K. For a scenario such as the “bifurcation” task, the vanilla index-based approach is more efficient. As the input layer and hidden layer are shared by all the different target states, the issue of failed switching still exists, so control is required.

We further demonstrate the efficiency of our index-based approach compared to the index-free approach. In the “index-free” task shown in Fig. 4(A), dataset #1 was used. While the index-based approach has an almost linear scaling, the scaling of the index-free approach grows much faster than a power law. For instance, the critical network size for K = 20 is about N_c = 2.4 × 10^4, which is almost as large as the N_c for K = 256 with the index-based memory RC on the same dataset. Moreover, the critical network size N_c for K = 32 is larger than 10^5. The comparison of the two approaches on the same task shows that, while the index-free memory RC has the advantage of being content-addressable, it is computationally costly compared with the index-based approach, because of the severe overlap among different target states for large K values, which requires a large memory RC to distinguish different states.

To test the genericity of the scaling law, we compare the results from the same task (the “one-hot coding” task) with the three different measures, as shown in Fig. 4(C). All three curves have approximately the same scaling behavior, except for a minor difference in a constant factor.
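A statement of this kind (same exponent, different constant factor) corresponds to parallel straight lines on a log-log plot. The sketch below shows how such a comparison could be made with a least-squares fit of N_c ∝ K^γ. The numbers are hypothetical placeholders, not values from Fig. 4, and the function name `fit_power_law` is introduced here for illustration.

```python
import numpy as np

def fit_power_law(K, Nc):
    """Least-squares fit of log Nc = gamma * log K + log c; returns (gamma, c)."""
    gamma, log_c = np.polyfit(np.log(K), np.log(Nc), deg=1)
    return gamma, np.exp(log_c)

K = np.array([8, 16, 32, 64, 128, 256], dtype=float)
# Hypothetical critical sizes for two performance measures: same exponent, different prefactor.
Nc_measure_a = 30.0 * K**1.1
Nc_measure_b = 36.0 * K**1.1

gamma_a, c_a = fit_power_law(K, Nc_measure_a)
gamma_b, c_b = fit_power_law(K, Nc_measure_b)
```

In this synthetic case the two fitted exponents coincide and only the prefactors `c_a` and `c_b` differ, which is the signature described above.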

Index-free reservoir-computing based memory - advantage of multistability

The RC neural network is a high-dimensional system with rich dynamical behaviors49,50,51,52,57,58, making it possible to exploit multistability for index-free memory. In general, in the high-dimensional phase space, various coexisting basins of attraction can be associated with different attractors or dynamical patterns to be memorized. As we will show, this coexistence can be achieved with a training (storing) process similar to that of index-based memory but without an index. The stored attractors can then be recalled in a content-addressable manner with an appropriate cue related to the target attractor. To retrieve a stored attractor, one can drive the networked dynamical system into the basin of attraction of the desired memorized attractor using the cues, mimicking a spontaneous dynamical response characteristic of generalized synchronization between the driving cue and the responding RC50,59,60.

While the possibility of index-free memory RC was pointed out previously50,51,52, a quantitative analysis of the retrieval mechanism was lacking. Such an analysis should include an investigation into how the cue signals affect the success rate, what the basin structure of the reservoir computer is, and a dynamical understanding connecting the two. A difficulty is how to efficiently and accurately determine, from a short segment of RC output time series, which memory state is recalled and whether any target memory has been recalled at all. For this purpose, short-term performance measures such as the RMSE and prediction horizon are not appropriate, as the ground truth of a specific trajectory of the corresponding attractor is not accessible, especially for the chaotic states. Moreover, a measure based on calculating the maximum Lyapunov exponents or the correlation dimensions may not be suitable either, because it requires long time series. In realistic scenarios, sustaining the memory accurately for dozens of periods can be sufficient, without the requirement for long-term accuracy.

Our solution is to train another RC network (classifier RC) to perform the short-term classification task of determining, with high accuracy, which memorized state has been recalled or whether no successful recall has been made at all. We have tested its performance on more than a thousand trials and found deviations from a human labeler in only 6 out of 1,200 trials (details in SI), all of which are intrinsically ambiguous to classify. The classifier RC is also used in the control scheme for index-based memory RC, as shown in Fig. 3(D). The classifier RC provides a tool to understand the retrieval process, e.g., the type of driving signals that can be used to recall a desired memorized attractor. The basic idea is that the cues serve as a kind of reminder of the attractor to be recalled, so a short segment of the time series from the attractor serves the purpose. Other cue signals are also possible, such as those based on partial information of the desired attractor to recall the whole, or noisy signals for warming.

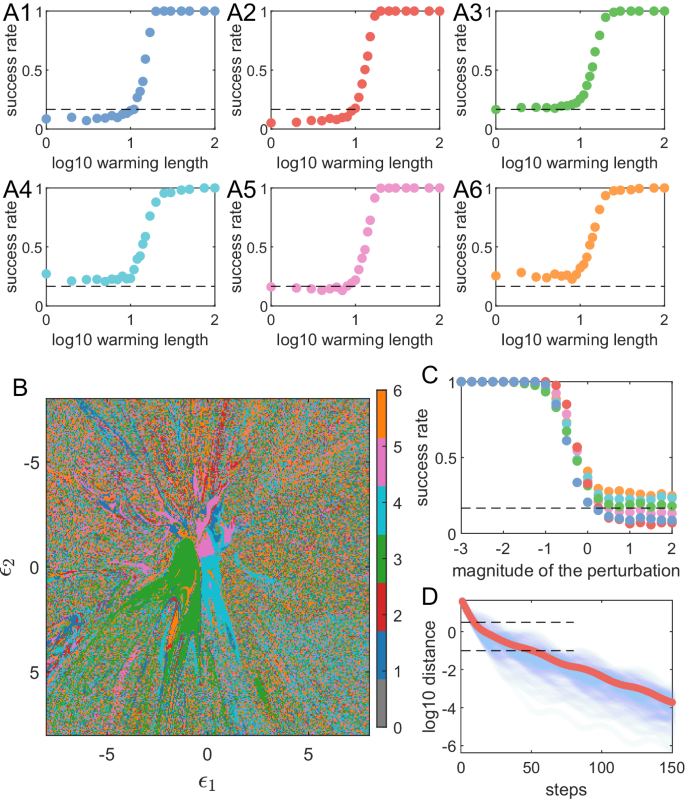

We use the six different attractors in Fig. 1(B) as the target states, all located in a three-dimensional phase space. They do not reside in distinct regions but have significant overlaps. However, the corresponding hidden states of the reservoir neural network, because of its much higher-dimensional phase space, can possibly reside in distinct regions. Using a short-term time series of the target attractor as a cue or warming signal to recall it, we can achieve a near 100% success rate of attractor recall when the length of the warming signal exceeds some critical value, as shown in Fig. 5(A). Before injecting a warming signal, the initial state of the neural network is set to be random, so the success rate should be about 1/6 without the cue. As a cue is applied and its length increases, a relatively abrupt transition to a near 100% success rate occurs for all six stored attractors. The remarkable success rate of retrieval suggests that all six attractors can be trained to successfully coexist in this one high-dimensional dynamical system (i.e., the memory RC), each with its own separate basin of attraction in the phase space of the neural network hidden state r. The finding that various attractors, despite their very different properties (periodic or chaotic, with varying periods or maximal Lyapunov exponents, among others), all share nearly identical thresholds for warming length also suggests that this threshold is more characteristic of the reservoir computer itself than of the target states.

A1–A6 Success rate of memory retrieval of the six attractors in Fig. 1(B) versus the length of the warming cue. In the 3D phase space of the original dynamical systems, these attractors are located in approximately the same region and are overlapping. B A 2D projection of a typical basin structure of the reservoir dynamical network, where different colors represent the initial conditions leading to different memorized attractors. The central regions have relatively large contiguous areas of uniform color, while the basin structure is fragmented in the surrounding regions. C Success rate for the memory reservoir network to keep the desired attractor versus the magnitude of random perturbation. The two plateau regions with ~100% and 1/6 success rates correspond to the two typical basin features shown in panel (B). D Distance between the dynamical state of the reservoir neural network and that of the target attractor versus the cue duration during warming. The cyan curves are obtained from 100 random trials, with the pink curve as the ensemble average. The two horizontal black dashed lines correspond to the perturbation magnitudes of 10^0.5 and 10^−1 in (C), and their intersection points with the pink curve represent the warming lengths of 10 and 50 time steps, respectively, which are consistent with the transition regions in (A), suggesting a connection between the basin structure and the retrieval behavior.

It is possible to arbitrarily pick a “menu” of attractors and train them to coexist in a memory RC as one single dynamical system. A question is: what are the typical structures of the basins of attraction of the memorized attractors? An example of the 2D projection of the basin structure is shown in Fig. 5(B). In a 2D plane, there are open regions of different colors, corresponding to the basins of different attractors, which are essential for the stability of the stored attractors. More specifically, when the basin of attraction of a memorized attractor contains an open set, it is unlikely for a dynamical trajectory from the attractor to be “kicked” out of the basin by small perturbations, ensuring stability. Figure 5(C) shows the probability (success rate) for a dynamical trajectory of the reservoir network to stay in the basin of a specific attractor after being “kicked” by a random perturbation. For a small perturbation, the probability is approximately one, indicating that the trajectory will always stay near the original (correct) attractor, leading essentially to zero retrieval error in the dynamical memory. Only when the perturbation is sufficiently large will the probability decrease to the value of 1/6, signifying random transitions among the attractors and, consequently, failure of the system as a memory. We note that there are quite recent works on basin structures in a high-dimensional Kuramoto model where a large number of attractors coexist61, which bear similar patterns to those observed in our memory RCs. Further investigation is required to study whether these patterns are truly generic across different dynamical systems.

The echo state property of a reservoir computer stipulates that a trajectory from an attractor in its original phase space corresponds to a unique trajectory in the high-dimensional phase space of the RNN in the hidden layer11,62,63,64. That is, the target attractor is embedded in the RC hidden space. Figure 5(D) shows how the RC state approaches this embedded target state during the warming by the cue. This panel shows the distances (cyan curves) between each of the 100 trajectories of the reservoir neural network and the embedded memorized target attractor versus the cue duration during warming. (The details of how this distance is calculated are given in SI.) The ensemble-averaged distance (the pink curve) decreases approximately exponentially with the cue length, indicating that the RC rapidly approaches the memory state’s embedding.
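The exponential approach described above can be illustrated with a toy reservoir. The sketch below is not the distance measure of Fig. 5(D), which is defined in SI. It tracks the distance between two copies of the same reservoir that start from different random hidden states and receive the same cue. The network sizes, the plain tanh update without leakage, the random placeholder cue, and the rescaling of the largest singular value of `w` to 0.9 (a sufficient condition for contraction, chosen here for simplicity) are all assumptions.

```python
import numpy as np

rng = np.random.default_rng(2)
n_neurons, n_inputs, n_steps = 200, 3, 100      # assumed sizes

w = rng.normal(size=(n_neurons, n_neurons))
w *= 0.9 / np.linalg.norm(w, 2)                 # largest singular value set to 0.9
w_in = rng.uniform(-1.0, 1.0, size=(n_neurons, n_inputs))

cue = rng.normal(size=(n_steps, n_inputs))      # placeholder cue; a real cue would come from the attractor

r_a = rng.uniform(-1.0, 1.0, size=n_neurons)    # two different random initial hidden states
r_b = rng.uniform(-1.0, 1.0, size=n_neurons)
distances = []
for u in cue:
    distances.append(np.linalg.norm(r_a - r_b))
    r_a = np.tanh(w @ r_a + w_in @ u)           # both copies are driven by the same cue
    r_b = np.tanh(w @ r_b + w_in @ u)
distances.append(np.linalg.norm(r_a - r_b))
distances = np.array(distances)
```

Because tanh is 1-Lipschitz and `w` shrinks every difference vector by at least a factor 0.9, the distance in this sketch is bounded by an exponentially decaying envelope, so both copies forget their initial states and follow the cue.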

The results in Fig. 5 suggest the following picture. The basin structure in Fig. 5(B) indicates that, when a trajectory approaches the target attractor, it usually starts in a riddled-like region that contains points belonging to the basins of different memorized attractors before landing in the open region containing the target attractor. The result in Fig. 5(C) can be interpreted as follows. The recall success rate when the RC is away from the open regions and still in the riddled-like region is almost purely random (1/K). In an intermediate range of distance where the two types of regions are mixed (as the open regions do not appear to have a uniform radius), the recall success rate increases rapidly before the RC enters the pure open region and the success rate reaches 100%. This is further verified by Fig. 5(D), where the two horizontal black dashed lines indicate the two distances that equal the magnitudes of perturbation in Fig. 5(C) at which the rapid decline of success rate begins and ends. That is, the interval between these two black dashed lines in Fig. 5(D) is the interval where the RC dynamical state traverses the mixed region. The cue lengths at the two ends of the curve in Fig. 5(D) in this interval are 10 and 50 time steps, which agree well with the transition interval in Fig. 5(A). Taken together, during the warming by the cue, the memory RC starts from the riddled-like region, travels through a mixed region, and finally reaches the open regions. This journey is reflected in the changes in recall success rate in Fig. 5(A).

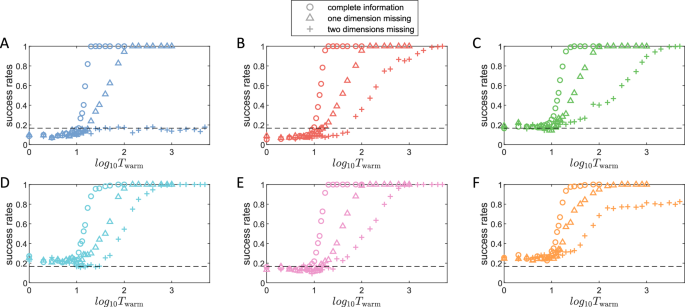

It is worth studying whether partial information can successfully recall memorized states in an index-free memory RC. In particular, for the results in Fig. 5(A1–A6), the cue signals used to retrieve any stored attractor have the full dimension of the original dynamical system that generates the attractor. What if the cues are partial, with some dimensions missing? For example, if the attractor is from a 3D system, then a full cue signal has three components. If one is missing, the actual input cue signal is 2D. However, since the reservoir network still has three input channels, the missing component can be compensated by the corresponding output component of the memory RC as a feedback loop. (This rewiring scheme was suggested previously50.) Figure 6 shows, for the six attractors in Fig. 5(A1–A6), the success rate of retrieval versus the length of the cue or warming signal, for three distinct cases: full 3D cue signals, partial 2D cue signals with one missing dimension, and partial 1D cue signals with two missing dimensions. It can be seen that 100% success rates can still be achieved in most scenarios, although longer signals are required. The thresholds of cue length, where the success rate begins to rise significantly above that of a random recall (around 1/6), are postponed to a similar degree for all the 2D cases among different attractors. These thresholds are also postponed to another similar degree for all the 1D cases. This result further suggests that the threshold of cue length is a feature of the RC structure and properties rather than of the specific target attractor, with the same way of rewiring feedback loops yielding the same thresholds. (A discussion taking into account all the possible combinations of missing dimensions is provided in SI.)
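The rewiring described above amounts to assembling the reservoir input, at every warming step, from the available cue components and from the RC's own output for the missing ones. A minimal sketch follows; the function name `build_input` and the numerical values are hypothetical and only illustrate the bookkeeping.

```python
import numpy as np

def build_input(cue, rc_output, available):
    """Use cue components where available; fill missing ones with the RC's own output."""
    available = np.asarray(available, dtype=bool)
    return np.where(available, cue, rc_output)

cue_t = np.array([0.3, np.nan, -1.2])       # second component of the 3D cue is missing
rc_output_t = np.array([0.1, 0.7, -1.0])    # hypothetical RC output from the previous step
u_t = build_input(cue_t, rc_output_t, available=[True, False, True])
```

With all entries of `available` set to `True` this reduces to the full-cue (open-loop) case, and with all entries `False` it reduces to the closed-loop operation used after warming.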

A–F Success rate of memory retrieval of the six different chaotic attractors arising from 3D dynamical systems, respectively, with (i) full 3D cues, (ii) 2D cues with one dimension missing, and (iii) 1D cues with two dimensions missing. For 2D cues, a longer warming time is required to achieve a 100% success rate. For 1D cues, a 100% success rate can sometimes be achieved with a longer warming time, but it can also occur that a 100% success rate cannot be reached, e.g., (A), (F).

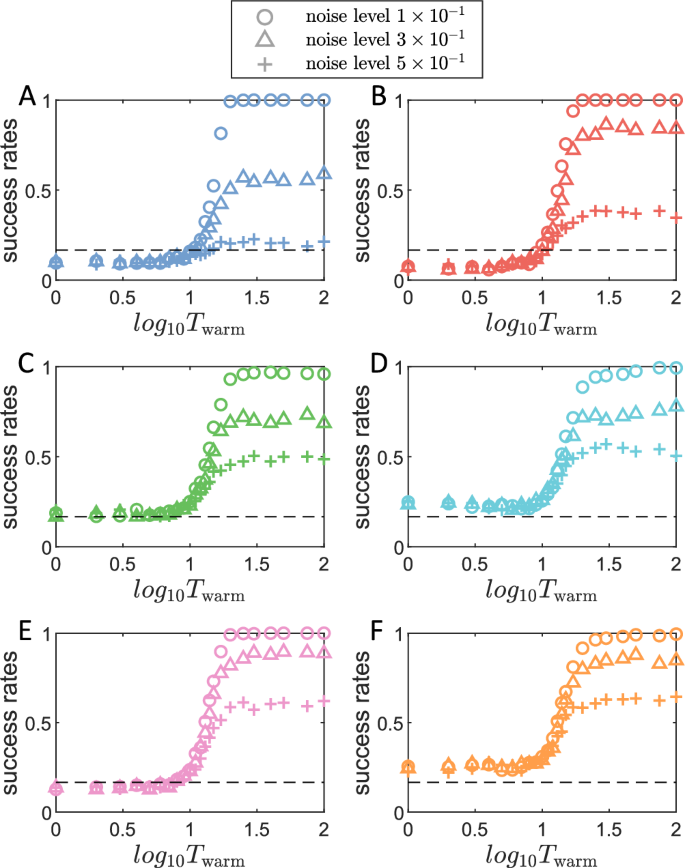

A further question is: what if the warming signals for the index-free reservoir-computing memory contain random errors or are noisy? Figure 7 shows the retrieval success rate versus the cue length for three different levels of Gaussian white noise in the 3D cue signal. For all six chaotic attractors, a 100% success rate can still be achieved for relatively small noise, but the achievable success rate decreases as the noise level increases. Different from the scenarios with partial dimensionality, the threshold of required cue length where the success rate rises significantly above random memory retrieval (1/6) remains the same for different noise levels. We conclude that noisy cues can lower the saturated success rate, while partial information (with rewired feedback loops) can slow down the transition from random retrieval to saturation in success rate and sometimes lower the saturated success rate.

A–F The retrieval success rate of the six distinct chaotic attractors in Fig. 1(B) (from left to right), respectively, versus the cue length.

A A schematic illustration of index-based reservoir-computing memory. B Retrieval of six memorized chaotic and periodic attractors using a scalar index p_i (one integer value for each memorized dynamical attractor), where the blue and pink trajectories in the top and bottom rows, respectively, represent the original and retrieved attractors. Visually, there is little difference between the original and memorized/retrieved attractors. C Memorizing 16 chaotic attractors with two indices. The successfully retrieved attractors are presented in SI. All the memorized attractors are dynamically stable states in the closed-loop reservoir dynamical system.

To demonstrate the workings of our reservoir computing memory, we generate a number of distinct attractors from three-dimensional nonlinear dynamical systems. We first conduct an experiment of memorizing and retrieving six attractors: two periodic and four chaotic attractors, as represented by the blue trajectories in the top row of Fig. 1(B) (the ground truth). The index values for these six attractors are simply chosen to be p_i = i for i = 1, …, 6. During training, the time series of each attractor is injected into the input layer, and the reservoir network receives the specific index value labeling this attractor, so that the reservoir-computing memory learns the association of the index value with the attractor to be memorized. To retrieve a particular attractor, we set the index value p to the one that labeled this attractor during training and allow the reservoir machine to execute a closed-loop operation to generate the desired attractor. For the six distinct dynamical attractors stored, the respective retrieved attractors are shown in the second row of Fig. 1(B). The recalled attractors closely resemble the original attractors, and for any specific value of the index, the reservoir-computing memory is capable of generating the dynamical trajectory for an arbitrarily long period of time. The long-term fidelity of the recalled states can be assessed through the maximum Lyapunov exponent and the correlation dimension. In particular, we train an ensemble of 100 different memory RCs, recall each of the memory states, and generate outputs continuously for 200,000 steps. The two measures are calculated during this generation process and compared with the ground truth. For example, for the Lorenz attractor with the true correlation dimension of 2.05 ± 0.01 and largest Lyapunov exponent of about 0.906, 96% and 91% of the retrieved attractors have their correlation dimension values within 2.05 ± 0.02 and exponent values within 0.906 ± 0.015, respectively, indicating that the memory RC has learned the long-term “climate” of the attractors and is able to reproduce them (see SI for more results).

For a small number of dynamical patterns (attractors), a scalar index channel suffices for accurate retrieval, as illustrated in Fig. 1(B). When the number of attractors to be memorized becomes large, it is useful to increase the dimension of the index channel. To illustrate this, we generate 16 distinct chaotic attractors from three-dimensional dynamical systems whose velocity fields consist of different combinations of power-series terms53, as shown in Fig. 1(C). For each attractor, we use a two-dimensional index vector $p_i = (p_i^1, p_i^2)$ to label it, where $p_i^1, p_i^2 \in \{1, 2, 3, 4\}$. The attractors can be successfully memorized and faithfully retrieved by our index-based reservoir computer, with detailed results presented in SI.

Given a number of dynamical patterns to be memorized, there can be many different ways of assigning the indices. In addition to using one and two indices to distinguish the patterns, as shown in Fig. 1(B) and (C), we studied two other index-assignment schemes: binary and one-hot codes. For the binary assignment scheme, we store K dynamical patterns using $\lceil \log_2 K \rceil$ channels. The index value in each channel is one of two possible values. For the one-hot assignment scheme, the number of index channels is the same as the number K of attractors to be memorized. The index value in each channel is still one of two possible values, for instance, either 0 or 1. In the one-hot assignment, each attractor is associated with one channel, where only this channel takes the value one for the attractor, while the values in all other channels are zero. Equivalently, for each channel, the index value is one if and only if the attractor being trained/recalled is the state with which it is associated. Arranging all the index values as a matrix, the one-hot assignment leads to an identity matrix. For a given reservoir hidden-layer network, different ways of assigning indices result in different index-input schemes to the reservoir machine and can thus affect the memory performance and capacity. The effects are negligible if the number of dynamical attractors to be memorized is small, e.g., a dozen or fewer. However, the effects can be pronounced if the number K of patterns becomes larger. One way to determine the optimal assignment rule is to examine, under a given rule, how the memory capacity depends on or scales with the size of the reservoir neural network in the hidden layer, which we will discuss later.
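The two assignment schemes can be written down explicitly. The following sketch builds the index vectors for K patterns; the function names are introduced here for illustration, and the default values 0 and 1 for the binary code, as well as the ordering of patterns by their binary digits, are assumptions (the text only requires two possible values per channel).

```python
import numpy as np

def one_hot_codes(K):
    """K channels; row i is the index vector of attractor i (an identity matrix)."""
    return np.eye(K, dtype=int)

def binary_codes(K, low=0, high=1):
    """ceil(log2 K) channels; row i holds the binary digits of i, mapped to (low, high)."""
    n_channels = max(1, int(np.ceil(np.log2(K))))
    bits = (np.arange(K)[:, None] >> np.arange(n_channels)[::-1]) & 1
    return np.where(bits == 1, high, low)

codes_1hot = one_hot_codes(6)    # 6 channels for 6 patterns
codes_bin = binary_codes(6)      # 3 channels for 6 patterns
```

For K = 6 the one-hot scheme needs six index channels while the binary scheme needs only three, at the price of index vectors that share nonzero channels.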

Transition matrices among stored attractors

We study the dynamics of memory toggling among the stored attractors. As each memorized state s_i is trained to be associated with a unique index value p_i, the reservoir network’s dynamical state can be switched among different attractors by simply changing the input index value. Several examples are shown in Fig. 2(A) and (B), where p is switched among various values multiple times. The dynamical state activated in the reservoir network switches among the learned attractors accordingly. For instance, at step 800, for a switch from p = 1 to p = 6, the reservoir state switches from a periodic Lissajous state to a chaotic Hindmarsh-Rose (HR) neuron state. However, not all such transitions are successful: a failed example is illustrated in the bottom panel of Fig. 2(C). In this case, after the index switching, the reservoir system falls into an undesired state that does not belong to any of the trained states. The probability of failed switching is small and asymmetric between the two states before and after the switching. A weighted directed network can be defined among all the memorized states where the weights are the success probabilities, which can be represented by a transition matrix. Making index switches at many random time steps and counting the successful switches versus the failed switches, we numerically obtain the transition matrix, as exemplified in Fig. 2(D), from two different randomly generated indexed RCs. Figure 2(E) shows the average transition matrix of an ensemble of 25 indexed RCs. Figure 2(F) shows that the variance of the success rate across different columns (i.e., different destination states) is much larger than the variance across different rows (i.e., different starting states), implying that the success rate is significantly more dependent on the destination attractor than on the starting attractor.
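The counting procedure behind the transition matrix can be sketched as follows. The switching records are hypothetical, and the last two lines show only one possible way to compare the spread over destination states with the spread over starting states; the exact definition used for Fig. 2(F) is not specified here.

```python
import numpy as np

def transition_matrix(trials, n_states):
    """Success-rate matrix: entry [i, j] is the fraction of successful switches from state i to j."""
    success = np.zeros((n_states, n_states))
    total = np.zeros((n_states, n_states))
    for start, dest, ok in trials:
        total[start, dest] += 1
        success[start, dest] += bool(ok)
    with np.errstate(invalid="ignore", divide="ignore"):
        return np.where(total > 0, success / total, np.nan)   # NaN where no switch was tried

# Hypothetical switching records: (starting state, destination state, switch succeeded?)
trials = [(0, 1, True), (0, 1, True), (0, 1, False), (1, 0, True),
          (0, 2, True), (2, 0, True), (1, 2, False), (2, 1, True)]
T = transition_matrix(trials, n_states=3)

# Spread of mean success rates over destinations (columns) versus over starting states (rows).
var_over_destinations = np.nanvar(np.nanmean(T, axis=0))
var_over_starts = np.nanvar(np.nanmean(T, axis=1))
```

Rows index the starting attractor and columns the destination attractor, matching the row/column convention used in the text.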

A, B By changing the index value p as in panel (A), one can toggle among different states shown in panel (B) that have been memorized as attractors in the indexed memory RC (dimensions vx and vz are not shown). C An instance of a failed switch, where the memory RC evolves to an untrained state after changing p. D Two typical transition matrices where six different states [as the ones in Fig. 1(B)] have been memorized. E The average of transition matrices from 25 memory RCs with different random seeds and the same settings as in panel (D). F Variances in the success rate with respect to the starting and destination attractors, revealing a stronger dependence on the latter. The result is from the ensemble of 25 RC networks, each trained with ten different chaotic attractors. G A deliberately chosen memory RC with 16 memory states that has relatively low success rates among many states, used for visualizing the basin structures shown below. H Attracting regions of two different destination states on the manifold of a starting state. The memory RC initially operates on this shell-shaped starting attractor, and the index p is toggled at different time steps when the memory RC is at different positions on this attractor. Such a position can determine whether the switching is successful, where the successful positions are marked blue and failed positions are marked lighter orange. I Local two-dimensional slices of the basin structures of the two destination states from panel (H) in the high-dimensional RC hidden space. Again, the blue and lighter orange regions mark the basins of the memorized state and of some untrained states leading to failed switches, respectively. The quantities ϵ1 and ϵ2 define the scales of perturbations to the RC hidden space in two randomly chosen perpendicular directions. The ϵ1 = ϵ2 = 0 origin points are the RC hidden states taken from random time steps while the memory RC operates on the corresponding destination states.

What is the origin of the asymmetric dependence in the transition matrices, and what is the dynamical mechanism behind the switch failures? Two observations are helpful. The first concerns the dynamical consequence of a switch in the index value p. Incorporating this term of p into the reservoir computer is equivalent to adding an adjustable bias term to each neuron in the reservoir hidden layer. Different values of p thus directly result in different bias values on the neurons and different dynamics. The same indexed RC under different values of p can be treated as different dynamical systems. Second, note that, during a switch, one does not directly intervene in the state input u or the hidden state r of the RC network, but only changes the value of p. Consequently, a switch in p as in Fig. 2(A) switches the dynamical equations of the RC network while keeping the RC hidden state r_final and the output v_final at the last time step, which are passed from the previous dynamical system to the new system after switching. This pair of r_final and v_final becomes the initial hidden state and initial input under the new dynamical equation of the RC.
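The bias interpretation can be made explicit with a generic leaky echo-state update. The form below is an assumption for illustration (the paper defers its exact equations to the SI); the leakage α, the recurrent matrix W_r, and the input matrix W_in are standard echo-state-network symbols, not notation confirmed by the source.

```latex
\mathbf{r}(t+1) = (1-\alpha)\,\mathbf{r}(t)
  + \alpha \tanh\!\big( W_r\,\mathbf{r}(t) + W_{\mathrm{in}}\,\mathbf{u}(t) + \mathbf{b}_p \big),
\qquad
\mathbf{b}_p \equiv W_{\mathrm{index}}\, p .
```

For a fixed index value, b_p is a constant vector, so each p selects one member of a family of dynamical systems that share W_r and W_in. A switch from p_i to p_j replaces b_{p_i} by b_{p_j} while the pair (r_final, v_final) is carried over unchanged as the initial condition of the new system.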

The two observations suggest examining the basin of attraction of the trained state in the memory RC hidden space under the corresponding index value, which generally does not occupy the entire phase space. Figure 2(I) shows the basin structure of two arbitrary states in an indexed RC trained with 16 attractors. The blue regions, leading to the trained states, leave some space for the orange regions that lead to untrained states and failed switches. If the RC state at the last time step before switching lies outside the blue region, the RC network will evolve to an undesired state, and a switch failure will occur. We further plot the points from the attractor before switching in blue (successful) and orange (unsuccessful), as shown in Fig. 2(H). They are the projections of the basin structure of the new attractors onto the previous attractor. This picture provides an explanation for the strong dependence of success rates on the destination states. In particular, the success rate is determined by two factors: the relative size of the basin of the new state after switching and the degree of overlap between the previous attractor and the new basin. While the degree of overlap depends on both the starting and destination states, the relative size of the new state basin is solely determined by the destination state, resulting in a strong dependence of the switching success rate on the destination state. [Further illustrations of (i) the basin structure of the memorized states in an indexed RC, (ii) projections of the basin structure of the destination state back to the starting state attractor, and (iii) how switch success is strongly affected by the basin structure of the destination state are provided in SI.]

Control strategies for achieving high memory transition success rates

For a memory system to be reliable, accessible, and practically useful, a high success rate of switching from one memorized attractor to another upon recall is essential. As this switching failure issue is rooted in the question of whether an initial condition is inside the attracting basin of the destination state, this kind of failure can be common for models that memorize not exactly the (static) training data but the dynamical rules of the target states and regenerate the target states by evolving the memorized dynamical rules. Even if one uses a series of networks to memorize the target states separately, the problem of properly initializing each memory network during a switch or a recall persists. A possible solution to this initial state problem is to optimize the training scheme or the RNN architecture to make the memorized state globally attractive in the entire hidden space, which is an unsolved problem at present. Here we focus on noninvasive control strategies to enhance the recall or transition success rates without altering the training technique or the RC architecture, assuming a memory RC is already trained and fixed. The established connections or weights will not be modified, ensuring that the memory attractors already in place are preserved.

The first strategy, named “tactical detour,” uses some successful pathways in the transition matrix to build indirect detours that enhance the overall transition success rates. Instead of switching from an initial attractor to a destination attractor directly, going through a few other intermediate states can result in a high success rate, as exemplified in Fig. 3(A). This method can be relatively simple to implement in many scenarios, as all that is needed is to switch the p value a few more times. However, this strategy has two limitations. First, it is necessary to know some information about the transition matrix to search for an appropriate detour and estimate the success rate. Second, the dynamical mechanism behind failed switching suggests that the success rate mostly depends on the relative size of the attracting basin of the destination state, implying that a state with a small attracting basin is difficult to reach from most other states. Consequently, the success rate achieved with a detour can still fall below 100%. As exemplified in Fig. 3(B), the success rate with detours, which can significantly improve the overall success rate within just a few steps, can saturate at a level lower than one.
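A detour search over a known transition matrix can be sketched as a small dynamic program. This is a minimal sketch, not the authors' procedure: the function name `best_detour` and the toy matrix are hypothetical, and it assumes that each switch along a route succeeds independently with the probability given in the matrix, so that the route success rate is the product of the individual entries.

```python
import numpy as np

def best_detour(T, start, dest, max_detours):
    """Return (probability, path) of the best route with at most `max_detours`
    intermediate states, assuming independent switch successes."""
    T = np.asarray(T, dtype=float)
    n = T.shape[0]
    # layer[s] = (probability, path) of the best route start -> s
    # that uses exactly the current number of switches.
    layer = {start: (1.0, [start])}
    best_prob, best_path = 0.0, None
    for _ in range(max_detours + 1):  # at most max_detours + 1 switches in total
        nxt = {}
        for s, (prob, path) in layer.items():
            for t in range(n):
                if t == s:
                    continue  # switching to the current state is not a switch
                cand = prob * T[s, t]
                if cand > nxt.get(t, (0.0, None))[0]:
                    nxt[t] = (cand, path + [t])
        layer = nxt
        if dest in layer and layer[dest][0] > best_prob:
            best_prob, best_path = layer[dest]
    return best_prob, best_path

# Toy transition matrix (made-up numbers): the direct switch 0 -> 2 is poor.
T_demo = np.array([
    [0.0, 0.9, 0.3],
    [0.9, 0.0, 0.95],
    [0.8, 0.9, 0.0],
])
direct = best_detour(T_demo, start=0, dest=2, max_detours=0)
detour = best_detour(T_demo, start=0, dest=2, max_detours=1)
print("direct:", direct)
print("one detour:", detour)
```

In the toy matrix the single detour through state 1 raises the success probability from 0.3 to about 0.855 but cannot reach one, because every route must end with a switch into the hard-to-reach destination. This is the saturation effect described above.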

A Achieving higher switching success rates using detours, where the switching from attractor 2 to attractor 6 via a detour through attractor 4 significantly increases the success rate. The right panel illustrates the improved transition matrix with detours as compared to the original one on the left. B Average success rate with different maximum detour lengths. Only a minimal detour is usually needed: a single detour step can remove more than two-thirds of the failure probability. The results are obtained from the same ensemble of memory RCs as in Fig. 2(F). C An instance of feedback control to correct a failed switch, following the scheme in panel (D). The switch at the 400th time step leads to an untrained static state. Since the classifier RC cannot recognize the memory RC output as the desired state, a short random signal is applied to perturb the memory RC, which adjusts the state of the memory RC to achieve a successful retrieval. D Illustration of the controlled memory retrieval by a classifier RC and random signals, where a classifier RC is used to identify the output from the memory RC. The outcome of this identification decides whether to apply a random perturbation or to use the memory RC output as the final result. E The rates of successfully corrected failed switches by the control mechanism illustrated in (C) and (D). The denominator of this correction success rate is the number of failed switches, not all switches. Each perturbation is implemented by ten steps of standard Gaussian noise. Just one “perturb and classify” cycle can correct about 80% of the failed switches. The results of this approach with different parameter choices are shown in SI. F Performance of the cue-based method to correct failed switches with respect to cue length. Within 25 steps of the cue, the correction success rate reaches 99%. Panels (E) and (F) are generated from the same ensemble of 25 memory RCs, each trained to memorize the six attractors as in Fig. 1.

Our second strategy is of the feedback control type, with a classifier reservoir computer and random signals to perturb the memory reservoir computer until the latter reaches the desired memory state, as illustrated and explained in Fig. 3(C, D). This trial-and-error approach is conceptually similar to the random memory search model in psychology (ref. 54). A working example is shown in Fig. 3(C), where the input index p first activates certain outputs from the memory RC, which are fed to a classifier reservoir computer trained to distinguish among the target states and between the target states and non-target states. As detailed in SI, a simple training of this classifier RC can result in a remarkably high accuracy. The output of the classifier RC is compared with the index value. If they agree, the output signal represents the correct attractor; otherwise, a random signal is injected into the memory RC to activate a different outcome. The feedback loop continues to operate until the correct attractor is reached. This control strategy can lead to a nearly perfect switch success rate, at the cost of a possibly long switching time. Let T_n be the time duration that the random perturbation lasts, T_c be the time for making a decision about the output of the classifier, and η_i,j be the switching success rate from a starting attractor s_i to a destination attractor s_j. The estimated time for such a feedback control system to reach the desired state is T_attain,i,j = (T_n + T_c)/η_i,j, which defines an asymmetric distance between each pair of memorized attractors.
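The time estimate above is the mean of a geometric waiting time: if every perturb-and-classify cycle succeeds independently with the same probability η, the expected number of cycles is 1/η and each cycle costs T_n + T_c steps. The short sketch below checks this numerically. The independence and constant-η assumptions, the function names, and the numerical values are illustrative choices, not details given in the source.

```python
import numpy as np

def expected_reach_time(t_noise, t_classify, eta):
    """Closed-form estimate (T_n + T_c) / eta from the text."""
    return (t_noise + t_classify) / eta

def simulate_reach_time(t_noise, t_classify, eta, n_runs=100_000, seed=0):
    """Monte Carlo mean, assuming independent cycles with success probability eta."""
    rng = np.random.default_rng(seed)
    cycles = rng.geometric(eta, size=n_runs)  # cycles needed until the first success
    return float(np.mean(cycles * (t_noise + t_classify)))

# Hypothetical values: 10 noise steps, 20 classification steps, eta = 0.25.
estimate = expected_reach_time(10, 20, 0.25)
simulated = simulate_reach_time(10, 20, 0.25)
print("closed form:", estimate, "simulated:", simulated)
```

Because η_i,j generally differs from η_j,i, the resulting time from s_i to s_j differs from the time from s_j to s_i, which is why the quantity behaves as an asymmetric distance.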

To provide a quantitative estimate of the performance of this strategy, we run 90,000 switchings in 25 different memory RCs trained with the six periodic and chaotic states illustrated in Fig. 1(B). The size of the reservoir network is 1000, and other hyperparameter values are listed in SI. Among the 90,000 switchings, 8,110 trials (about 9%) failed, which we use as a pool to test the control strategies. To make the results generic, we test 12 different control settings with different parameters: four different lengths of each noisy perturbation period (1 step, 3 steps, 10 steps, and 30 steps), with Gaussian white noise at three different levels (standard deviations σ = 0.3, σ = 1, and σ = 3). We find that with an appropriate choice of the control parameters, 10 periods of 10 steps of noise perturbations (so 100 steps of perturbations in total) can eliminate 99% of the failed switchings, as illustrated in Fig. 3(E). The full results of the 12 different control settings are presented and discussed in SI.

Our third control strategy is motivated by daily experience: recalling an object or an event can be facilitated by some relevant, reminding cue signals. We propose using a cue signal to “warm up” the memory reservoir computer after switching the index p to the value associated with the attractor to be recalled. The advantage is that the memory access transition matrix is no longer needed, but the success depends on whether the cue is relevant and strong enough. The cue signals are thus state-dependent: different attractors require different cues, so an additional memory device may be needed to store the warming signals, which contain less information than the attractors to be recalled. This leads to a hierarchical structure of memory retrieval: (a) a scalar or vector index p, (b) a short warming signal, and (c) the complete memorized attractor, similar to the workings of human memory suggested in ref. 55. We also examine whether state-independent cues (uniform cues for all the memory states) can be helpful, and find that in many cases a simple globally uniform cue can make most memorized attractors almost randomly accessible, but there is also a probability that this cue almost entirely blocks a few memory states.

For this third, cue-based control strategy, we again use the pool of 8,110 failed switchings to test the performance. As shown in Fig. 3(F), the success rate rises with the cue length, approaching 100% nearly exponentially. About 90% of the failed switchings can be eliminated with just 8 steps of cue, and 24 steps of cue can eliminate more than 99% of the failed switchings.
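As a rough consistency check of the two quoted numbers, suppose the fraction f(L) of failed switchings that remain uncorrected after a cue of length L decays as a pure exponential. This is an idealization introduced here only for illustration; the decay constant τ is a hypothetical symbol, not a quantity reported in the source.

```latex
f(L) = e^{-L/\tau}, \qquad
f(8) \approx 0.1 \;\Rightarrow\; \tau = \frac{8}{\ln 10} \approx 3.5 \ \text{steps}, \qquad
f(24) = f(8)^{3} \approx 10^{-3}.
```

Under this idealization a 24-step cue would leave roughly 0.1% of the failed switchings uncorrected, which is compatible with the statement that more than 99% are eliminated.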

Scaling law for memory capacity

Intuitively, a larger RNN in the hidden layer would enable more dynamical attractors to be memorized. Quantitatively, such a relation can be characterized by a scaling law between the number K of attractors and the network size N. Our numerical method to uncover the scaling law is as follows. For a fixed pair of (K, N) values, we train an ensemble of reservoir networks, each of size N, using the K dynamical attractors. The fraction of the networks with validation errors less than an empirical threshold gives the success rate of memorizing the patterns, which in general increases with N. A critical network size N_c can be defined as the size at which the success rate is about 50%. (Here, we use 50% as the threshold to minimize the potential error in N_c due to random fluctuations. In SI, we provide results with 80% as the success rate threshold. The conclusions remain the same under this change.)
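The two steps of this procedure, computing an ensemble success rate and locating the 50% crossing, can be sketched as follows. This is a minimal illustration under stated assumptions: the function names, the linear interpolation between tested sizes, and all numbers are hypothetical, and the source does not say how N_c is extracted from the success-rate curve.

```python
import numpy as np

def success_rate(validation_errors, error_threshold):
    """Fraction of ensemble members whose validation error is below the threshold."""
    errors = np.asarray(validation_errors, dtype=float)
    return float(np.mean(errors < error_threshold))

def critical_size(sizes, rates, level=0.5):
    """Linearly interpolate the network size where the rate first crosses `level`.

    Returns None if the curve never crosses the level on the tested sizes.
    """
    sizes = np.asarray(sizes, dtype=float)
    rates = np.asarray(rates, dtype=float)
    for k in range(1, len(sizes)):
        lo, hi = rates[k - 1], rates[k]
        if lo < level <= hi:
            frac = (level - lo) / (hi - lo)
            return float(sizes[k - 1] + frac * (sizes[k] - sizes[k - 1]))
    return None

# Made-up example for one fixed K: success rates measured at four network sizes.
rate_demo = success_rate([0.01, 0.2, 0.03, 0.5], error_threshold=0.1)
n_c = critical_size([100, 200, 300, 400], [0.0, 0.25, 0.75, 1.0])
print("ensemble success rate:", rate_demo)
print("estimated N_c:", n_c)
```

Repeating the estimate for several values of K would give the pairs (K, N_c) from which a scaling relation can be read off.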