A growing number of tools enable users to make online data representations, like charts, that are accessible for people who are blind or have low vision. However, most tools require an existing visual chart that can then be converted into an accessible format.

This creates barriers that prevent blind and low-vision users from building their own custom data representations, and it can limit their ability to explore and analyze important information.

A team of researchers from MIT and University College London (UCL) wants to change the way people think about accessible data representations.

They created a software system called Umwelt (which means “environment” in German) that can enable blind and low-vision users to build customized, multimodal data representations without needing an initial visual chart.

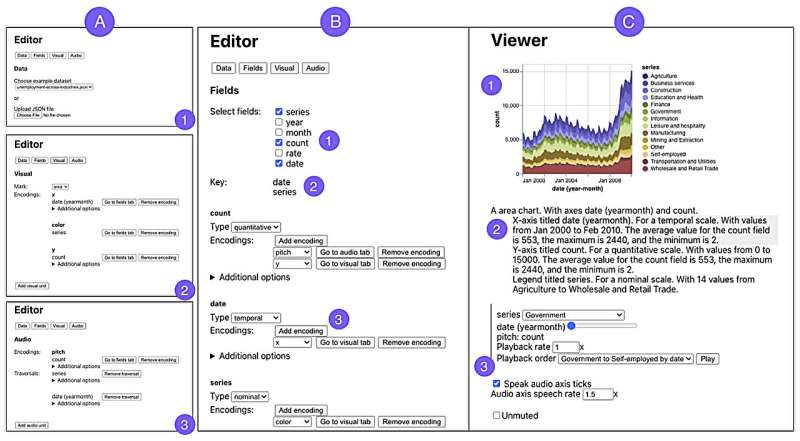

Umwelt, an authoring environment designed for screen-reader users, incorporates an editor that allows someone to upload a dataset and create a customized representation, such as a scatterplot, that can include three modalities: visualization, textual description, and sonification. Sonification involves converting data into nonspeech audio.

The system, which can represent a variety of data types, includes a viewer that enables a blind or low-vision user to interactively explore a data representation, seamlessly switching between modalities to interact with the data in different ways.

The researchers conducted a study with five expert screen-reader users who found Umwelt to be useful and easy to learn. In addition to offering an interface that empowered them to create data representations (something they said was sorely lacking), the users said Umwelt could facilitate communication between people who rely on different senses.

“We have to remember that blind and low-vision people aren’t isolated. They exist in these contexts where they want to talk to other people about data,” says Jonathan Zong, an electrical engineering and computer science (EECS) graduate student and lead author of a paper introducing Umwelt.

“I’m hopeful that Umwelt helps shift the way that researchers think about accessible data analysis. Enabling the full participation of blind and low-vision people in data analysis involves seeing visualization as just one piece of this bigger, multisensory puzzle.”

Joining Zong on the paper are fellow EECS graduate students Isabella Pedraza Pineros and Mengzhu “Katie” Chen; Daniel Hajas, a UCL researcher who works with the Global Disability Innovation Hub; and senior author Arvind Satyanarayan, associate professor of computer science at MIT who leads the Visualization Group in the Computer Science and Artificial Intelligence Laboratory.

The paper will be presented at the ACM Conference on Human Factors in Computing Systems (CHI 2024), held May 11–16 in Honolulu. The findings are published on the arXiv preprint server.

De-centering visualization

The researchers previously developed interactive interfaces that provide a richer experience for screen-reader users as they explore accessible data representations. Through that work, they realized that most tools for creating such representations involve converting existing visual charts.

Aiming to decenter visual representations in data analysis, Zong and Hajas, who lost his sight at age 16, began co-designing Umwelt more than a year ago.

At the outset, they realized they would need to rethink how to represent the same data using visual, auditory, and textual forms.

“We wanted to put a common denominator behind the three modalities. By creating this new language for representations, and making the output and input accessible, the whole is greater than the sum of its parts,” says Hajas.

To build Umwelt, they first considered what is unique about the way people use each sense.

For instance, a sighted user can see the overall pattern of a scatterplot and, at the same time, move their eyes to focus on different data points. But for someone listening to a sonification, the experience is linear, since the data are converted into tones that must be played back one at a time.
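To make that contrast concrete, here is a minimal Python sketch of a generic pitch-mapping sonification. It is not Umwelt’s implementation: the `Tone` class, the `sonify` function, the 220 to 880 Hz pitch range, and the quarter-second note length are all illustrative assumptions. What it shows is the linearity: each value becomes a tone scheduled to start only after the previous one ends.

```python
from dataclasses import dataclass


@dataclass
class Tone:
    start: float      # seconds from the beginning of playback
    duration: float   # seconds
    frequency: float  # Hz


def sonify(values, low_hz=220.0, high_hz=880.0, note_seconds=0.25):
    """Map each value to a pitch (hypothetical scheme) and schedule tones in sequence."""
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # avoid dividing by zero when all values are equal
    tones = []
    for i, v in enumerate(values):
        # Linearly interpolate the value into the chosen pitch range
        freq = low_hz + (v - lo) / span * (high_hz - low_hz)
        # Tone i starts when tone i - 1 ends, so playback is strictly sequential
        tones.append(Tone(start=i * note_seconds, duration=note_seconds, frequency=freq))
    return tones


tones = sonify([3, 7, 5])
for t in tones:
    print(f"{t.start:.2f}s  {t.frequency:.0f} Hz")
```

With this scheme, listening time grows in proportion to the number of data points, whereas a sighted reader can take in the overall pattern of a chart at a glance.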

“If you’re only thinking about directly translating visual features into nonvisual features, then you miss out on the unique strengths and weaknesses of each modality,” Zong adds.

They designed Umwelt to offer flexibility, enabling a user to switch easily between modalities when one would better suit their task at a given time.

To use the editor, one uploads a dataset to Umwelt, which employs heuristics to automatically create default representations in each modality.

If the dataset contains stock prices for companies, Umwelt might generate a multiseries line chart, a textual structure that groups data by ticker symbol and date, and a sonification that uses tone length to represent the price for each date, arranged by ticker symbol.
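The article does not spell out Umwelt’s heuristics, so the following Python sketch is only a hypothetical illustration of the general idea: infer each field’s type from its values, then choose defaults for all three modalities from one set of rules. The function names, the dictionary layout, and the made-up ticker symbols and prices are assumptions; only the line chart, the grouping by ticker symbol and date, and the use of tone length for price mirror the stock-price example in the text.

```python
from datetime import date


def infer_type(values):
    """Guess a field's type from its values (a deliberately simple heuristic)."""
    if all(isinstance(v, date) for v in values):
        return "temporal"
    if all(isinstance(v, (int, float)) for v in values):
        return "quantitative"
    return "nominal"


def default_spec(rows):
    """Build hypothetical default visual, text, and audio settings for a table."""
    types = {name: infer_type([row[name] for row in rows]) for name in rows[0]}

    def fields_of(kind):
        return [name for name, t in types.items() if t == kind]

    temporal = fields_of("temporal")
    quantitative = fields_of("quantitative")
    nominal = fields_of("nominal")

    # Time series with a category field: one line per category
    if temporal and quantitative and nominal:
        x, y, series = temporal[0], quantitative[0], nominal[0]
        return {
            "visual": {"mark": "line", "x": x, "y": y, "color": series},
            "text": {"group_by": [series, x]},
            "audio": {"duration": y, "traverse": [series, x]},  # tone length encodes y
        }
    # Two numeric fields: fall back to a scatterplot
    if len(quantitative) >= 2:
        x, y = quantitative[:2]
        return {
            "visual": {"mark": "point", "x": x, "y": y},
            "text": {"group_by": [x]},
            "audio": {"pitch": y, "traverse": [x]},
        }
    raise ValueError("no default representation for these field types")


# Made-up rows shaped like the stock-price dataset described in the article
rows = [
    {"symbol": "AAA", "date": date(2024, 1, 1), "price": 10.0},
    {"symbol": "AAA", "date": date(2024, 1, 2), "price": 11.5},
    {"symbol": "BBB", "date": date(2024, 1, 1), "price": 20.0},
]
spec = default_spec(rows)
print(spec["visual"])  # {'mark': 'line', 'x': 'date', 'y': 'price', 'color': 'symbol'}
```

Because every default is just an entry in an ordinary data structure, a user can override any of them afterward, which fits the role the article gives these heuristics: a starting point rather than a final design.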

The default heuristics are meant to help the user get started.

“In any kind of creative tool, you have a blank-slate effect where it’s hard to know how to begin. That’s compounded in a multimodal tool because you have to specify things in three different representations,” Zong says.

The editor links interactions across modalities, so if a user changes the textual description, that information is adjusted in the corresponding sonification. Someone could use the editor to build a multimodal representation, switch to the viewer for an initial exploration, then return to the editor to make adjustments.
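One common way to get this kind of linking is to have every modality render from a single shared specification, so an edit made through any one editor is picked up by all of them. The Python sketch below illustrates that pattern with two modalities for brevity. `SharedSpec`, `render_text`, `render_audio`, and `render_all` are hypothetical names, and nothing here is taken from Umwelt’s actual code.

```python
from dataclasses import dataclass, field


@dataclass
class SharedSpec:
    """A hypothetical single source of truth that every modality reads from."""
    measure: str                               # the value being represented
    order: list = field(default_factory=list)  # fields used to group and traverse data


def render_text(spec):
    # Text structure: nested groups, with the measure at the leaves
    return " > ".join(spec.order + [spec.measure])


def render_audio(spec):
    # Sonification: play the measure, traversing groups in the same order
    return f"play {spec.measure} for each " + ", then each ".join(spec.order)


def render_all(spec):
    return {"text": render_text(spec), "audio": render_audio(spec)}


spec = SharedSpec(measure="price", order=["symbol", "date"])
before = render_all(spec)

# An edit made through the text editor changes the shared spec...
spec.order = ["date", "symbol"]
# ...so re-rendering updates the sonification as well
after = render_all(spec)
print(before["audio"])  # play price for each symbol, then each date
print(after["audio"])   # play price for each date, then each symbol
```

Since neither renderer keeps its own copy of the grouping, the two modalities cannot drift out of sync; the cost is that every edit must be expressed as a change to the shared spec.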

Helping users discuss data

To test Umwelt, they created a diverse set of multimodal representations, from scatterplots to multiview charts, to ensure the system could effectively represent different data types. Then they put the tool in the hands of five expert screen-reader users.

Study participants mostly found Umwelt to be useful for creating, exploring, and discussing data representations. One user said Umwelt was like an “enabler” that decreased the time it took them to analyze data. The users agreed that Umwelt could help them discuss data more easily with sighted colleagues.

“What stands out about Umwelt is its core philosophy of de-emphasizing the visual in favor of a balanced, multisensory data experience. Often, nonvisual data representations are relegated to the status of secondary considerations, mere add-ons to their visual counterparts. However, visualization is merely one aspect of data representation.

“I appreciate their efforts in shifting this perception and embracing a more inclusive approach to data science,” says JooYoung Seo, an assistant professor in the School of Information Sciences at the University of Illinois at Urbana-Champaign, who was not involved with this work.

Moving forward, the researchers plan to create an open-source version of Umwelt that others can build upon. They also want to integrate tactile sensing into the software system as an additional modality, enabling the use of tools like refreshable tactile graphics displays.

“In addition to its impact on end users, I am hoping that Umwelt can be a platform for asking scientific questions around how people use and perceive multimodal representations, and how we can improve the design beyond this initial step,” says Zong.

More information:

Jonathan Zong et al, Umwelt: Accessible Structured Editing of Multimodal Data Representations, arXiv (2024). DOI: 10.48550/arxiv.2403.00106

This story is republished courtesy of MIT News (web.mit.edu/newsoffice/), a popular site that covers news about MIT research, innovation and teaching.

Citation:

New software enables blind and low-vision users to create interactive, accessible charts (2024, March 27)

retrieved 27 March 2024

from https://techxplore.com/news/2024-03-software-enables-vision-users-interactive.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.