Google wants you to know that Gemini 2.0 Flash should be your favourite AI chatbot. The model boasts greater speed, bigger brains, and more common sense than its predecessor, Gemini 1.5 Flash. After putting Gemini 2.0 Flash through its paces against ChatGPT, I decided to see how Google's new favourite model compares to its older sibling.

As with the earlier matchup, I set up the duel with a few prompts built around common ways anyone might use Gemini, myself included. Could Gemini 2.0 Flash offer better advice for improving my life, explain a complex subject I know little about in a way I could understand, or work out the answer to a complex logic problem and explain its reasoning? Here's how the test went.

Productive choices

If there's one thing AI should be able to do, it's give useful advice. Not just generic tips, but relevant and immediately helpful ideas. So I asked both versions the same question: “I want to be more productive but also have better work-life balance. What changes should I make to my routine?”

Gemini 2.0 was noticeably quicker to respond, even if it was only a second or two faster. As for the actual content, both had some good advice. The 1.5 model broke down four big ideas with bullet points, while 2.0 went for a longer list of 10 ideas explained in short paragraphs.

I liked some of the more specific suggestions from 1.5, such as the Pareto Principle, but beyond that, 1.5 felt like a lot of restating the initial idea, whereas 2.0 felt like it gave me more nuanced life advice for each suggestion. If a friend were to ask me for advice on the subject, I would definitely go with 2.0's answer.

What’s up with Wi-Fi?

A big part of what makes an AI assistant useful isn't just how much it knows; it's how well it can explain things in a way that actually clicks. A good explanation isn't just about listing facts; it's about making something complex feel intuitive. For this test, I wanted to see how both versions of Gemini handled breaking down a technical topic in a way that felt relevant to everyday life. I asked: “Explain how Wi-Fi works, but in a way that makes sense to someone who just wants to know why their internet is slow.”

Gemini 1.5 went with comparing Wi-Fi to radio, which is more of a description than the analogy it claimed to be making. Calling the router the DJ is something of a stretch, too, though the advice about improving the signal was at least coherent.

Gemini 2.0 used a more elaborate metaphor involving a water delivery system, with devices as plants receiving water. The AI extended the metaphor to explain what might be causing problems, such as too many “plants” for the water available and clogged pipes representing provider issues. The “sprinkler interference” comparison was much weaker, but as with the 1.5 version, Gemini 2.0 had practical advice for improving the Wi-Fi signal. Despite being much longer, 2.0's answer arrived slightly faster.

Logic bomb

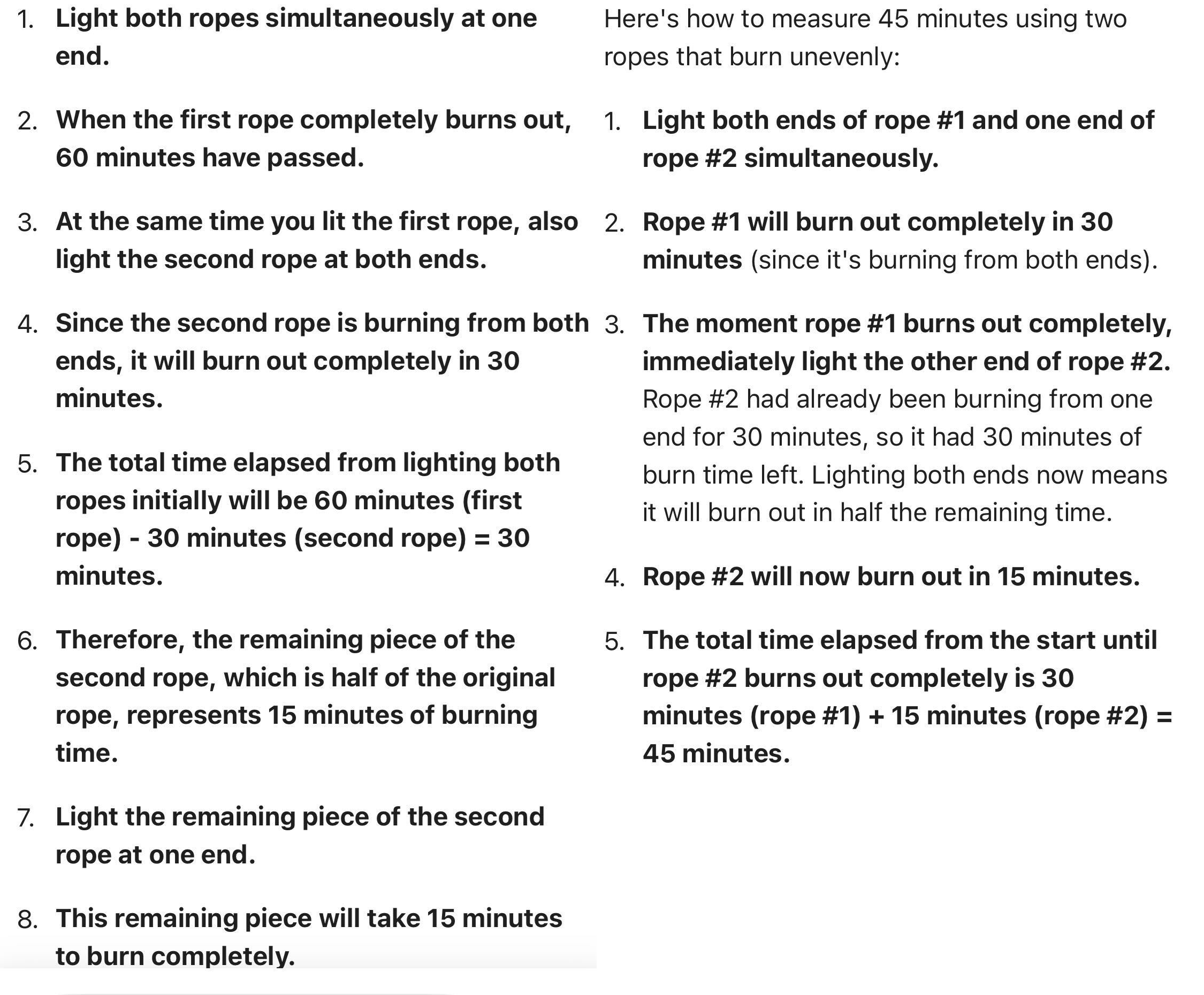

For the last test, I wanted to see how well both versions handled logic and reasoning. AI models are supposed to be good at puzzles, but it's not just about getting the answer right; it's about whether they can explain why an answer is correct in a way that actually makes sense. I gave them a classic puzzle: “You have two ropes. Each takes exactly one hour to burn, but they don't burn at a consistent rate. How do you measure exactly 45 minutes?”

Both models technically gave the correct answer about how to measure the time, but in about as different a way as is possible while staying within the constraints of the puzzle and being correct. Gemini 2.0's answer is shorter, ordered in a way that's easier to follow, and explains itself clearly despite its brevity. Gemini 1.5's answer required more careful parsing, and the steps felt a little out of order. The phrasing was also confusing, especially when it said to light the remaining rope “at one end” when it meant the end that isn't already lit.

For such a compact answer, Gemini 2.0 stood out as noticeably better at solving this kind of logic puzzle.
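Neither model's answer is reproduced here, but the standard solution to this puzzle goes like this: light one rope at both ends and the other rope at one end; when the first rope burns out after 30 minutes, light the second rope's other end, and it finishes 15 minutes later. The minimal Python sketch below checks that logic under a stated assumption: each rope is modeled as a list of hypothetical "pieces" with wildly uneven burn times (each piece burns evenly on its own). All function names are illustrative, not taken from either model's response.

```python
import random


def random_rope(total=60.0, pieces=200, seed=None):
    """Split a rope's total burn time into pieces of very uneven length.

    Each piece burns at a steady rate internally, but the pieces differ
    wildly, which models a rope with an inconsistent burn rate overall.
    """
    rng = random.Random(seed)
    # Cubing skews most pieces short and a few long; the tiny offset avoids zeros.
    weights = [rng.random() ** 3 + 1e-9 for _ in range(pieces)]
    scale = total / sum(weights)
    return [w * scale for w in weights]


def burn_from_one_end(pieces, minutes):
    """Return the pieces still unburned after `minutes` of burning from the front."""
    for i, piece in enumerate(pieces):
        if minutes >= piece:
            minutes -= piece
        else:
            return [piece - minutes] + list(pieces[i + 1:])
    return []


def burn_time_both_ends(pieces):
    """Minutes until a rope lit at both ends burns out completely."""
    remaining = list(pieces)
    left, right = 0, len(remaining) - 1
    elapsed = 0.0
    while left < right:
        # Advance until one of the two flames finishes the piece it is on.
        step = min(remaining[left], remaining[right])
        elapsed += step
        remaining[left] -= step
        remaining[right] -= step
        if remaining[left] == 0:
            left += 1
        if remaining[right] == 0:
            right -= 1
    if left == right:
        # The flames meet inside one piece and burn it from both sides.
        elapsed += remaining[left] / 2
    return elapsed


def measure_45_minutes(rope_a, rope_b):
    """Light A at both ends and B at one end; when A is gone, light B's other end."""
    a_done = burn_time_both_ends(rope_a)          # about 30 minutes
    b_left = burn_from_one_end(rope_b, a_done)   # B has burned from one end meanwhile
    return a_done + burn_time_both_ends(b_left)  # the rest of B takes about 15 more


if __name__ == "__main__":
    rope_a = random_rope(seed=1)
    rope_b = random_rope(seed=2)
    print(f"Rope A gone after {burn_time_both_ends(rope_a):.6f} minutes")
    print(f"Rope B gone after {measure_45_minutes(rope_a, rope_b):.6f} minutes")
```

Lighting both ends works because the two flames together consume the rope's hour of burn time at double speed, so the burn takes 30 minutes no matter where the flames happen to meet. That is also why the "other end" detail that tripped up Gemini 1.5's phrasing matters: relighting the already-burning end would change nothing.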

Gemini 2.0 for speed and clarity

After running the prompts, I found the differences between Gemini 1.5 Flash and Gemini 2.0 Flash clear. Though 1.5 wasn't necessarily useless, it did seem to struggle with specificity and with making useful comparisons. The same goes for its logic breakdown. Were that applied to computer code, you'd have to do a lot of cleanup to get a working program.

Gemini 2.0 Flash was not only faster but more creative in its answers. It seemed much more capable of imaginative analogies and comparisons and far clearer in explaining its own logic. That's not to say it's perfect. The water analogy fell apart a bit, and the productivity advice could have used more concrete examples or ideas.

That said, it was very fast and could clear up those issues with a bit of back-and-forth conversation. Gemini 2.0 Flash isn't the final, perfect AI assistant, but it's definitely a step in the right direction for Google as it strives to outdo itself and rivals like ChatGPT.