- Roblox has always been designed to protect our youngest users; we are now adapting to a growing audience of older users.

- With text, voice, visuals, 3D models, and code, Roblox is in a unique position to succeed with multimodal AI solutions.

- We improve safety across the industry wherever we can, through open source, collaboration with partners, or support for legislation.

Safety and civility have been foundational to Roblox since its inception nearly 20 years ago. On day one, we committed to building safety features, tools, and moderation capabilities into the design of our products. Before we launch any new feature, we’ve already begun thinking about how to keep the community safe from potential harms. Designing features for safety and civility from the outset, including early testing to see how a new feature might be misused, helps us innovate. We continually evaluate the latest research and technology available to keep our policies, tools, and systems as accurate and efficient as possible.

When it comes to safety, Roblox is uniquely positioned. Most platforms began as a place for adults and are now retroactively working to build in protections for teens and children. Our platform, by contrast, was developed from the beginning as a safe, protective space for children to create and learn, and we are now adapting to a rapidly growing audience that is aging up. In addition, the volume of content we moderate has grown exponentially, thanks to exciting new generative AI features and tools that empower even more people to easily create and communicate on Roblox. These are not unexpected challenges: our mission is to connect a billion people with optimism and civility. We are always looking to the future to understand what new safety policies and tools we’ll need as we grow and adapt.

Many of our safety features and tools are based on innovative AI solutions that run alongside an expert team of thousands who are dedicated to safety. This strategic blend of experienced humans and intelligent automation is essential as we work to scale the volume of content we moderate 24/7. We also believe in nurturing partnerships with organizations focused on online safety, and, when relevant, we support legislation that we strongly believe will improve the industry as a whole.

Leading with AI to Safely Scale

The sheer scale of our platform demands AI systems that meet or exceed industry-leading benchmarks for accuracy and efficiency, allowing us to respond quickly as the community grows, policies and requirements evolve, and new challenges arise. Today, more than 71 million daily active users in 190 countries communicate and share content on Roblox. Every day, people send billions of chat messages to their friends on Roblox. Our Creator Store has millions of items for sale, and creators add new avatars and items to Marketplace every day. This will only grow as we continue to expand and enable new ways for people to create and communicate on Roblox.

As the broader industry makes great leaps in machine learning (ML), large language models (LLMs), and multimodal AI, we invest heavily in ways to leverage these new solutions to make Roblox even safer. AI solutions already help us moderate text chat, immersive voice communication, images, and 3D models and meshes. We are now using many of these same technologies to make creation on Roblox faster and easier for our community.

Innovating with Multimodal AI Systems

By its very nature, our platform combines text, voice, images, 3D models, and code. Multimodal AI, in which systems are trained on multiple types of data together to produce more accurate, sophisticated results than a unimodal system, presents a unique opportunity for Roblox. Multimodal systems can detect combinations of content types (such as images and text) that may be problematic in ways the individual elements aren’t. To imagine how this might work, say a kid is using an avatar that looks like a pig. Perfectly fine, right? Now imagine someone else sends a chat message that says “This looks just like you!” That message might violate our policies around bullying.

A model trained only on 3D models would approve the avatar. A model trained only on text would approve the message and ignore the context of the avatar. Only a model trained across both text and 3D models would be able to quickly detect and flag the issue in this example. We are in the early days of these multimodal models, but we envision a not-too-distant future in which our system responds to an abuse report by reviewing an entire experience. It could take the code, the visuals, the avatars, and the communications within that experience as input and determine whether further investigation or consequences are warranted.
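Here is a toy Python sketch of the pig-avatar example, showing how a joint check can catch what separate text and avatar checks miss. The `ChatEvent` schema, the tag names, and the rule-based functions are hypothetical stand-ins for trained models, not Roblox’s system. The sketch only shows the shape of the decision: each unimodal check passes, and only the check that sees the message together with the avatar context flags the pair for review.

```python
from dataclasses import dataclass

@dataclass
class ChatEvent:
    """A chat message plus tags describing the avatar it refers to (hypothetical schema)."""
    text: str
    avatar_tags: set[str]

# Hypothetical unimodal checks standing in for trained text and 3D-model classifiers.
def text_only_flag(text: str) -> bool:
    return any(term in text.lower() for term in ("idiot", "loser"))

def avatar_only_flag(tags: set[str]) -> bool:
    return "hate_symbol" in tags

# Toy joint signal: a comparison phrase is harmless alone, and a pig avatar is
# harmless alone, but together they can read as mocking the avatar's owner.
COMPARISON_PHRASES = ("looks just like you", "looks like you")
CONTEXT_SENSITIVE_TRAITS = {"pig"}

def joint_flag(event: ChatEvent) -> bool:
    compares = any(p in event.text.lower() for p in COMPARISON_PHRASES)
    return compares and bool(event.avatar_tags & CONTEXT_SENSITIVE_TRAITS)

def moderate(event: ChatEvent) -> str:
    if text_only_flag(event.text) or avatar_only_flag(event.avatar_tags):
        return "block"
    if joint_flag(event):
        return "flag_for_review"  # only visible when both modalities are considered
    return "allow"

event = ChatEvent("This looks just like you!", {"pig", "cartoon"})
print(text_only_flag(event.text), avatar_only_flag(event.avatar_tags), moderate(event))
# False False flag_for_review
```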

We’ve already made significant advances using multimodal systems, such as our model that detects policy violations in voice communications in near real time. We intend to share advances like these whenever we see an opportunity to increase safety and civility not just on Roblox but across the industry. In fact, we are sharing our first open source model, a voice safety classifier, with the industry.

Moderating Content at Scale

At Roblox, we review most content types to catch critical policy violations before they appear on the platform. Doing this without causing noticeable delays for the people publishing their content requires speed as well as accuracy. Groundbreaking AI solutions help us make better decisions in real time to keep problematic content off Roblox. If anything does make it through to the platform, we have systems in place to identify and remove that content, including our robust user reporting systems.

We’ve seen the accuracy of our automated moderation tools surpass that of human moderators on repeatable, simple tasks. By automating these simpler cases, we free our human moderators to spend most of their time on what they do best: the more complex tasks that require critical thinking and deeper investigation. When it comes to safety, however, we know that automation cannot completely replace human review. Our human moderators are invaluable for continually overseeing and testing our ML models for quality and consistency, and for creating high-quality labeled data sets to keep our systems current. They help identify new slang and abbreviations in all 16 languages we support and flag cases that come up frequently so that the system can be trained to recognize them.

We know that even high-quality ML systems can make mistakes, so we have human moderators in our appeals process. Our moderators help us get it right for the person who filed the appeal, and they can flag the need for further training on the types of cases where mistakes were made. In this way, our system grows increasingly accurate over time, essentially learning from its mistakes. Most important, humans are always involved in any critical investigation of high-risk cases, such as extremism or child endangerment. For these cases, we have a dedicated internal team working to proactively identify and remove malicious actors and to investigate difficult cases in our most critical areas. This team also partners with our product team, sharing insights from its work to continually improve the safety of our platform and products.
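The appeals feedback loop described above could be sketched as follows. The sketch assumes a hypothetical `Appeal` record that stores the automated decision alongside the moderator’s final call. Overturned decisions become corrected labeled examples, and policy categories that accumulate errors are flagged for further training. The field names, labels, and `min_errors_to_flag` threshold are illustrative assumptions.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass
class Appeal:
    content_id: str
    category: str        # policy area of the automated decision (hypothetical labels)
    model_decision: str  # e.g. "violating" or "ok"
    human_decision: str  # final label from the moderator who reviewed the appeal

def process_appeals(appeals: list[Appeal], min_errors_to_flag: int = 2):
    """Return (corrected training examples, categories needing more training)."""
    corrected = []
    errors_by_category = Counter()
    for a in appeals:
        if a.human_decision != a.model_decision:
            # The human label overrides the model and becomes a new labeled example.
            corrected.append((a.content_id, a.category, a.human_decision))
            errors_by_category[a.category] += 1
    flagged = sorted(c for c, n in errors_by_category.items() if n >= min_errors_to_flag)
    return corrected, flagged
```

Flagging by category, not by individual case, reflects the idea in the text: moderators fix the individual outcome, and recurring error types are what feed back into training.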

Moderating Communication

Our text filter has been trained on Roblox-specific language, including slang and abbreviations. All 2.5 billion chat messages sent every day on Roblox go through this filter, which is adept at detecting policy-violating language. The filter detects violations in all the languages we support, which is especially important now that we’ve launched real-time AI chat translation.

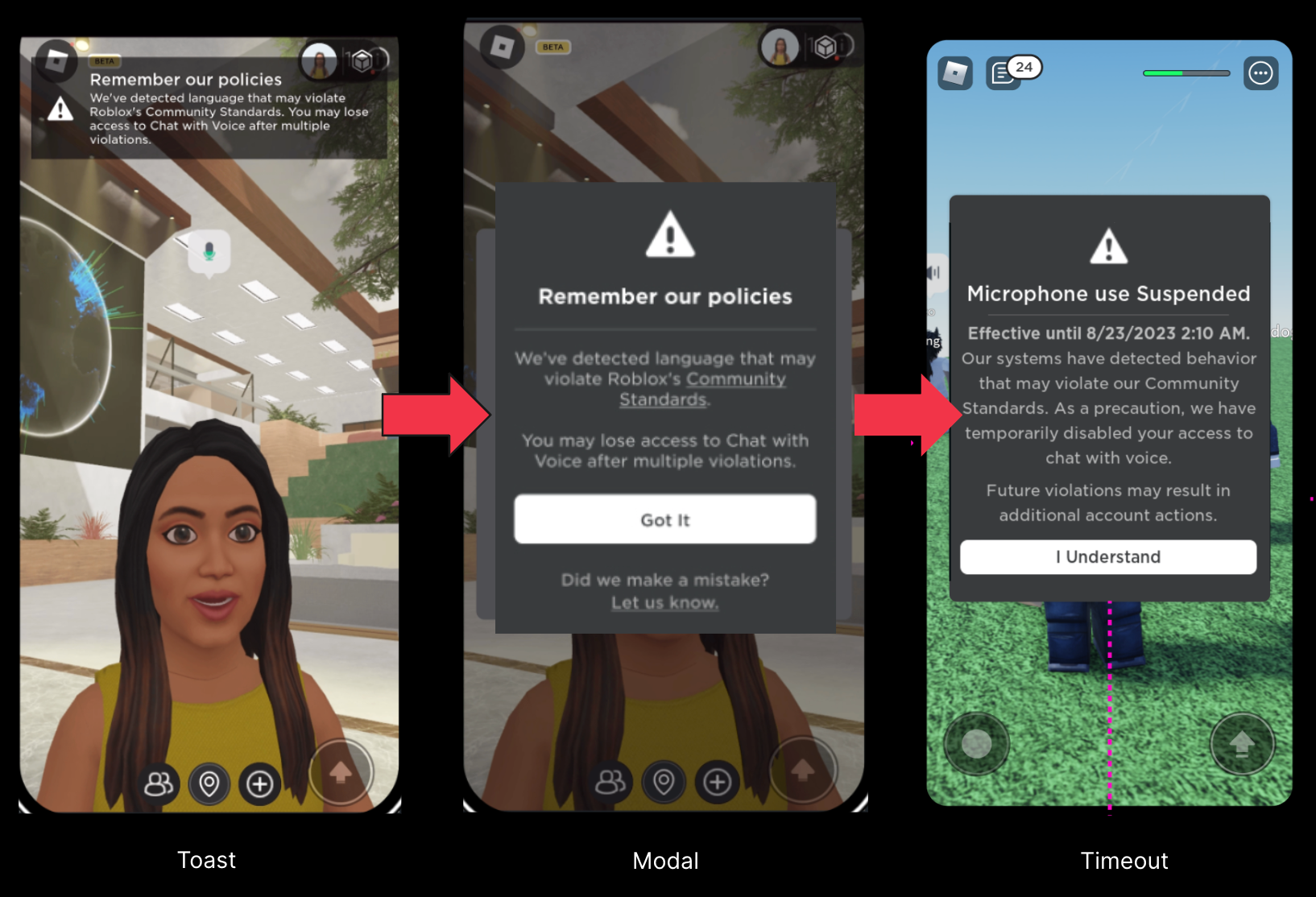

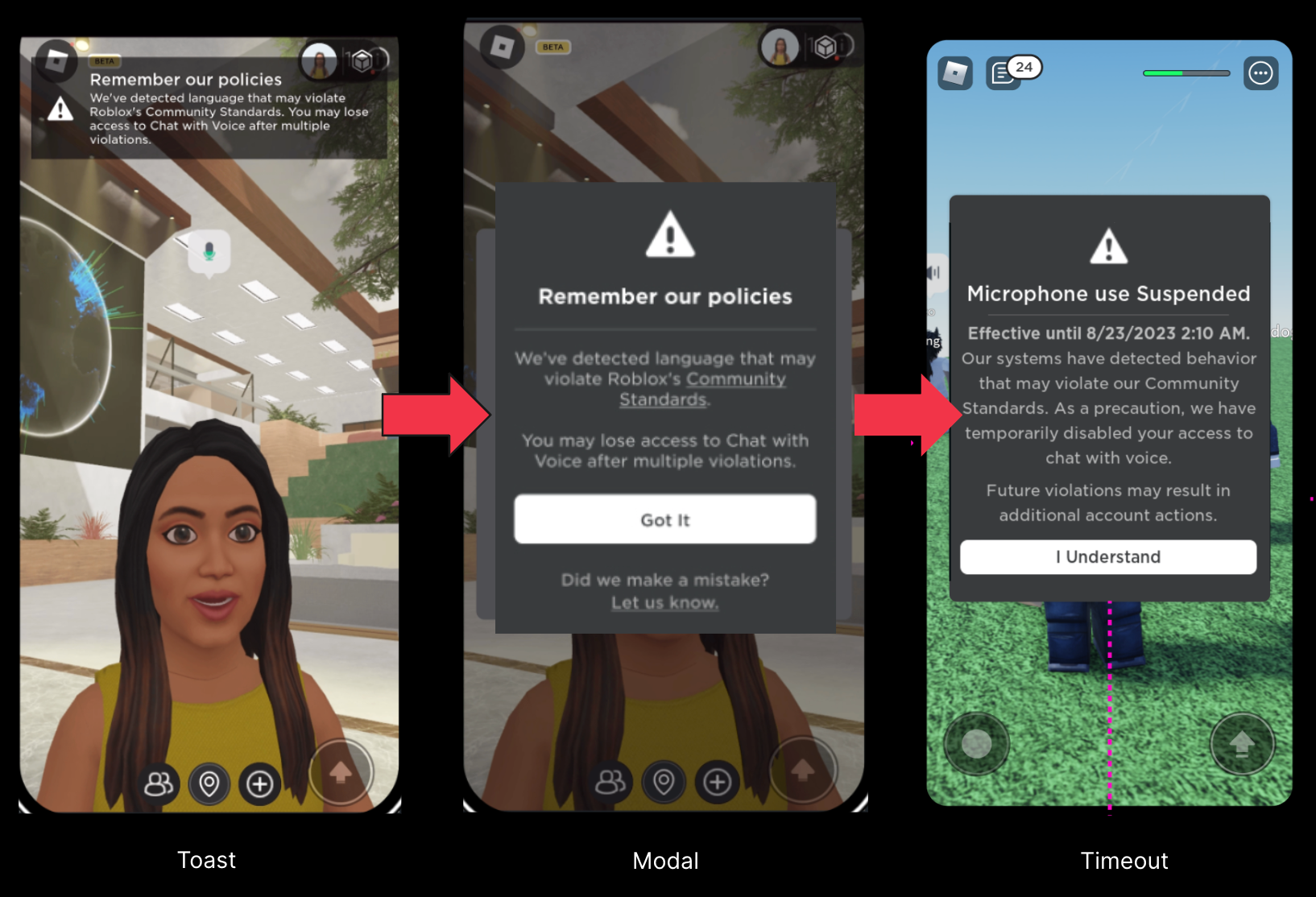
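With real-time translation, a single chat message can reach other users in more than one language. This minimal sketch shows one way to handle that: every language version of the message is checked, and if any version violates policy, none is delivered. The `violates` check here is hypothetical and uses tiny per-language term lists. A real filter is a trained model, not a word list.

```python
# Hypothetical per-language term lists; a production filter is a trained
# multilingual model, not a word list.
BLOCKED_TERMS = {
    "en": {"badword"},
    "es": {"palabrota"},
}

def violates(text: str, lang: str) -> bool:
    """Toy check: does any token (punctuation stripped) match the language's list?"""
    terms = BLOCKED_TERMS.get(lang, set())
    return any(token.strip(".,!?") in terms for token in text.lower().split())

def deliverable_versions(original: str, source_lang: str,
                         translations: dict[str, str]) -> dict[str, str]:
    """Return the versions to deliver, keyed by language; empty if any version violates."""
    versions = {source_lang: original, **translations}
    if any(violates(text, lang) for lang, text in versions.items()):
        return {}  # block every rendering so a bad translation can't slip through
    return versions
```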

We’ve previously shared how we moderate voice communication in real time with an in-house, custom voice detection system. The innovation here is the ability to go directly from live audio to an AI system labeling that audio as policy-violating or not, within seconds. When we began testing our voice moderation system, we found that, in many cases, people were violating our policies unintentionally because they weren’t familiar with our rules. So we developed a real-time safety system that notifies people when their speech violates one of our policies.

These notifications are an early, gentle warning, akin to being politely asked to watch your language in a public park with young children around. In testing, these interventions have proved successful in reminding people to be respectful and in directing them to our policies to learn more. Compared against engagement data, the results of our testing are encouraging and indicate that these tools may effectively keep bad actors off the platform while encouraging truly engaged users to improve their behavior on Roblox. Since rolling out real-time safety to all English-speaking users in January, we have seen a 53 percent reduction in voice-related abuse reports per daily active user.
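One way to keep real-time notifications gentle is to rate-limit them per speaker. This sketch assumes a hypothetical upstream classifier that labels each short audio segment as violating or not. The `VoiceSafetyNotifier` class and its 60-second default cooldown are illustrative assumptions, not details of Roblox’s system.

```python
from dataclasses import dataclass, field

@dataclass
class VoiceSafetyNotifier:
    """Hypothetical notifier: warns a speaker when a segment is labeled violating,
    at most once per cooldown window so warnings stay gentle rather than constant."""
    cooldown_s: float = 60.0
    last_warned: dict[str, float] = field(default_factory=dict)

    def on_segment(self, user_id: str, timestamp_s: float, violating: bool) -> bool:
        """Return True if a notification should be shown for this audio segment."""
        if not violating:
            return False
        last = self.last_warned.get(user_id)
        if last is not None and timestamp_s - last < self.cooldown_s:
            return False  # already warned recently; don't nag
        self.last_warned[user_id] = timestamp_s
        return True
```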

Moderating Creation

For visual assets, including avatars and avatar accessories, we use computer vision (CV). One technique involves taking pictures of the item from multiple angles. The system then reviews those pictures to determine the next step. If nothing seems amiss, the item is approved. If something clearly violates a policy, the item is blocked and we tell the creator what we think is wrong. If the system is not sure, the item is sent to a human moderator, who takes a closer look and makes the final decision.
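The three outcomes described above map naturally onto two confidence thresholds. This minimal sketch assumes a hypothetical CV model that returns a violation probability for each rendered angle. Taking the maximum means a problem visible from any single angle drives the decision. The threshold values are placeholders, not Roblox’s settings.

```python
from enum import Enum

class Decision(Enum):
    APPROVE = "approve"
    BLOCK = "block"
    HUMAN_REVIEW = "human_review"

def route_item(view_scores: list[float],
               approve_below: float = 0.2,
               block_at_or_above: float = 0.9) -> Decision:
    """Route an asset given per-angle violation probabilities from a CV model."""
    if not view_scores:
        return Decision.HUMAN_REVIEW  # nothing to judge automatically
    worst = max(view_scores)  # a violation visible from any one angle counts
    if worst >= block_at_or_above:
        return Decision.BLOCK  # clear violation: block and tell the creator why
    if worst < approve_below:
        return Decision.APPROVE  # nothing seems amiss
    return Decision.HUMAN_REVIEW  # uncertain: a human makes the final call
```

Widening the gap between the two thresholds sends more items to human review. Narrowing it automates more decisions at the cost of more automated mistakes.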

We run a version of this same process for avatars, accessories, code, and full 3D models. For full models, we go a step further and assess all the code and other elements that make up the model. If we’re assessing a car, we break it down into its components (the steering wheel, seats, tires, and the code underneath it all) to determine whether any of them might be problematic. If an avatar looks like a puppy, we need to assess whether the ears, the nose, or the tongue are problematic.
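Breaking a model into its components amounts to a tree walk. In this sketch, the `Part` type and the `is_problematic` callback are hypothetical. In practice the callback would dispatch to whatever mesh, texture, or code checks apply to each part. The function returns a readable path to every flagged part so a reviewer or creator can see where the issue lies.

```python
from dataclasses import dataclass, field

@dataclass
class Part:
    name: str
    kind: str  # e.g. "model", "mesh", or "script" (hypothetical labels)
    children: list["Part"] = field(default_factory=list)

def find_problem_parts(part: Part, is_problematic, path: tuple = ()) -> list[str]:
    """Depth-first walk returning the path to every part the checker flags."""
    here = path + (part.name,)
    flagged = [" / ".join(here)] if is_problematic(part) else []
    for child in part.children:
        flagged.extend(find_problem_parts(child, is_problematic, here))
    return flagged
```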

We also need to be able to assess in the other direction. What if the individual components are all perfectly fine but their overall effect violates our policies? A mustache, a khaki jacket, and a red armband, for example, are not problematic on their own. But imagine them assembled together on someone’s avatar, with a cross-like symbol on the armband and one arm raised in a Nazi salute, and the problem becomes clear.
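Assessing in the other direction means checking the assembled avatar, not just each part. This toy sketch assumes each part has already been described by a set of attribute tags (a hypothetical upstream step). It uses a hand-written combination rule purely for illustration; a production system would learn such combinations rather than enumerate them. Every part passes on its own, but the union of their tags matches the rule.

```python
# Hypothetical combination rule: tags that are acceptable individually but
# form a policy violation when they all appear on the same avatar.
COMBINATION_RULES = {
    "extremist_uniform": frozenset({"red_armband", "cross_like_symbol", "raised_arm_pose"}),
}

def assess_avatar(parts: dict[str, set[str]], part_is_violating, rules=COMBINATION_RULES):
    """Return (parts flagged on their own, combination rules matched by the whole avatar)."""
    part_hits = sorted(name for name, tags in parts.items() if part_is_violating(tags))
    all_tags = set().union(*parts.values())  # everything visible on the assembled avatar
    combo_hits = sorted(rule for rule, needed in rules.items() if needed <= all_tags)
    return part_hits, combo_hits
```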

This is where our in-house models differ from off-the-shelf CV models. Those are generally trained on real-world objects. They can recognize a car or a dog but not the component parts of those things. Our models have been trained and optimized to assess items down to their smallest component parts.

Collaborating with Partners

We use all the tools available to us to keep everyone on Roblox safe, and we feel equally strongly about sharing what we learn beyond Roblox. In fact, we are sharing our first open source model, a voice safety classifier, to help others improve their own voice safety systems. We also partner with third-party groups to share knowledge and best practices as the industry evolves. We build and maintain close relationships with a wide range of organizations, including parental advocacy groups, mental health organizations, government agencies, and law enforcement agencies. They give us valuable insight into the concerns that parents, policymakers, and other groups have about online safety. In return, we share our learnings and the technology we use to keep the platform safe and civil.

We have a track record of putting the safety of the youngest and most vulnerable people on our platform first. We have established programs, such as our Trusted Flagger Program, to help us scale our reach as we work to protect the people on our platform. We collaborate with policymakers on key child safety initiatives, legislation, and other efforts. For example, we were the first and one of the only companies to support the California Age-Appropriate Design Code Act, because we believe it is in the best interest of young people. When we believe something will help young people, we want to extend it to everyone. More recently, we signed a letter of support for California Bill SB 933, which updates state laws to expressly prohibit AI-generated child sexual abuse material.

Working Toward a Safer Future

This work is never finished. We are already working on the next generation of safety tools and features, even as we make it easier for anyone to create on Roblox. As we grow and provide new ways to create and share, we will continue to develop new, groundbreaking solutions to keep everyone safe and civil on Roblox and beyond.
