A new way to optimize the training of deep learning models, a rapidly evolving tool for powering artificial intelligence, could slash AI’s energy demands.

Developed at the University of Michigan, the open-source optimization framework studies deep learning models during training, pinpointing the best tradeoff between energy consumption and training speed.

“At extreme scales, training the GPT-3 model just once consumes 1,287 MWh, which is enough to supply an average U.S. household for 120 years,” said Mosharaf Chowdhury, an associate professor of electrical engineering and computer science.

With Zeus, the new energy optimization framework developed by Chowdhury and his team, figures like this could be reduced by up to 75% without any new hardware, and with only minor impacts on the time it takes to train a model. Zeus was presented at the 2023 USENIX Symposium on Networked Systems Design and Implementation (NSDI) in Boston.
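The numbers in Chowdhury’s quote and the 75% figure can be put side by side with a few lines of arithmetic. Applying the full 75% to the GPT-3 run is a hypothetical best case, not a result reported for that model: the article says only that figures like this could be reduced by up to 75%.

```python
# Figures quoted in the article.
GPT3_TRAINING_MWH = 1287   # energy for one GPT-3 training run
HOUSEHOLD_YEARS = 120      # years of supply for an average U.S. household
MAX_REDUCTION = 0.75       # Zeus's "up to 75%" upper bound

# Annual consumption implied for the household in the comparison.
household_mwh_per_year = GPT3_TRAINING_MWH / HOUSEHOLD_YEARS

# Hypothetical best case: energy left if the full 75% cut applied to this run.
best_case_mwh = GPT3_TRAINING_MWH * (1 - MAX_REDUCTION)

print(f"{household_mwh_per_year:.1f} MWh per household per year")  # → 10.7 MWh per household per year
print(f"{best_case_mwh:.2f} MWh after a 75% cut")                   # → 321.75 MWh after a 75% cut
```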

Mainstream uses for hefty deep learning models have exploded over the past three years, ranging from image-generation models and expressive chatbots to the recommender systems powering TikTok and Amazon. With cloud computing already out-emitting commercial aviation, the increased climate burden from artificial intelligence is a significant concern.

“Existing work primarily focuses on optimizing deep learning training for faster completion, often without considering the impact on energy efficiency,” said Jae-Won Chung, a doctoral student in computer science and engineering and co-first author of the study. “We discovered that the energy we’re pouring into GPUs is giving diminishing returns, which allows us to reduce energy consumption significantly, with relatively little slowdown.”

Deep learning is a family of techniques that use multilayered artificial neural networks to tackle a range of common machine learning tasks. These networks are also known as deep neural networks (DNNs). The models themselves are extremely complex, learning from some of the largest data sets ever used in machine learning. Because of this, they benefit greatly from the parallel processing capabilities of graphics processing units (GPUs), which burn through 70% of the power that goes into training one of these models.

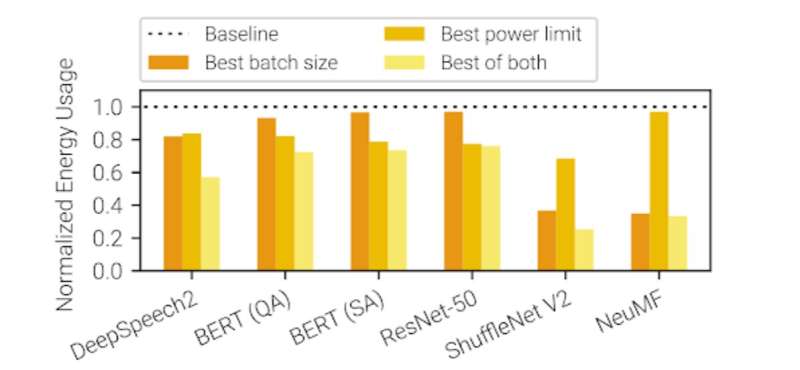

Zeus uses two software knobs to reduce energy consumption. One is the GPU power limit, which lowers a GPU’s power use while slowing down the model’s training until the setting is adjusted again. The other is the deep learning model’s batch size parameter, which controls how many samples from the training data the model works through before updating the way it represents the relationships it finds in the data. Higher batch sizes reduce training time, but at the cost of increased energy consumption.

Zeus is able to tune each of these settings in real time, seeking the optimal tradeoff point at which energy usage is minimized with as little impact on training time as possible. In their examples, the team was able to visually demonstrate this tradeoff point by showing every possible combination of these two parameters. While that level of thoroughness won’t happen in practice for a particular training job, Zeus takes advantage of the repetitive nature of machine learning to come very close.
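The exhaustive sweep over both knobs that the team visualized can be sketched as a small grid search. This is a toy illustration under stated assumptions, not Zeus’s code: the knob values, the `toy_profile` performance model, and the `eta`-weighted `cost` function that blends energy and time are all hypothetical, chosen only to reproduce the qualitative behavior the article describes (lower power limits save energy at some cost in speed, and larger batches finish sooner but use more energy).

```python
from itertools import product

# Hypothetical knob settings; real values depend on the GPU and the model.
BATCH_SIZES = [32, 64, 128, 256]
POWER_LIMITS_W = [150, 200, 250, 300]
MAX_POWER_W = max(POWER_LIMITS_W)


def toy_profile(batch_size: int, power_limit_w: int) -> tuple[float, float]:
    """Return (time_s, avg_power_w) for one training run under a made-up model.

    Speed grows sublinearly with the power limit (diminishing returns), and
    larger batches finish sooner but draw enough extra power that total
    energy rises.
    """
    speed = (power_limit_w / MAX_POWER_W) ** 0.3 * (batch_size / 32) ** 0.2
    time_s = 3600 / speed
    avg_power_w = power_limit_w * (batch_size / 256) ** 0.3
    return time_s, avg_power_w


def cost(energy_j: float, time_s: float, eta: float) -> float:
    """Blend energy and time into one score (an illustrative weighting).

    eta=1 cares only about energy, eta=0 only about time; time is scaled by
    MAX_POWER_W so that both terms are in joules.
    """
    return eta * energy_j + (1 - eta) * MAX_POWER_W * time_s


def exhaustive_search(eta: float) -> tuple[int, int]:
    """Try every (batch size, power limit) pair and keep the cheapest."""
    best = None
    for batch_size, power_limit_w in product(BATCH_SIZES, POWER_LIMITS_W):
        time_s, avg_power_w = toy_profile(batch_size, power_limit_w)
        energy_j = avg_power_w * time_s  # energy = average power x time
        score = cost(energy_j, time_s, eta)
        if best is None or score < best[0]:
            best = (score, batch_size, power_limit_w)
    return best[1], best[2]


print(exhaustive_search(0.5))  # → (256, 150)
```

With `eta=0.5`, this toy model picks the largest batch size together with the lowest power limit, so one knob leans toward speed while the other leans toward efficiency; that interaction is why sweeping the two knobs jointly is informative.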

“Fortunately, companies train the same DNN over and over again on newer data, as often as every hour. We can learn how the DNN behaves by observing across those recurrences,” said Jie You, a recent doctoral graduate in computer science and engineering and co-lead author of the study.
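Learning from recurring jobs can be illustrated with a multi-armed bandit that treats each batch size as an arm and each recurrence as one pull. The per-batch-size costs, the noise level, the `exploration` scale, and the class name `RecurrenceTuner` are hypothetical, and the lower-confidence-bound rule is a standard bandit heuristic used here for illustration; it is not claimed to be the algorithm Zeus uses.

```python
import math
import random

# Hypothetical mean cost (arbitrary units) of one recurring job per batch size.
# In practice these are unknown and only observed by running the job.
TRUE_MEAN_COST = {32: 1.30, 64: 1.10, 128: 1.00, 256: 1.20}


class RecurrenceTuner:
    """Pick a batch size for each recurrence with a lower-confidence-bound rule."""

    def __init__(self, arms, exploration=0.1):
        self.arms = list(arms)
        self.exploration = exploration  # bonus scale, sized to this toy's noise
        self.counts = {a: 0 for a in self.arms}
        self.sums = {a: 0.0 for a in self.arms}
        self.t = 0

    def choose(self):
        self.t += 1
        # Try every batch size once before exploiting what has been learned.
        for a in self.arms:
            if self.counts[a] == 0:
                return a

        # Optimism for cost minimization: subtract an exploration bonus that
        # shrinks as a batch size accumulates observations.
        def lcb(a):
            mean = self.sums[a] / self.counts[a]
            bonus = self.exploration * math.sqrt(math.log(self.t) / self.counts[a])
            return mean - bonus

        return min(self.arms, key=lcb)

    def observe(self, arm, cost):
        self.counts[arm] += 1
        self.sums[arm] += cost


def simulate(recurrences=500, seed=0):
    """Run the tuner over many recurrences and return how often each arm ran."""
    rng = random.Random(seed)
    tuner = RecurrenceTuner(TRUE_MEAN_COST)
    for _ in range(recurrences):
        batch_size = tuner.choose()
        # Each run's cost fluctuates around its (unknown) mean.
        tuner.observe(batch_size, rng.gauss(TRUE_MEAN_COST[batch_size], 0.05))
    return tuner.counts


counts = simulate()
print(max(counts, key=counts.get))  # → 128
```

After a handful of exploratory runs, the tuner spends most recurrences on the cheapest batch size, which is the kind of convergence that makes an exhaustive sweep unnecessary.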

Zeus is the first framework designed to plug into existing workflows for a variety of machine learning tasks and GPUs, reducing energy consumption without requiring any changes to a system’s hardware or datacenter infrastructure.

In addition, the team has developed complementary software that they layer on top of Zeus to reduce the carbon footprint further. This software, called Chase, favors speed when low-carbon energy is available and chooses efficiency at the expense of speed during peak times, which are more likely to require ramping up carbon-intensive energy generation such as coal. Chase took second place at last year’s CarbonHack hackathon and is to be presented May 4 at the International Conference on Learning Representations Workshop.
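Chase’s behavior, as described here, amounts to a policy that maps the current carbon intensity of the grid to a GPU power limit. The sketch below is an illustration built on assumptions rather than Chase’s implementation: the thresholds, the two power limits, the linear interpolation between them, and the name `choose_power_limit` are all hypothetical.

```python
# Hypothetical power limits (watts) for one GPU.
MAX_POWER_LIMIT_W = 300   # favors speed
EFFICIENT_LIMIT_W = 175   # favors energy efficiency

# Hypothetical carbon-intensity thresholds in gCO2/kWh.
LOW_CARBON = 200
HIGH_CARBON = 450


def choose_power_limit(carbon_intensity: float) -> int:
    """Trade speed for efficiency as grid carbon intensity rises.

    At or below LOW_CARBON, run at full speed; at or above HIGH_CARBON, run at
    the efficient limit; in between, interpolate linearly.
    """
    if carbon_intensity <= LOW_CARBON:
        return MAX_POWER_LIMIT_W
    if carbon_intensity >= HIGH_CARBON:
        return EFFICIENT_LIMIT_W
    frac = (carbon_intensity - LOW_CARBON) / (HIGH_CARBON - LOW_CARBON)
    return round(MAX_POWER_LIMIT_W - frac * (MAX_POWER_LIMIT_W - EFFICIENT_LIMIT_W))


# Example: a clean hour, a mixed hour, and a coal-heavy peak hour.
print([choose_power_limit(c) for c in (150, 300, 500)])  # → [300, 250, 175]
```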

“It’s not always possible to readily migrate DNN training jobs to other locations due to large dataset sizes or data regulations,” said Zhenning Yang, a master’s student in computer science and engineering. “Deferring training jobs to greener time frames may not be an option either, since DNNs must be trained with the most up-to-date data and quickly deployed to production to achieve the highest accuracy.

“Our aim is to design and implement solutions that do not conflict with these realistic constraints, while still reducing the carbon footprint of DNN training.”

Citation:

Optimization could cut the carbon footprint of AI training by up to 75% (2023, April 17)

retrieved 15 May 2023

from https://techxplore.com/news/2023-04-optimization-carbon-footprint-ai.html
