Nvidia

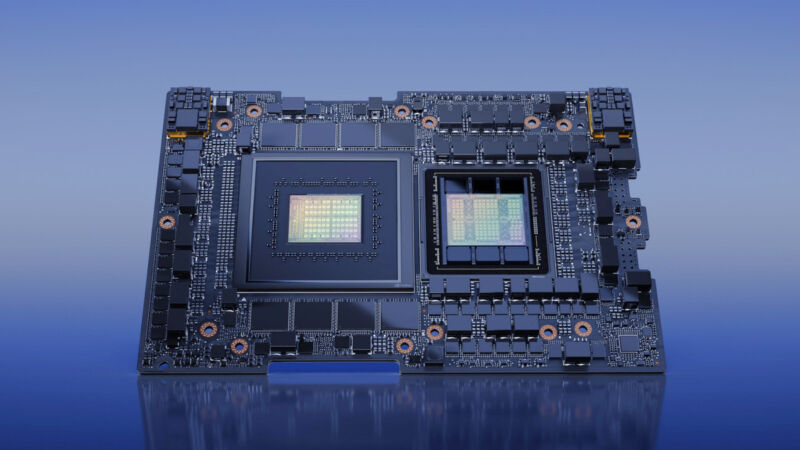

Early last week at COMPUTEX, Nvidia announced that its new GH200 Grace Hopper “Superchip,” a combination CPU and GPU specifically created for large-scale AI applications, has entered full production. It's a beast. It has 528 GPU tensor cores, supports up to 480GB of CPU RAM and 96GB of GPU RAM, and boasts a GPU memory bandwidth of up to 4TB per second.

We've previously covered the Nvidia H100 Hopper chip, which is currently Nvidia's most powerful data center GPU. It powers AI models like OpenAI's ChatGPT, and it marked a significant upgrade over 2020's A100 chip, which powered the first round of training runs for many of the news-making generative AI chatbots and image generators we're talking about today.

Faster GPUs roughly translate into more powerful generative AI models because they can run more matrix multiplications in parallel (and do it faster), which is necessary for today's artificial neural networks to function.
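Why matrix multiplication suits parallel hardware so well: every element of the output is an independent dot product, so the work can be split into pieces that don't wait on each other. The sketch below is only an illustration of that decomposition, not how Nvidia's hardware or libraries actually schedule the work; the function names are hypothetical, and Python threads show the split rather than a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def matmul_serial(a, b):
    """Plain triple-loop matrix multiply: C[i][j] = sum_k A[i][k] * B[k][j]."""
    n, m, p = len(a), len(b), len(b[0])
    return [[sum(a[i][k] * b[k][j] for k in range(m)) for j in range(p)]
            for i in range(n)]


def matmul_rows_parallel(a, b, workers=4):
    """Compute each output row as a separate task.

    No row depends on any other row, so the tasks can run in any order.
    Python threads only illustrate the split here; a GPU runs many such
    independent pieces at the same time in hardware.
    """
    m, p = len(b), len(b[0])

    def row(i):
        # One output row: p independent dot products.
        return [sum(a[i][k] * b[k][j] for k in range(m)) for j in range(p)]

    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves input order, so rows come back in position.
        return list(pool.map(row, range(len(a))))


if __name__ == "__main__":
    A = [[1, 2], [3, 4], [5, 6]]
    B = [[7, 8, 9], [10, 11, 12]]
    print(matmul_rows_parallel(A, B))
```

Splitting by row is just the simplest decomposition to show; real GPU kernels typically split the output into tiles, but the principle (independent output pieces) is the same.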

The GH200 takes that “Hopper” foundation and combines it with Nvidia's “Grace” CPU platform (both named after computer pioneer Grace Hopper), rolling it into one chip through Nvidia's NVLink chip-to-chip (C2C) interconnect technology. Nvidia expects the combination to dramatically accelerate AI and machine-learning applications in both training (creating a model) and inference (running it).

“Generative AI is rapidly transforming businesses, unlocking new opportunities and accelerating discovery in healthcare, finance, business services and many more industries,” said Ian Buck, vice president of accelerated computing at Nvidia, in a press release. “With Grace Hopper Superchips in full production, manufacturers worldwide will soon provide the accelerated infrastructure enterprises need to build and deploy generative AI applications that leverage their unique proprietary data.”

According to the company, key features of the GH200 include a new 900GB/s coherent (shared) memory interface, which is seven times faster than PCIe Gen5. The GH200 also offers 30 times higher aggregate system memory bandwidth to the GPU compared to the Nvidia DGX A100. Additionally, the GH200 can run all Nvidia software platforms, including the Nvidia HPC SDK, Nvidia AI, and Nvidia Omniverse.
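Where does “seven times faster than PCIe Gen5” come from? The arithmetic below is a back-of-the-envelope check, assuming (the article doesn't say) that the comparison is against a PCIe Gen5 x16 link with traffic counted in both directions. PCIe Gen5 runs at 32 GT/s per lane with 128b/130b encoding; the variable names are hypothetical.

```python
# Assumption: comparison is against a PCIe Gen5 x16 link, both directions counted.
PCIE5_TRANSFERS_PER_LANE = 32e9   # 32 GT/s per lane (PCIe 5.0)
ENCODING_EFFICIENCY = 128 / 130   # 128b/130b line encoding overhead
LANES = 16
DIRECTIONS = 2

NVLINK_C2C_GB_PER_S = 900.0       # figure from the article


def pcie5_x16_gb_per_s():
    """Usable PCIe Gen5 x16 bandwidth in GB/s, summed over both directions."""
    bits_per_s = (PCIE5_TRANSFERS_PER_LANE * ENCODING_EFFICIENCY
                  * LANES * DIRECTIONS)
    return bits_per_s / 8 / 1e9   # bits -> bytes -> GB


ratio = NVLINK_C2C_GB_PER_S / pcie5_x16_gb_per_s()

if __name__ == "__main__":
    print(f"PCIe Gen5 x16: {pcie5_x16_gb_per_s():.1f} GB/s")
    print(f"NVLink-C2C advantage: {ratio:.2f}x")
```

Under these assumptions the link works out to roughly 126GB/s, which puts 900GB/s at a little over seven times faster, consistent with Nvidia's claim.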

Notably, Nvidia also announced that it will be building this combo CPU/GPU chip into a new supercomputer called the DGX GH200, which can use the combined power of 256 GH200 chips to perform as a single GPU, providing 1 exaflop of performance and 144 terabytes of shared memory, nearly 500 times more memory than the previous-generation Nvidia DGX A100.
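The 144-terabyte figure follows directly from the per-chip numbers quoted earlier: 480GB of CPU RAM plus 96GB of GPU RAM is 576GB per GH200, times 256 chips. The sketch below checks that, assuming 1TB is counted as 1,024GB here. The DGX A100 baseline of 320GB (eight 40GB A100 GPUs in the original 2020 system) is an assumption not stated in the article.

```python
# Per-chip figures from the article.
CPU_RAM_GB = 480
GPU_RAM_GB = 96
CHIPS = 256

# Assumption: original 2020 DGX A100 = 8 x 40GB A100 GPUs = 320GB of GPU memory.
DGX_A100_MEM_GB = 8 * 40

per_chip_gb = CPU_RAM_GB + GPU_RAM_GB       # memory per GH200 Superchip
total_gb = CHIPS * per_chip_gb              # memory across the DGX GH200
total_tb = total_gb / 1024                  # assumes 1TB = 1,024GB
vs_dgx_a100 = total_gb / DGX_A100_MEM_GB    # multiple over the older system

if __name__ == "__main__":
    print(f"{per_chip_gb} GB per chip, {total_gb:,} GB total = {total_tb:.0f} TB")
    print(f"about {vs_dgx_a100:.0f}x the DGX A100's memory")
```

With these inputs the total comes out to exactly 144TB, and the ratio lands in the mid-400s, which matches “nearly 500 times.”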

The DGX GH200 will be capable of training giant next-generation AI models (GPT-6, anyone?) for generative language applications, recommender systems, and data analytics. Nvidia did not announce pricing for the GH200, but according to AnandTech, a single DGX GH200 computer is “easily going to cost somewhere in the low 8 digits.”

Overall, it's reasonable to say that thanks to continued hardware advancements from vendors like Nvidia and Cerebras, high-end cloud AI models will likely continue to become more capable over time, processing more data and doing it much faster than before. Let's just hope they don't argue with tech journalists.

Nvidia

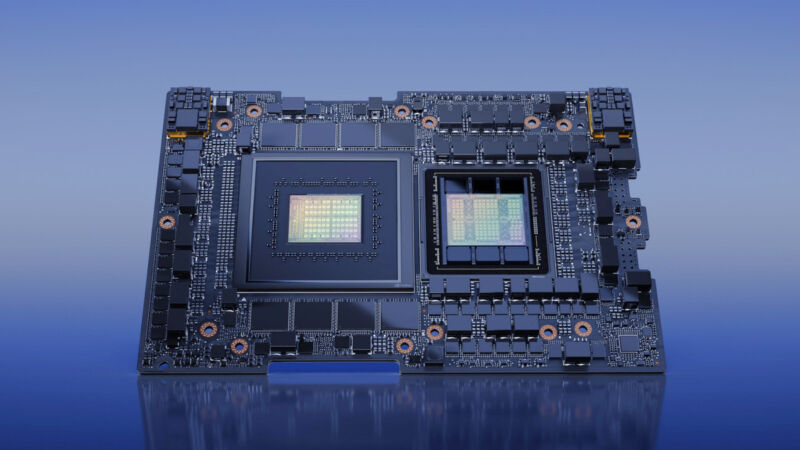

Early final week at COMPUTEX, Nvidia introduced that its new GH200 Grace Hopper “Superchip”—a mixture CPU and GPU particularly created for large-scale AI functions—has entered full manufacturing. It is a beast. It has 528 GPU tensor cores, helps as much as 480GB of CPU RAM and 96GB of GPU RAM, and boasts a GPU reminiscence bandwidth of as much as 4TB per second.

We have beforehand lined the Nvidia H100 Hopper chip, which is at the moment Nvidia’s strongest knowledge middle GPU. It powers AI fashions like OpenAI’s ChatGPT, and it marked a important improve over 2020’s A100 chip, which powered the primary spherical of coaching runs for most of the news-making generative AI chatbots and picture turbines we’re speaking about as we speak.

Quicker GPUs roughly translate into extra highly effective generative AI fashions as a result of they will run extra matrix multiplications in parallel (and do it quicker), which is critical for as we speak’s synthetic neural networks to perform.

The GH200 takes that “Hopper” basis and combines it with Nvidia’s “Grace” CPU platform (each named after laptop pioneer Grace Hopper), rolling it into one chip via Nvidia’s NVLink chip-to-chip (C2C) interconnect expertise. Nvidia expects the mixture to dramatically speed up AI and machine-learning functions in each coaching (making a mannequin) and inference (operating it).

“Generative AI is quickly reworking companies, unlocking new alternatives and accelerating discovery in healthcare, finance, enterprise providers and plenty of extra industries,” stated Ian Buck, vp of accelerated computing at Nvidia, in a press launch. “With Grace Hopper Superchips in full manufacturing, producers worldwide will quickly present the accelerated infrastructure enterprises wanted to construct and deploy generative AI functions that leverage their distinctive proprietary knowledge.”

In line with the corporate, key options of the GH200 embody a brand new 900GB/s coherent (shared) reminiscence interface, which is seven instances quicker than PCIe Gen5. The GH200 additionally affords 30 instances larger combination system reminiscence bandwidth to the GPU in comparison with the aforementioned Nvidia DGX A100. Moreover, the GH200 can run all Nvidia software program platforms, together with the Nvidia HPC SDK, Nvidia AI, and Nvidia Omniverse.

Notably, Nvidia additionally introduced that it is going to be constructing this combo CPU/GPU chip into a brand new supercomputer known as the DGX GH200, which might make the most of the mixed energy of 256 GH200 chips to carry out as a single GPU, offering 1 exaflop of efficiency and 144 terabytes of shared reminiscence, almost 500 instances extra reminiscence than the previous-generation Nvidia DGX A100.

The DGX GH200 can be able to coaching large next-generation AI fashions (GPT-6, anybody?) for generative language functions, recommender programs, and knowledge analytics. Nvidia didn’t announce pricing for the GH200, however based on Anandtech, a single DGX GH200 laptop is “simply going to value someplace within the low 8 digits.”

General, it is affordable to say that due to continued {hardware} developments from distributors like Nvidia and Cerebras, high-end cloud AI fashions will possible proceed to turn into extra succesful over time, processing extra knowledge and doing it a lot quicker than earlier than. Let’s simply hope they do not argue with tech journalists.