On Sunday, Nvidia CEO Jensen Huang reached beyond Blackwell and revealed the company’s next-generation AI-accelerating GPU platform during his keynote at Computex 2024 in Taiwan. Huang also detailed plans for an annual tick-tock-style upgrade cycle of its AI acceleration platforms, mentioning an upcoming Blackwell Ultra chip slated for 2025 and a subsequent platform called “Rubin” set for 2026.

Nvidia’s data center GPUs currently power a large majority of cloud-based AI models, such as ChatGPT, in both development (training) and deployment (inference) phases, and investors are keeping a close watch on the company, with expectations to keep that run going.

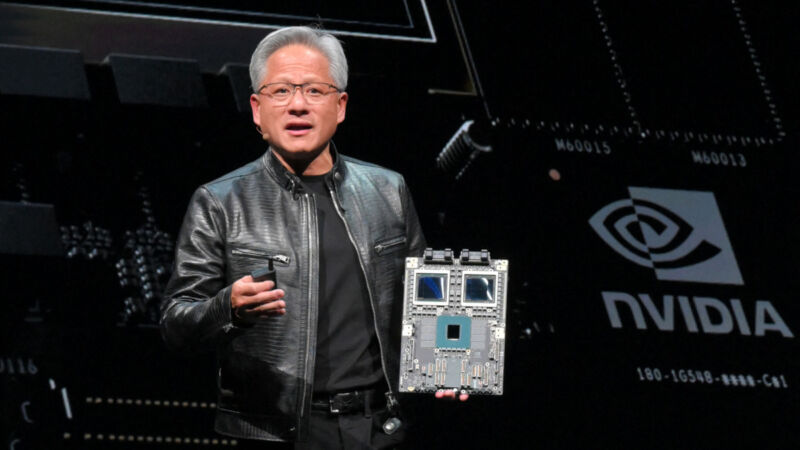

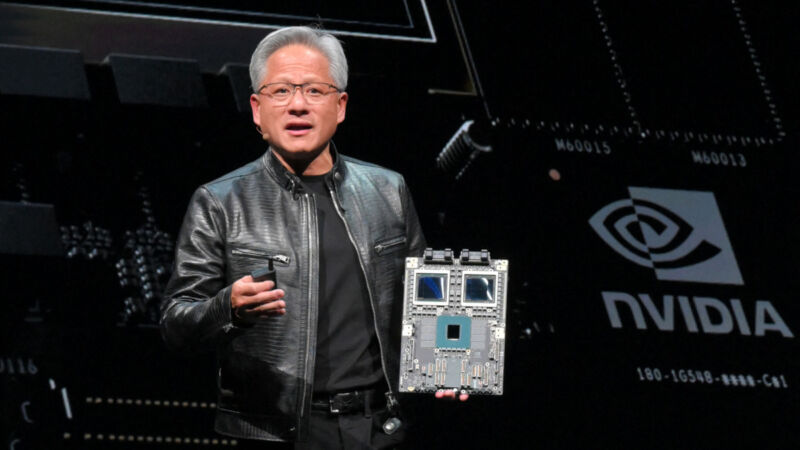

During the keynote, Huang seemed somewhat hesitant to make the Rubin announcement, perhaps wary of invoking the so-called Osborne effect, whereby a company’s premature announcement of the next iteration of a tech product eats into the current iteration’s sales. “This is the very first time that this next click has been made,” Huang said, holding up his presentation remote just before the Rubin announcement. “And I’m not sure yet whether I’m going to regret this or not.”

Nvidia Keynote at Computex 2023.

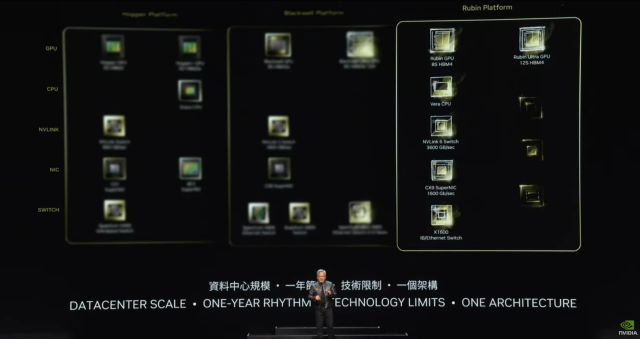

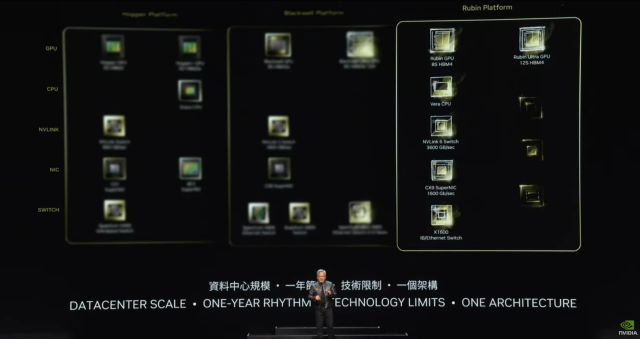

The Rubin AI platform, expected in 2026, will use HBM4 (a new form of high-bandwidth memory) and NVLink 6 Switch, operating at 3,600GBps. Following that launch, Nvidia will release a tick-tock iteration called “Rubin Ultra.” While Huang did not provide extensive specifications for the upcoming products, he promised cost and energy savings related to the new chipsets.

During the keynote, Huang also introduced a new ARM-based CPU called “Vera,” which will be featured on a new accelerator board called “Vera Rubin,” alongside one of the Rubin GPUs.

Much like Nvidia’s Grace Hopper architecture, which combines a “Grace” CPU and a “Hopper” GPU to pay tribute to the pioneering computer scientist of the same name, Vera Rubin refers to Vera Florence Cooper Rubin (1928–2016), an American astronomer who made discoveries in the field of deep space astronomy. She is best known for her pioneering work on galaxy rotation rates, which provided strong evidence for the existence of dark matter.

A calculated risk

Nvidia’s reveal of Rubin is not a surprise in the sense that most big tech companies are continuously working on follow-up products well in advance of release, but it’s notable because it comes just three months after the company revealed Blackwell, which is barely out of the gate and not yet widely shipping.

At the moment, the company seems to be comfortable leapfrogging itself with new announcements and catching up later; Nvidia just announced that its GH200 Grace Hopper “Superchip,” unveiled one year ago at Computex 2023, is now in full production.

With Nvidia stock rising and the company possessing an estimated 70–95 percent of the data center GPU market share, the Rubin reveal is a calculated risk that seems to come from a place of confidence. That confidence could turn out to be misplaced if a so-called “AI bubble” pops or if Nvidia misjudges the capabilities of its competitors. The announcement may also stem from pressure to continue Nvidia’s astronomical growth in market cap with nonstop promises of improving technology.

Accordingly, Huang has been eager to showcase the company’s plans to continue pushing silicon fabrication tech to its limits and widely broadcast that Nvidia plans to keep releasing new AI chips at a steady cadence.

“Our company has a one-year rhythm. Our basic philosophy is very simple: build the entire data center scale, disaggregate and sell to you parts on a one-year rhythm, and we push everything to technology limits,” Huang said during Sunday’s Computex keynote.

Despite Nvidia’s recent market performance, the company’s run may not continue indefinitely. With ample money pouring into the data center AI space, Nvidia isn’t alone in developing accelerator chips. Competitors like AMD (with the Instinct series) and Intel (with Gaudi 3) also want to win a slice of the data center GPU market away from Nvidia’s current command of the AI-accelerator space. And OpenAI’s Sam Altman is trying to encourage diversified production of GPU hardware that will power the company’s next generation of AI models in the years ahead.

