Epoch AI allowed Fields Medal winners Terence Tao and Timothy Gowers to review portions of the benchmark. “These are extremely challenging,” Tao said in feedback provided to Epoch. “I think that in the near term basically the only way to solve them, short of having a real domain expert in the area, is by a combination of a semi-expert like a graduate student in a related field, maybe paired with some combination of a modern AI and lots of other algebra packages.”

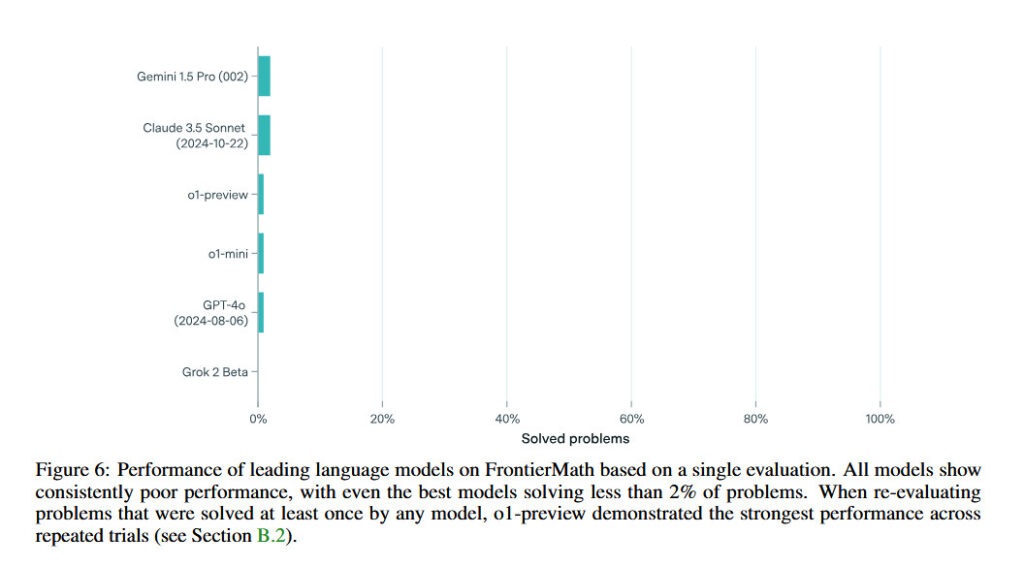

A chart showing AI models’ limited success on the FrontierMath problems, taken from Epoch AI’s research paper.

Credit: Epoch AI

To aid in the verification of correct answers during testing, the FrontierMath problems must have answers that can be automatically checked through computation, either as exact integers or mathematical objects. The designers made problems “guessproof” by requiring large numerical answers or complex mathematical solutions, with less than a 1 percent chance of correct random guesses.
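To make the idea of automatic checking concrete, here is a minimal Python sketch. It is not Epoch AI's actual grading code, which the article does not describe; the function names and the reference answer are hypothetical and chosen only for illustration. It shows an exact-match check on an integer answer and a rough test of the "less than 1 percent" guessing threshold.

```python
from fractions import Fraction


def check_answer(submitted, expected: int) -> bool:
    """Exact-match check on an integer answer: no tolerance, no partial credit."""
    # Reject non-integers (and booleans, which Python treats as ints).
    if not isinstance(submitted, int) or isinstance(submitted, bool):
        return False
    return submitted == expected


def random_guess_probability(num_plausible_answers: int) -> Fraction:
    """Chance that one uniform random guess hits the single correct answer."""
    return Fraction(1, num_plausible_answers)


def is_guessproof(num_plausible_answers: int) -> bool:
    """True if a random guess succeeds less than 1 percent of the time."""
    return random_guess_probability(num_plausible_answers) < Fraction(1, 100)


# Hypothetical reference answer, not taken from the benchmark.
expected = 2**61 - 1

print(check_answer(2**61 - 1, expected))  # exact match is accepted
print(check_answer(2**61, expected))      # off by one is rejected
print(is_guessproof(2))                   # a yes/no question is easy to guess
print(is_guessproof(10**6))               # a large answer space is not
```

A large integer answer works well here because the checker needs no judgment: the submission either equals the reference value or it does not.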

Mathematician Evan Chen, writing on his blog, explained how he thinks FrontierMath differs from traditional math competitions like the International Mathematical Olympiad (IMO). Problems in that competition typically require creative insight while avoiding complex implementation and specialized knowledge, he says. But for FrontierMath, “they keep the first requirement, but outright invert the second and third requirement,” Chen wrote.

While IMO problems avoid specialized knowledge and complex calculations, FrontierMath embraces them. “Because an AI system has vastly greater computational power, it's actually possible to design problems with easily verifiable solutions using the same idea that IOI or Project Euler does; basically, ‘write a proof’ is replaced by ‘implement an algorithm in code,'” Chen explained.

The organization plans regular evaluations of AI models against the benchmark while expanding its problem set. It says it will release additional sample problems in the coming months to help the research community test its systems.

Epoch AI allowed Fields Medal winners Terence Tao and Timothy Gowers to overview parts of the benchmark. “These are extraordinarily difficult,” Tao stated in suggestions supplied to Epoch. “I feel that within the close to time period principally the one strategy to resolve them, wanting having an actual area knowledgeable within the space, is by a mixture of a semi-expert like a graduate pupil in a associated subject, possibly paired with some mixture of a contemporary AI and plenty of different algebra packages.”

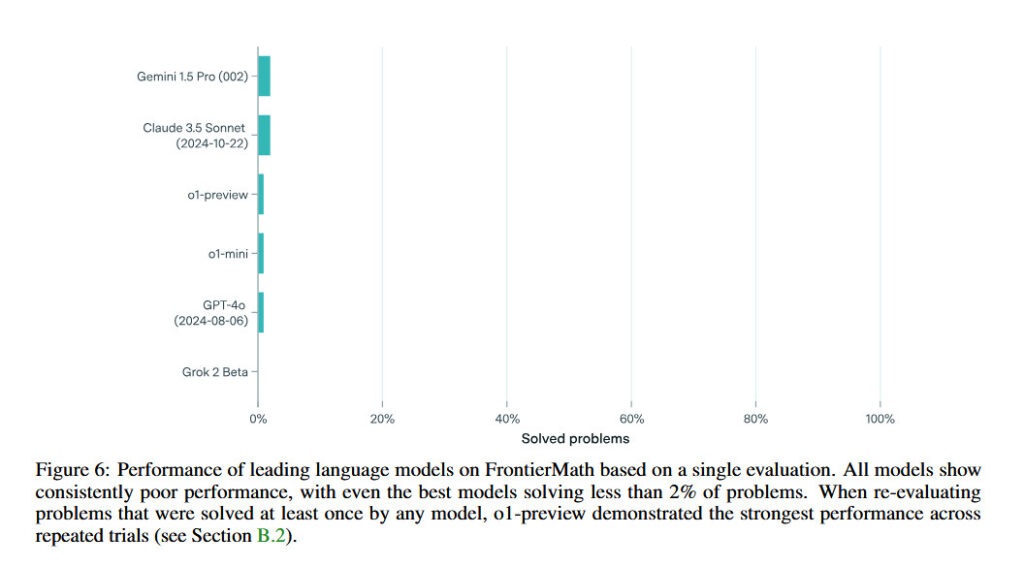

A chart displaying AI fashions’ restricted success on the FrontierMath issues, taken from Epoch AI’s analysis paper.

Credit score:

Epoch AI

To assist within the verification of right solutions throughout testing, the FrontierMath issues should have solutions that may be mechanically checked by computation, both as precise integers or mathematical objects. The designers made issues “guessproof” by requiring massive numerical solutions or complicated mathematical options, with lower than a 1 p.c probability of right random guesses.

Mathematician Evan Chen, writing on his weblog, defined how he thinks that FrontierMath differs from conventional math competitions just like the Worldwide Mathematical Olympiad (IMO). Issues in that competitors sometimes require inventive perception whereas avoiding complicated implementation and specialised data, he says. However for FrontierMath, “they preserve the primary requirement, however outright invert the second and third requirement,” Chen wrote.

Whereas IMO issues keep away from specialised data and complicated calculations, FrontierMath embraces them. “As a result of an AI system has vastly higher computational energy, it is truly doable to design issues with simply verifiable options utilizing the identical concept that IOI or Undertaking Euler does—principally, ‘write a proof’ is changed by ‘implement an algorithm in code,'” Chen defined.

The group plans common evaluations of AI fashions towards the benchmark whereas increasing its drawback set. They are saying they are going to launch further pattern issues within the coming months to assist the analysis group check their programs.