For all the benefits of the best AI image generators, many people are worried about a torrent of misinformation and fakery. Meta, it seems, did not get the memo: in a Threads post, it has just recommended that those of us who missed the recent return of the Northern Lights should simply fake photos using Meta AI instead.

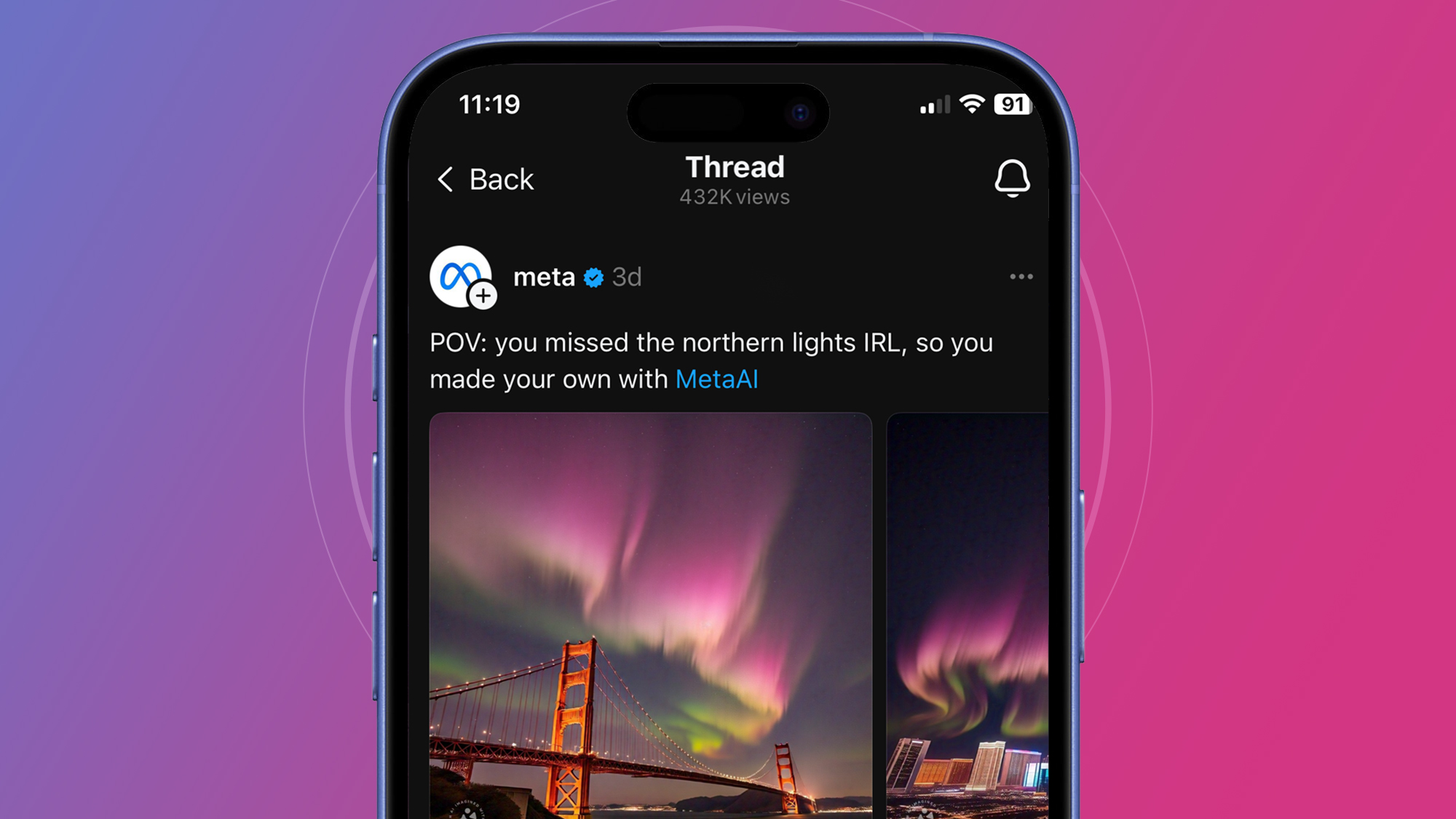

The Threads post, spotted by The Verge, is titled “POV: you missed the northern lights IRL, so you made your own with Meta AI” and includes AI-generated images of the phenomenon over landmarks like the Golden Gate Bridge and Las Vegas.

Meta has received a justifiable roasting for its tone-deaf post in the Threads comments. “Please sell Instagram to someone who cares about photography,” noted one response, while NASA software engineer Kevin M. Gill remarked that fake images like Meta’s “make our cultural intelligence worse”.

It’s possible that Meta’s Threads post was simply an errant social media post rather than a reflection of the company’s broader view on how Meta AI’s image generator should be used. And it could be argued that there’s little wrong with generating images like Meta’s examples, as long as creators are clear about their origin.

The problem is that the tone of Meta’s post suggests people should use AI to mislead their followers into thinking that they’d photographed a real event.

For many, that’s crossing a line that could have more serious repercussions for news events that are more consequential than the Northern Lights.

But where is the line?

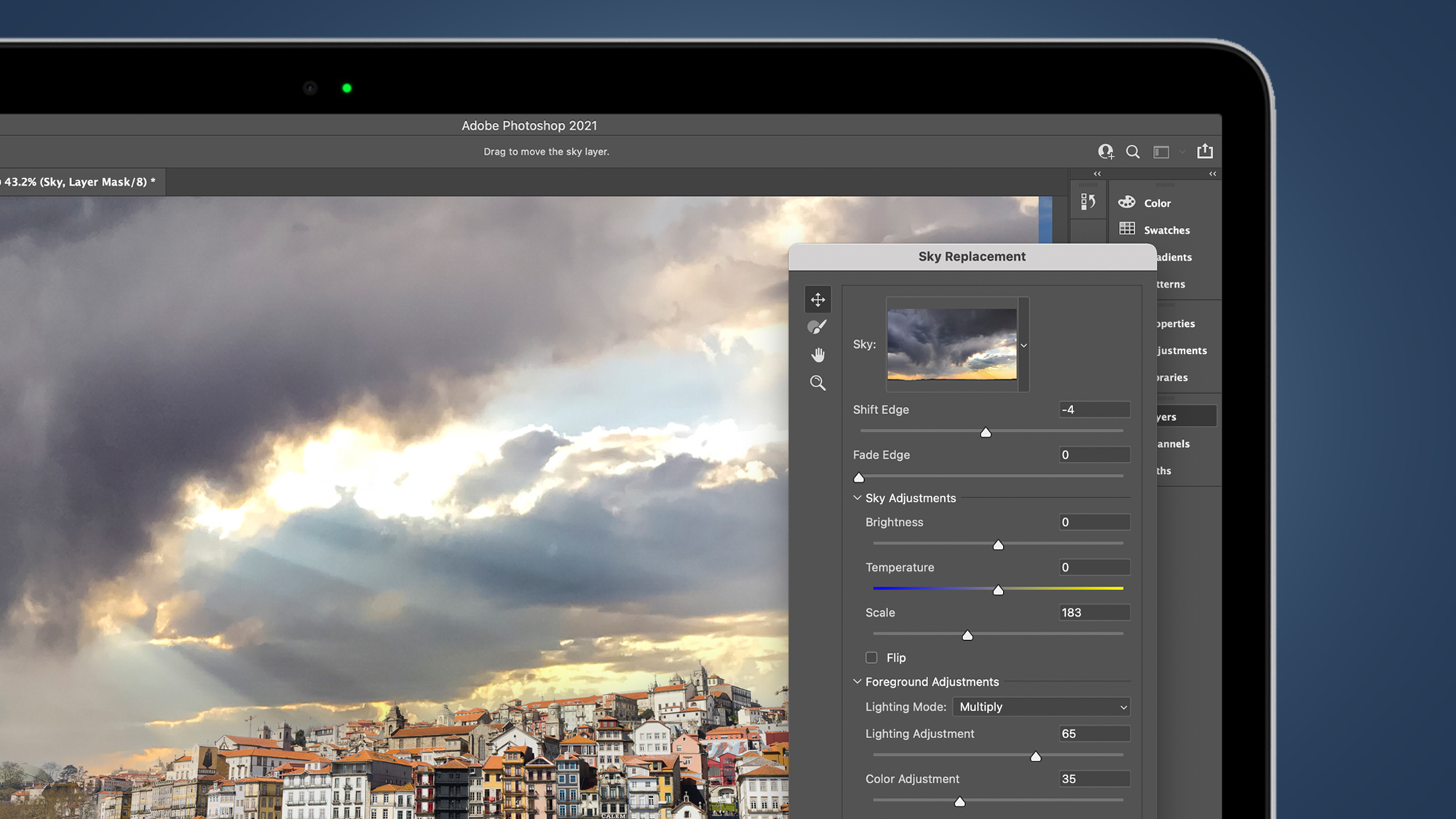

Is posting AI-generated photos of the Northern Lights any worse than using Photoshop’s Sky Replacement tool (above)? Or editing your photos with Adobe’s Generative Fill? These are the kinds of questions that generative AI tools are raising every day, and this Meta misstep is an example of how thin the line can be.

Many would argue that it ultimately comes down to transparency. The issue with Meta’s post (which is still live) isn’t the AI-generated Northern Lights images, but the suggestion that you could use them to simply fake witnessing a real news event.

Transparency and honesty around an image’s origins are as much the responsibility of the tech companies as of their users. That’s why Google Photos is, according to Android Authority, testing new metadata that’ll tell you whether or not an image is AI-generated.

Adobe has also made similar efforts with its Content Authenticity Initiative (CAI), which has been trying to fight visual misinformation with its own metadata standard. Google recently announced that it will finally be using the CAI’s guidelines to label AI images in Google Search results. But the sluggishness in adopting a standard leaves us in limbo as AI image generators become ever more powerful.

Let’s hope the situation improves soon. In the meantime, it seems incumbent on social media users to be honest when posting fully AI-generated images, and certainly on tech giants not to encourage them to do the opposite.