On Tuesday, Google announced Lumiere, an AI video generator that it calls “a space-time diffusion model for realistic video generation” in the accompanying preprint paper. But let’s not kid ourselves: It does a great job at creating videos of cute animals in ridiculous scenarios, such as using roller skates, driving a car, or playing a piano. Sure, it can do more, but it is perhaps the most advanced text-to-animal AI video generator yet demonstrated.

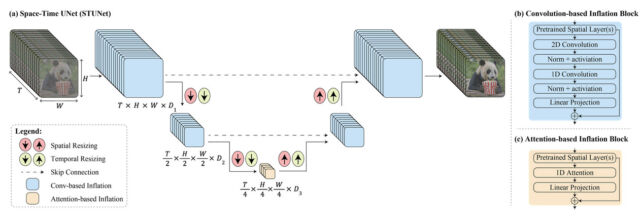

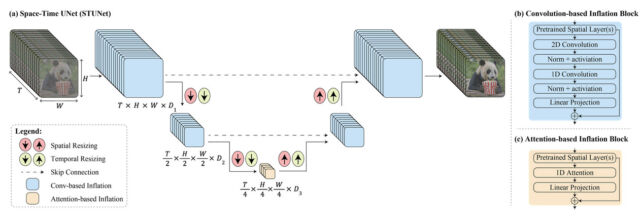

According to Google, Lumiere uses a unique architecture to generate a video’s entire temporal duration in one go. Or, as the company put it, “We introduce a Space-Time U-Net architecture that generates the entire temporal duration of the video at once, through a single pass in the model. This is in contrast to existing video models which synthesize distant keyframes followed by temporal super-resolution – an approach that inherently makes global temporal consistency difficult to achieve.”

In layperson terms, Google’s tech is designed to handle both the space (where things are in the video) and time (how things move and change throughout the video) aspects simultaneously. So, instead of generating a few widely spaced keyframes and then filling in the frames between them, it can create the entire video, from start to finish, in one smooth process.
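To make the difference concrete, here is a toy Python sketch. It is bookkeeping only, not Google’s code: the first function lists which frames a keyframe-first pipeline would synthesize directly (assuming a hypothetical stride of 8 frames), and the second traces how a clip’s (frames, height, width) shape might shrink if each level of a space-time U-Net halved time and space together. The 80-frame, 128×128 starting size matches the training setup the researchers describe; the stride, the number of levels, and the uniform halving are illustrative assumptions.

```python
def keyframe_indices(num_frames: int, stride: int) -> list[int]:
    """Frames a keyframe-first pipeline would synthesize directly.

    Every other frame would later be filled in by a separate temporal
    super-resolution stage. The stride is a hypothetical choice.
    """
    return list(range(0, num_frames, stride))


def space_time_shapes(frames: int, height: int, width: int, levels: int) -> list[tuple[int, int, int]]:
    """Trace (T, H, W) through a toy encoder that halves all three axes per level.

    Downsampling time as well as space is the idea behind a space-time U-Net;
    the exact factors and depth here are illustrative assumptions.
    """
    shapes = [(frames, height, width)]
    for _ in range(levels):
        frames, height, width = frames // 2, height // 2, width // 2
        shapes.append((frames, height, width))
    return shapes


if __name__ == "__main__":
    # Cascaded approach: only 10 of the 80 frames come from the keyframe model.
    print(keyframe_indices(80, 8))
    # Single-pass approach: the whole 80-frame clip enters the network at once.
    for shape in space_time_shapes(80, 128, 128, levels=3):
        print(shape)
```

In the cascaded version, a separate stage has to invent the 70 frames between keyframes; in the single-pass version, every frame is part of the same computation, which is the property Google credits for better global temporal consistency.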

The official promotional video accompanying the paper “Lumiere: A Space-Time Diffusion Model for Video Generation,” released by Google.

Lumiere can also do plenty of party tricks, which are laid out quite well with examples on Google’s demo page. For example, it can perform text-to-video generation (turning a written prompt into a video), convert still images into videos, generate videos in specific styles using a reference image, apply consistent video editing using text-based prompts, create cinemagraphs by animating specific regions of an image, and perform video inpainting (for example, it can change the type of dress a person is wearing).
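Cinemagraphs and inpainting share a simple idea: change one region of the frame and leave the rest alone. The pure-Python sketch below illustrates only that compositing step on tiny grayscale frames stored as nested lists. The `composite` function and the data layout are hypothetical, and in Lumiere the content inside the region is synthesized by the diffusion model rather than supplied by hand as it is here.

```python
Frame = list[list[int]]  # a tiny grayscale frame: rows of pixel values
Mask = list[list[bool]]  # True where the image should move


def composite(still: Frame, animated: list[Frame], mask: Mask) -> list[Frame]:
    """Build one output frame per animated frame.

    Pixels inside the mask come from the animated frame; pixels outside it
    are copied from the still image, so that part never changes.
    """
    rows, cols = len(still), len(still[0])
    return [
        [[frame[r][c] if mask[r][c] else still[r][c] for c in range(cols)] for r in range(rows)]
        for frame in animated
    ]


if __name__ == "__main__":
    still = [[1, 1], [1, 1]]
    # Hand-made stand-ins for generated frames (hypothetical data).
    animated = [[[5, 5], [5, 5]], [[9, 9], [9, 9]]]
    mask = [[False, True], [False, True]]  # animate only the right column
    for frame in composite(still, animated, mask):
        print(frame)
```

Running it prints `[[1, 5], [1, 5]]` and then `[[1, 9], [1, 9]]`: the left column stays frozen while the masked right column changes from frame to frame.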

In the Lumiere research paper, the Google researchers state that the AI model outputs five-second-long, 1024×1024-pixel videos, which they describe as “low-resolution.” Despite those limitations, the researchers performed a user study and claim that Lumiere’s outputs were preferred over those of existing AI video synthesis models.

As for training data, Google doesn’t say where it got the videos it fed into Lumiere, writing, “We train our T2V [text to video] model on a dataset containing 30M videos along with their text caption. [sic] The videos are 80 frames long at 16 fps (5 seconds). The base model is trained at 128×128.”
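The figures in that quote are easy to sanity-check. The short Python snippet below recomputes the clip length from the frame count and frame rate, the factor by which the 128×128 base resolution must grow to reach the 1024×1024 output the researchers report, and the total number of training frames implied by 30 million 80-frame videos. It is plain arithmetic on the published numbers, assuming every clip is exactly 80 frames, and says nothing about how Google’s pipeline actually performs the upscaling.

```python
FRAMES_PER_CLIP = 80      # "The videos are 80 frames long"
FPS = 16                  # "at 16 fps"
BASE_SIDE = 128           # "The base model is trained at 128×128"
OUTPUT_SIDE = 1024        # reported output resolution, 1024×1024
NUM_VIDEOS = 30_000_000   # "a dataset containing 30M videos"

clip_seconds = FRAMES_PER_CLIP / FPS          # 5.0 seconds, matching the paper
upscale_per_side = OUTPUT_SIDE // BASE_SIDE   # 8x in each spatial dimension
pixel_ratio = upscale_per_side ** 2           # 64x as many pixels per frame
total_frames = NUM_VIDEOS * FRAMES_PER_CLIP   # 2.4 billion frames in total

print(clip_seconds, upscale_per_side, pixel_ratio, total_frames)
```

The 64× jump in pixel count per frame helps explain why the base model is trained at a much smaller size than the final output.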

AI-generated video is still in a primitive state, but it has been progressing in quality over the past two years. In October 2022, we covered Google’s first publicly unveiled video synthesis model, Imagen Video. It could generate short 1280×768 video clips from a written prompt at 24 frames per second, but the results weren’t always coherent. Before that, Meta debuted its AI video generator, Make-A-Video. In June of last year, Runway’s Gen2 video synthesis model enabled the creation of two-second video clips from text prompts, fueling the creation of surrealistic parody commercials. And in November, we covered Stable Video Diffusion, which can generate short clips from still images.

AI companies often demonstrate video generators with cute animals because generating coherent, non-deformed humans is currently difficult, especially since we, as humans (you are human, right?), are adept at noticing any flaws in human bodies or how they move. Just look at AI-generated Will Smith eating spaghetti.

Judging by Google’s examples (and not having used it ourselves), Lumiere appears to surpass these other AI video generation models. But since Google tends to keep its AI research models close to its chest, we’re not sure when, if ever, the public may have a chance to try it for themselves.

As always, whenever we see text-to-video synthesis models getting more capable, we can’t help but think of the future implications for our Internet-connected society, which is centered around sharing media artifacts, and for the general presumption that “realistic” video typically represents real objects in real situations captured by a camera. Future video synthesis tools more capable than Lumiere will make deceptive deepfakes trivially easy to create.

To that end, in the “Societal Impact” section of the Lumiere paper, the researchers write, “Our primary goal in this work is to enable novice users to generate visual content in an creative and flexible way. [sic] However, there is a risk of misuse for creating fake or harmful content with our technology, and we believe that it is crucial to develop and apply tools for detecting biases and malicious use cases in order to ensure a safe and fair use.”
