Elastic has announced that it will be donating its Universal Profiling agent to the OpenTelemetry project, setting the stage for profiling to become a fourth core telemetry signal in addition to logs, metrics, and tracing.

This follows OpenTelemetry’s announcement in March that it would be supporting profiling and was working toward having a stable spec and implementation sometime this year.

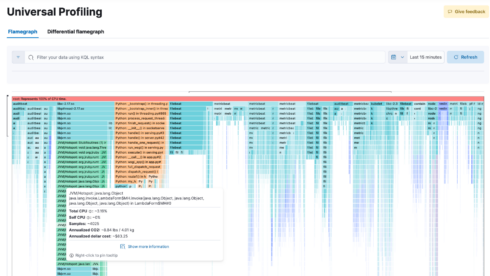

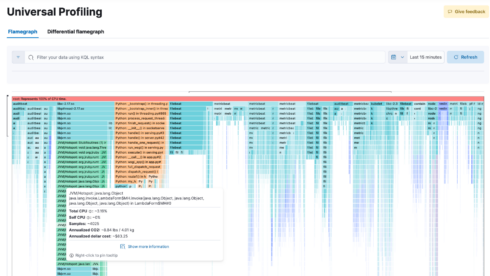

Elastic’s agent profiles every line of code running on a company’s machines, including application code, kernels, and third-party libraries. It is always running in the background and can collect data about an application over time.

It measures code efficiency across three categories: CPU utilization, CO2, and cloud cost. According to Elastic, this helps companies identify areas where waste can be reduced or eliminated so that they can optimize their systems.

Universal Profiling currently supports a number of runtimes and languages, including C/C++, Rust, Zig, Go, Java, Python, Ruby, PHP, Node.js, V8, Perl, and .NET.

“This contribution not only boosts the standardization of continuous profiling for observability but also accelerates the practical adoption of profiling as the fourth key signal in OTel. Customers get a vendor-agnostic way of collecting profiling data and enabling correlation with existing signals, like tracing, metrics, and logs, opening new potential for observability insights and a more efficient troubleshooting experience,” Elastic wrote in a blog post.

OpenTelemetry echoed those sentiments, saying: “This marks a significant milestone in establishing profiling as a core telemetry signal in OpenTelemetry. Elastic’s eBPF based profiling agent observes code across different programming languages and runtimes, third-party libraries, kernel operations, and system resources with low CPU and memory overhead in production. Both, SREs and developers can now benefit from these capabilities: quickly identifying performance bottlenecks, maximizing resource utilization, reducing carbon footprint, and optimizing cloud spend.”
