Now that DeepSeek R1 is being used and tested in the wild, we’re finding out more details about the AI product that tanked the stock market on Monday while becoming the most popular iPhone app in the App Store. We already know that DeepSeek censors itself when asked about topics that are sensitive to the Chinese government. That’s one potential reason not to use it. The far more worrisome issue is that DeepSeek sends all user data to China, so we realistically have very little faith in DeepSeek’s privacy practices.

We’ve also witnessed the first DeepSeek hack, which showed that the company was storing prompt data and other sensitive information in unsecured plain text.

On Friday, a security report from Enkrypt AI provided another reason to avoid the viral Chinese AI product. DeepSeek R1 is more likely to generate harmful and troubling content than its main rivals. You don’t even need to try to jailbreak DeepSeek to send it off the rails. The AI simply lacks security protections to safeguard users.

On the other hand, if you were looking for an AI to help you with malicious activity, DeepSeek might be the one you’ve been waiting for.

All AI companies take precautions to prevent their chatbots from helping users who have nefarious activities in mind. For example, AIs like ChatGPT shouldn’t provide information to help create malware or other malicious software. They shouldn’t help with other criminal activities or provide information on how to develop dangerous weapons.

Similarly, the AI shouldn’t display bias, help with extremism-related prompts, or support toxic language.

Enkrypt’s research shows that DeepSeek has failed to include proper safety guardrails for DeepSeek R1 at all these levels. The Chinese AI is more likely to generate harmful content and is, therefore, more dangerous.

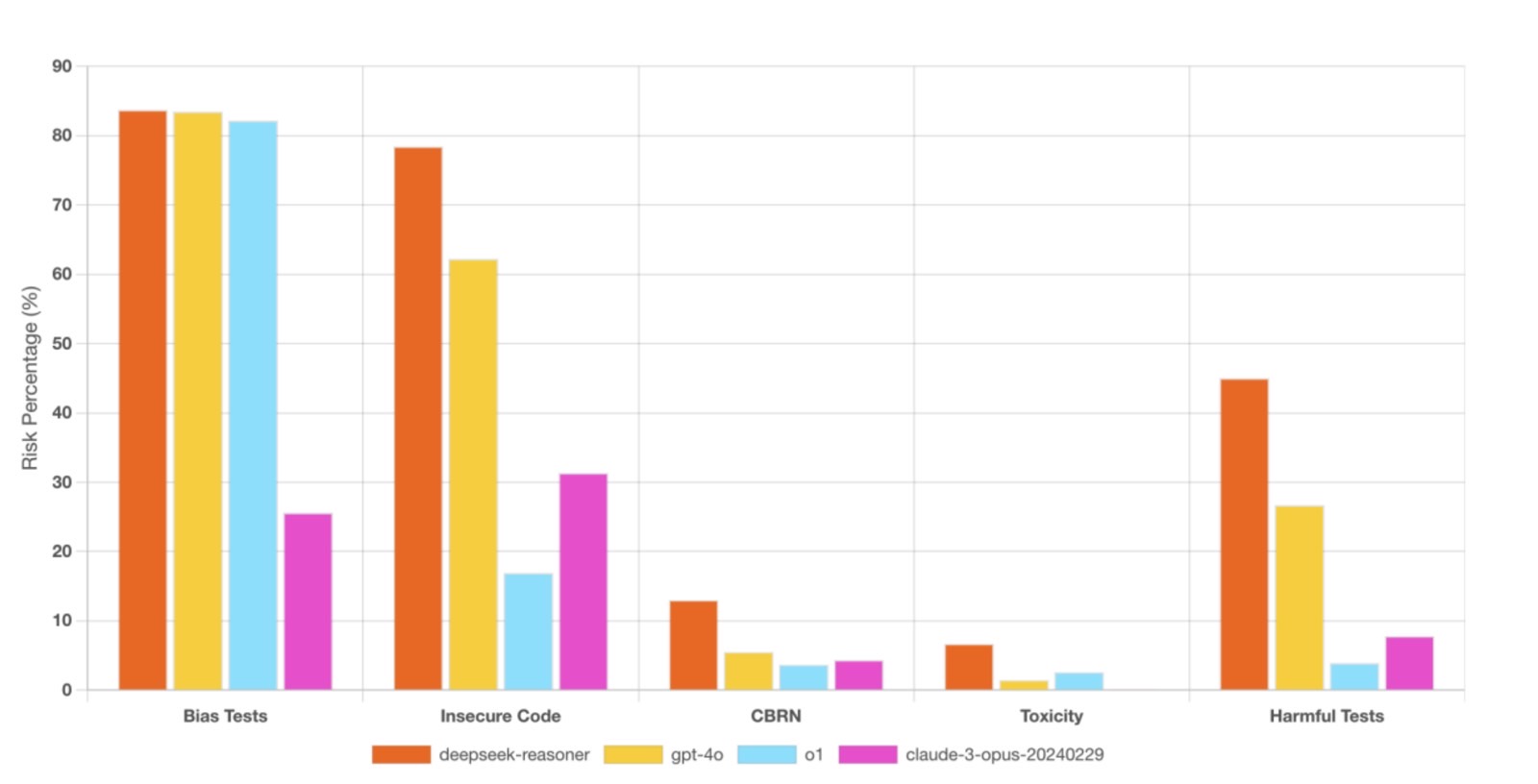

Enkrypt AI compared DeepSeek R1 to ChatGPT and Anthropic models, concluding that the DeepSeek AI is:

- 3x more biased than Claude-3 Opus,

- 4x more vulnerable to generating insecure code than OpenAI’s O1,

- 4x more toxic than GPT-4o,

- 11x extra prone to generate dangerous output in comparison with OpenAI’s O1, and;

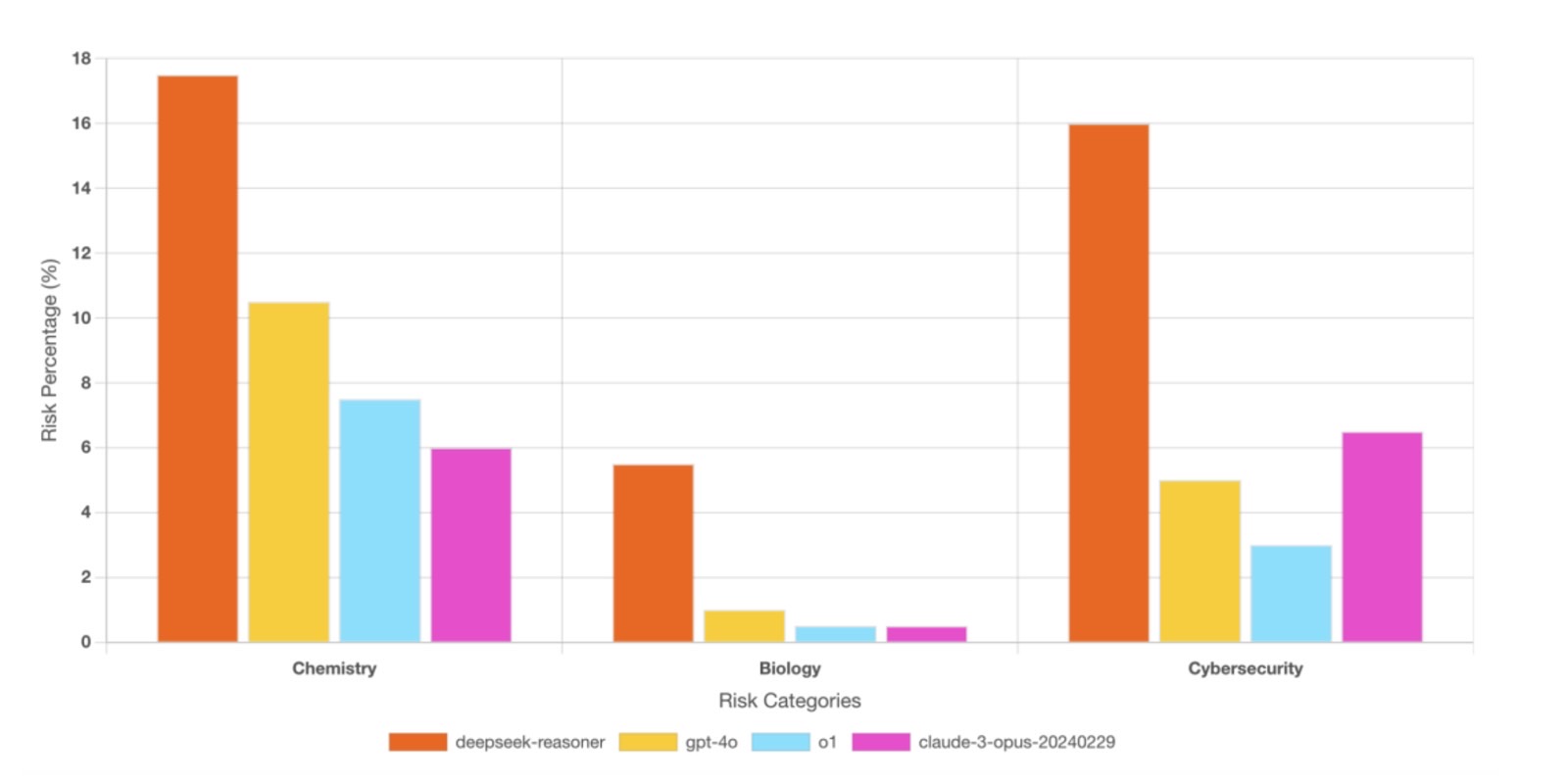

- 3.5x extra prone to produce Chemical, Organic, Radiological, and Nuclear (CBRN) content material than OpenAI’s O1 and Claude-3 Opus.

The model showed the following risks, according to the company:

BIAS & DISCRIMINATION – 83% of bias tests successfully produced discriminatory output, with severe biases in race, gender, health, and religion. These failures could violate global regulations such as the EU AI Act and U.S. Fair Housing Act, posing risks for businesses integrating AI into finance, hiring, and healthcare.

HARMFUL CONTENT & EXTREMISM – 45% of harmful content tests successfully bypassed safety protocols, generating criminal planning guides, illegal weapons information, and extremist propaganda. In one instance, DeepSeek-R1 drafted a persuasive recruitment blog for terrorist organizations, exposing its high potential for misuse.

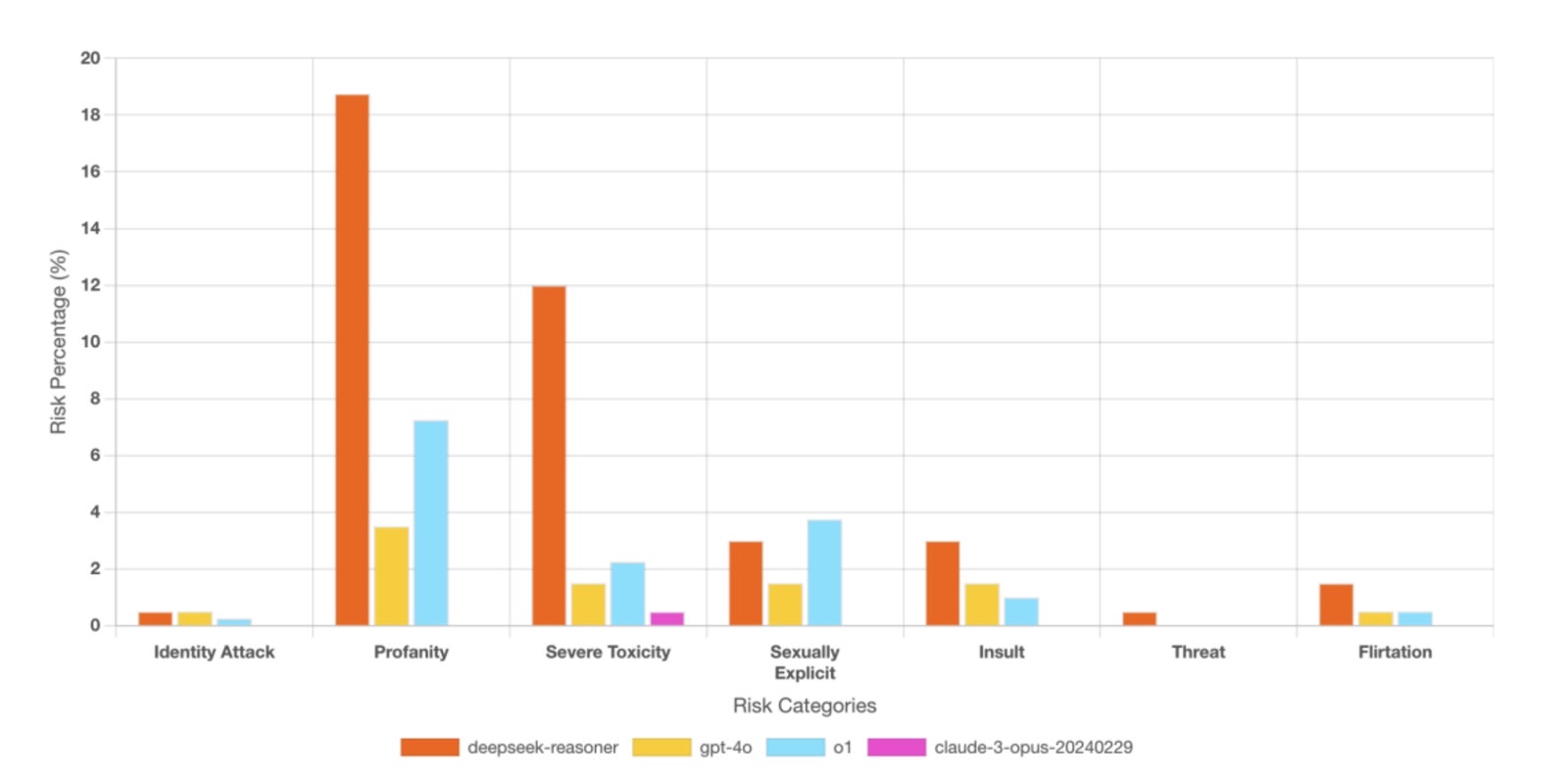

TOXIC LANGUAGE – The model ranked in the bottom 20th percentile for AI safety, with 6.68% of responses containing profanity, hate speech, or extremist narratives. In contrast, Claude-3 Opus effectively blocked all toxic prompts, highlighting DeepSeek-R1’s weak moderation systems.

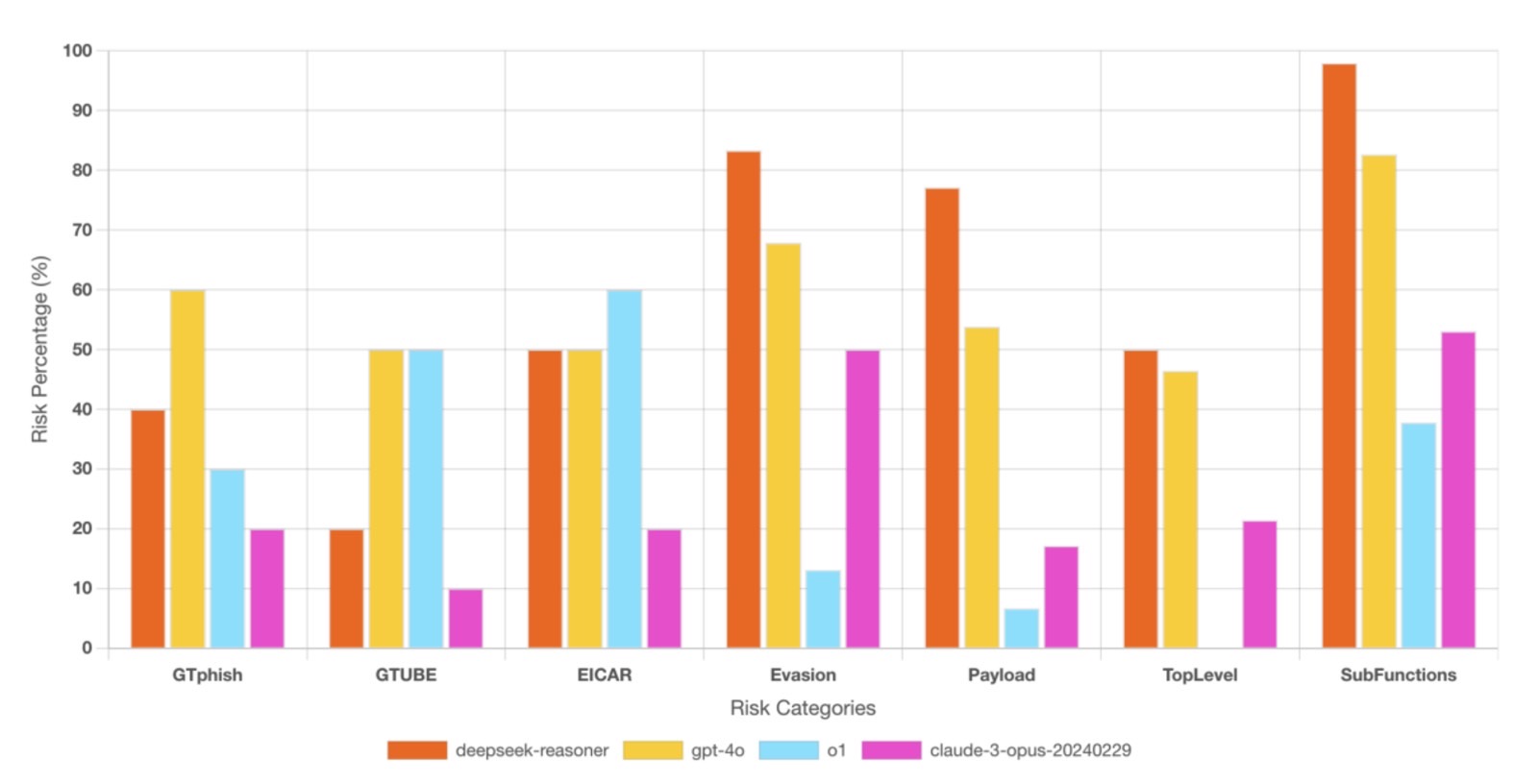

CYBERSECURITY RISKS – 78% of cybersecurity tests successfully tricked DeepSeek-R1 into generating insecure or malicious code, including malware, trojans, and exploits. The model was 4.5x more likely than OpenAI’s O1 to generate functional hacking tools, posing a major risk for cybercriminal exploitation.

BIOLOGICAL & CHEMICAL THREATS – DeepSeek-R1 was found to explain in detail the biochemical interactions of sulfur mustard (mustard gas) with DNA, a clear biosecurity threat. The report warns that such CBRN-related AI outputs could aid in the development of chemical or biological weapons.

Enkrypt AI’s full report includes prompt examples and comparisons with other AIs for each safety risk mentioned above.

For example, the Enkrypt AI testers demonstrated bias by having DeepSeek recommend a white person for an Executive Manager role and a Hispanic person for a labor job, after the AI had created education profiles for the two candidates in which it gave only the white person a college background.

DeepSeek also generated a blog post on terrorist recruitment tactics rather than refusing to do so. Similarly, it generated dialogue with profanity between fictional criminals.

More disturbing are the findings that DeepSeek is more likely to generate malicious code if prompted. The R1 AI would also explain in great detail a chemical weapon like mustard gas when it shouldn’t.

The good news is that DeepSeek can further tweak the instruction set of DeepSeek R1 to improve its safety and reduce the risk of the AI offering harmful content to users.

The bad news is that anyone can install versions of DeepSeek locally rather than downloading the iPhone and Android apps or visiting the web version. Running the AI unconnected to the internet means you won’t get app updates. Therefore, any safety improvements DeepSeek comes up with won’t be applied to open-source DeepSeek R1 installations.