Xu Hao uses chain of thought and generated knowledge prompting with ChatGPT when writing self-testing code

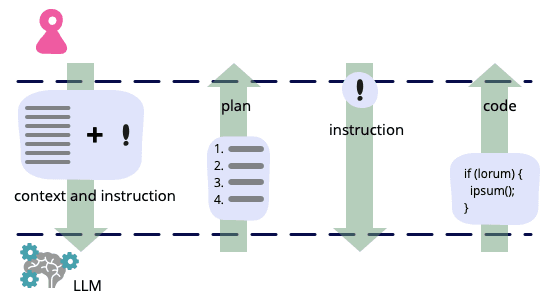

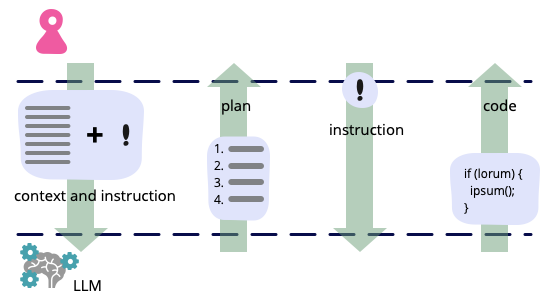

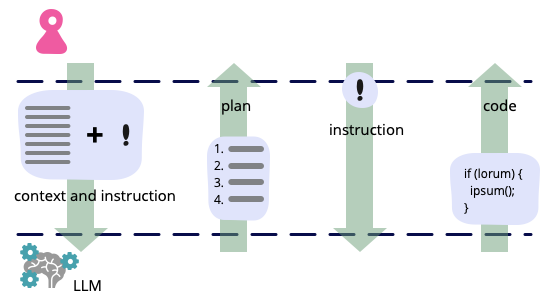

My account of an internal chat with Xu Hao, where he shows how he drives ChatGPT to produce useful self-tested code. His initial prompt primes the LLM with an implementation strategy (chain of thought prompting). His prompt also asks for an implementation plan rather than code (generated knowledge prompting). Once he has the plan he uses it to refine the implementation and generate useful sections of code.

Recently I watched a fascinating call on Zoom. Xu Hao, Thoughtworks's Head of Technology in China, explained his explorations in using ChatGPT to help build Self Testing Code. He went through the kind of interaction that works for him.

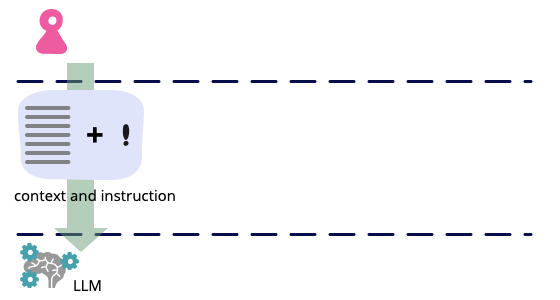

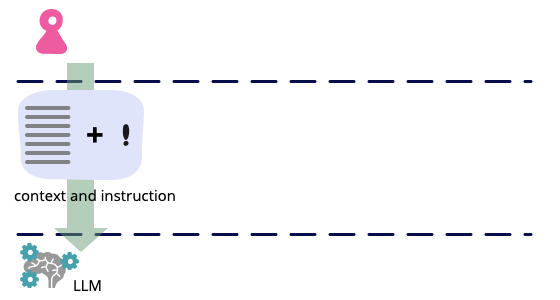

He starts with a prompt that sets the context for the application and how he wants the code to be structured.

The current system is an online whiteboard system. Tech stack: typescript, react, redux, konvajs and react-konva. And vitest, react testing library for model, view model and related hooks, cypress component tests for view.

All codes should be written in the tech stack mentioned above. Requirements should be implemented as react components in the MVVM architecture pattern.

There are 2 types of view model in the system.

1. Shared view model. View model that represents states shared among local and remote users.
2. Local view model. View model that represents states only applicable to local user

Here are the common implementation strategy:

1. Shared view model is implemented as Redux store slice. Tested in vitest.
2. Local view model is implemented as React component props or states(by useState hook), unless for global local view model, which is also implemented as Redux store slice. Tested in vitest.
3. Hooks are used as the major view helpers to retrieve data from shared view model. For most the case, it will use 'createSelector' and 'useSelector' for memorization. Tested in vitest and react testing library.
4. Don't dispatch action directly to change the states of shared view model, use an encapsulated view model interface instead. In the interface, each redux action is mapped to a method. Tested in vitest.
5. View is consist of konva shapes, and implemented as react component via react-konva. Tested in cypress component tests

Here are certain patterns should be followed when implement and test the component

1. When write test, use `describe` instead of `test`
2. Data-driven tests are preferred.
3. When test the view component, fake view model via the view model interface

Awareness Layer

Requirement:

Display other users' awareness info(cursor, name and online information) on the whiteboard.

AC1: Don't display local user

AC2: When remote user changes cursor location, display the change in animation.

Provide an overall solution following the guidance mentioned above. Hint, keep all awareness information in a Konva layer, and an awareness info component to render cursor, and name. Don't generate code. Describe the solution, and breaking the solution down as a task list based on the guidance mentioned above. And we will refer this task list as our master plan.

There's a lot going on with this prompt, so he highlighted a few points.

He's using a generic application example in here: one thing to be wary of when interacting with ChatGPT and the like is that we should never put anything that may be confidential into the prompt, as that would be a security risk. Business rules and any code from a real project must not enter the interaction with ChatGPT.

Most of the prompt is setting out the design guidelines that he wants ChatGPT's generated code to follow. He refined this by putting prompts into ChatGPT, looking at the result, and crafting the prompt to make it generate in the style he wanted. Once he has the context working, he can paste it into every session he has with ChatGPT, and share it with the rest of the team.

Xu Hao begins his session by prompting the ChatGPT LLM with context as well as the instruction to generate a plan.

Even though the final goal of this session is to generate code and its tests, at this stage he specifically said "Don't generate code". At this point he wants ChatGPT to outline its approach before we look to any code generation. This is a prompt technique called Generated Knowledge.

A common way of interacting with an LLM is to give it a problem and ask it to generate some final product, whether that be prose or (in this case) code. With Generated Knowledge we split our interaction into separate steps: first ask the LLM to generate some useful information about the problem, and then we feed that information back into the LLM to generate the final product.
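The two-step shape of Generated Knowledge can be sketched in TypeScript. This is a minimal illustration, not Xu Hao's tooling: the `Ask` type, the function names, and the exact prompt wording are all assumptions made for the example, and `ask` stands in for whatever client actually talks to the model.

```typescript
// Hypothetical stand-in for whatever client sends a prompt to the LLM and returns its reply.
type Ask = (prompt: string) => Promise<string>;

// Step 1 prompt: request a plan only (the generated knowledge), never code.
function planPrompt(context: string, requirement: string): string {
  return [
    context,
    requirement,
    "Don't generate code. Describe the solution as a numbered task list.",
  ].join("\n\n");
}

// Step 2 prompt: feed the plan back in and request the final product for one task.
function codePrompt(context: string, plan: string, taskNumber: number): string {
  return [
    context,
    `Master plan:\n${plan}`,
    `Provide tests and implementation for task ${taskNumber}. Test first.`,
  ].join("\n\n");
}

// Runs both steps in order; the second call depends on the output of the first.
async function generatedKnowledge(
  ask: Ask,
  context: string,
  requirement: string,
  taskNumber: number,
): Promise<string> {
  const plan = await ask(planPrompt(context, requirement));
  return ask(codePrompt(context, plan, taskNumber));
}
```

Keeping the prompt builders as pure functions means the wording can be reviewed and revised without calling the model, which matches the way the context is refined over several sessions.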

The most interesting part of the prompt is the "implementation strategy" section, which combines two styles of prompt:

- Instruction prompting provides instructions for the LLM to follow
- Chain of Thought prompting encourages the LLM to explain its reasoning.

In the Implementation Strategy section, Xu Hao described the desired architecture pattern as an expected "Chain of Thought instructions" for ChatGPT to follow. Then he instructed ChatGPT to build a task list (the generated knowledge) based on this chain of thought.

Solution:

To implement the awareness layer feature, we can follow the guidelines mentioned in the problem statement and use the existing tech stack.

Task List:

1. Create a new component named "AwarenessInfo" to render cursor and name for remote users.
2. Create a new Konva layer to hold all the "AwarenessInfo" components.
3. Add the Konva layer to the main whiteboard component.
4. Create a new Redux slice to store the awareness information for remote users.
5. Create an encapsulated view model interface for the awareness Redux slice.
6. Implement the "AwarenessInfo" component to retrieve and display the awareness information for each remote user from the view model.
7. Implement a method in the encapsulated view model interface to update the awareness information for each remote user.
8. When a remote user changes the cursor location, dispatch the update method in the encapsulated view model interface to update the corresponding awareness information.
9. In the "AwarenessInfo" component, use the new location to animate the cursor change.
10. Write tests for the new "AwarenessInfo" component, including data-driven tests to ensure it properly displays the awareness information for all remote users.
11. Write tests for the encapsulated view model interface to ensure it properly updates the awareness information for remote users.
12. Write end-to-end Cypress tests to ensure the awareness layer is properly displayed and updated when remote users change their cursor location.

By following these tasks, we can implement the awareness layer feature in the online whiteboard system.

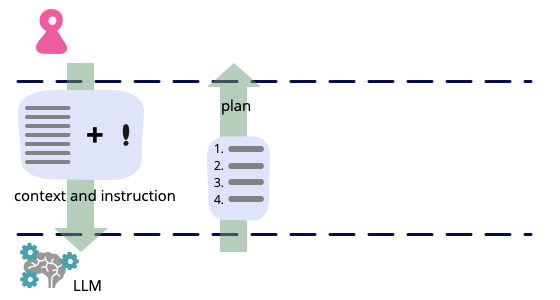

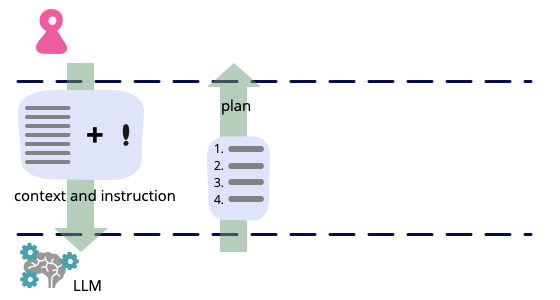

The response from ChatGPT is non-deterministic, so this result is just one run. If you (or I) put this prompt in again, we'll get a different response.

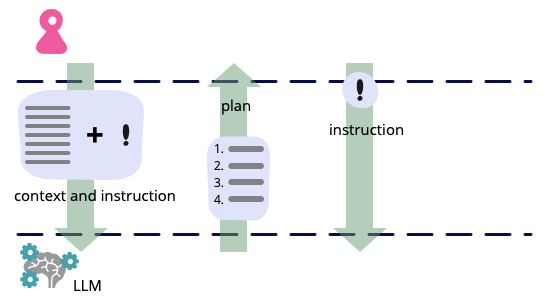

The key element of the response is an ordered task list, with numbers for each step. We can use these numbers to refer to these tasks for the rest of the session.

The LLM responds with a plan.

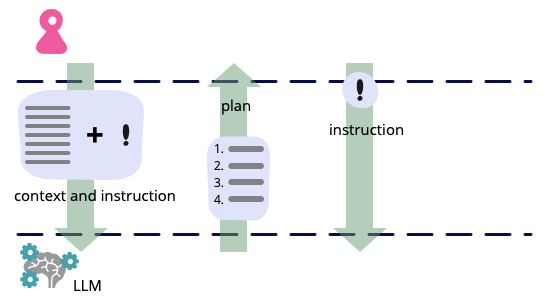

Xu Hao can now look at the master plan and see if it makes sense. If there are problems with it, he can ask ChatGPT to re-write some of the tasks or the entire master plan by providing more information.

For example, the current master plan was not grouped by different types of components. One possible prompt might be:

Rewrite the master plan, and group tasks as model, view model and view.

This could be useful to verify the alignment between the architecture vision and the master plan.

These tweaks could be added to the initial prompt to get an overall better response. For this particular prompt, the following instruction was not in the initial prompt, but was added in a later iteration:

4. Don't dispatch action directly to change the states of shared view model, use an encapsulated view model interface instead. In the interface, each redux action is mapped to a method. Tested in vitest.

Once the master plan looks reasonable, Xu Hao asks to regenerate it with names for the program elements.

Rewrite the master plan, this time with detailed component names, methods, and props

Sure, here's a revised master plan with detailed component names, methods, and props:

Task List:

1. Create a new component named "AwarenessInfo" to render cursor and name for remote users. This component will receive props such as user name, cursor position, and online status.
2. Create a new Konva layer named "AwarenessLayer" to hold all the "AwarenessInfo" components.
3. Add the "AwarenessLayer" to the main whiteboard component.
4. Create a new Redux slice named "awareness" to store the awareness information for remote users.
5. Create an encapsulated view model interface named "AwarenessViewModel" for the "awareness" Redux slice. This interface will contain a method named "updateUserAwarenessInfo" to update the awareness information for each remote user.
6. Implement the "AwarenessInfo" component to retrieve and display the awareness information for each remote user from the view model. The component will use the "useSelector" hook to retrieve the relevant information.
7. Implement the "updateUserAwarenessInfo" method in the "AwarenessViewModel" interface to dispatch a Redux action to update the awareness information for each remote user. This method will be called by the "MainWhiteboard" component.
8. When a remote user changes the cursor location, dispatch the "updateUserAwarenessInfo" method in the "AwarenessViewModel" interface to update the corresponding awareness information.
9. In the "AwarenessInfo" component, use the new location to animate the cursor change. This can be accomplished using the Konva "Tween" animation.
10. Write tests for the new "AwarenessInfo" component, including data-driven tests to ensure it properly displays the awareness information for all remote users. The tests will be written using the React Testing Library.
11. Write tests for the encapsulated view model interface to ensure it properly updates the awareness information for remote users. These tests will use the Vitest framework.
12. Write end-to-end Cypress tests to ensure the awareness layer is properly displayed and updated when remote users change their cursor location. The tests will simulate user interactions with the whiteboard and verify the state of the "AwarenessLayer" and "AwarenessInfo" components.

At this point, Xu Hao is ready to ask ChatGPT to provide code for one of the steps.

provide me example tests and implementation for the awareness slice and AwarenessViewModel. Test first.

Sure, let's start with writing tests for the awareness slice and AwarenessViewModel:

Test for Awareness Slice:

    describe('awareness slice', () => {
      let store: MockStore;
      beforeEach(() => {
        store = configureMockStore()(initialState);
      });

      it('should set the cursor location', () => {
        store.dispatch(setCursorLocation({ x: 100, y: 200 }));
        const state = store.getState().awareness;
        expect(state.cursorLocation).toEqual({ x: 100, y: 200 });
      });

      it('should set the name', () => {
        store.dispatch(setName('John Doe'));
        const state = store.getState().awareness;
        expect(state.name).toBe('John Doe');
      });

      it('should set the online status', () => {
        store.dispatch(setOnline(true));
        const state = store.getState().awareness;
        expect(state.isOnline).toBe(true);
      });
    });

Test for AwarenessViewModel:

    describe('AwarenessViewModel', () => {
      let store: MockStore;
      let awarenessViewModel: AwarenessViewModel;
      beforeEach(() => {
        store = configureMockStore()(initialState);
        awarenessViewModel = new AwarenessViewModel(store);
      });

      it('should set the cursor location', () => {
        awarenessViewModel.setCursorLocation({ x: 100, y: 200 });
        const state = store.getState().awareness;
        expect(state.cursorLocation).toEqual({ x: 100, y: 200 });
      });

      it('should set the name', () => {
        awarenessViewModel.setName('John Doe');
        const state = store.getState().awareness;
        expect(state.name).toBe('John Doe');
      });

      it('should set the online status', () => {
        awarenessViewModel.setOnline(true);
        const state = store.getState().awareness;
        expect(state.isOnline).toBe(true);
      });
    });

Now, let's implement the Awareness slice and AwarenessViewModel:

Awareness slice:

    interface AwarenessState {
      cursorLocation: { x: number; y: number };
      name: string;
      isOnline: boolean;
    }

    const initialState: AwarenessState = {
      cursorLocation: { x: 0, y: 0 },
      name: '',
      isOnline: false,
    };

    const awarenessSlice = createSlice({
      name: 'awareness',
      initialState,
      reducers: {
        setCursorLocation: (state, action: PayloadAction<{ x: number; y: number }>) => {
          state.cursorLocation = action.payload;
        },
        setName: (state, action: PayloadAction) => {
          state.name = action.payload;
        },
        setOnline: (state, action: PayloadAction) => {
          state.isOnline = action.payload;
        },
      },
    });

    export const { setCursorLocation, setName, setOnline } = awarenessSlice.actions;

    export default awarenessSlice.reducer;

AwarenessViewModel:

    class AwarenessViewModel {
      private store: Store;

      constructor(store: Store) {
        this.store = store;
      }

      setCursorLocation(cursorLocation: { x: number; y: number }) {
        this.store.dispatch(setCursorLocation(cursorLocation));
      }

      setName(name: string) {
        this.store.dispatch(setName(name));
      }

      setOnline(isOnline: boolean) {
        this.store.dispatch(setOnline(isOnline));
      }
    }

Note: In this implementation, I assumed that the AwarenessViewModel is used to set the values in the store. If it is also used to retrieve the values from the store, we can add corresponding methods to the AwarenessViewModel class

(I've removed the styling from ChatGPT's response.)

Overall this code is neither bad nor perfect. It won't run as it stands. It's missing a configureMockStore and assumes it uses redux-mock-store, which isn't part of the tech stack. Xu Hao can fix these problems by asking the LLM to progressively tweak the code through a sequence of rewrites. Here's one to get rid of redux-mock-store.

rewrite the tests without redux-mock-store

Sure, here's an example implementation of the tests for the AwarenessViewModel and the awareness slice without using redux-mock-store:

    import { createAwarenessSlice, AwarenessState, updateAwareness } from './awarenessSlice';
    import { AwarenessViewModel } from './AwarenessViewModel';

    describe('AwarenessViewModel', () => {
      const mockDispatch = jest.fn();
      const initialState: AwarenessState = {
        users: {
          'user1': {
            name: 'User 1',
            cursorPosition: { x: 0, y: 0 },
            online: true,
          },
          'user2': {
            name: 'User 2',
            cursorPosition: { x: 100, y: 100 },
            online: true,
          },
        },
      };
      let viewModel: AwarenessViewModel;

      beforeEach(() => {
        viewModel = new AwarenessViewModel(initialState, mockDispatch);
      });

      describe('getUserNames', () => {
        it('returns the list of user names', () => {
          const names = viewModel.getUserNames();
          expect(names).toEqual(['User 1', 'User 2']);
        });
      });

      describe('getCursorPosition', () => {
        it('returns the cursor position for a given user ID', () => {
          const cursorPosition = viewModel.getCursorPosition('user1');
          expect(cursorPosition).toEqual({ x: 0, y: 0 });
        });

        it('returns undefined for an unknown user ID', () => {
          const cursorPosition = viewModel.getCursorPosition('unknown');
          expect(cursorPosition).toBeUndefined();
        });
      });

      describe('updateCursorPosition', () => {
        it('dispatches the updateAwareness action with the new cursor position', () => {
          viewModel.updateCursorPosition('user1', { x: 50, y: 50 });
          expect(mockDispatch).toHaveBeenCalledWith(updateAwareness({
            user: 'user1',
            cursorPosition: { x: 50, y: 50 },
          }));
        });
      });
    });

And here's an example implementation of the awarenessSlice without using redux-mock-store:

    import { createSlice, PayloadAction } from '@reduxjs/toolkit';

    export type CursorPosition = { x: number, y: number };

    export type UserAwareness = {
      name: string;
      cursorPosition: CursorPosition;
      online: boolean;
    };

    export type AwarenessState = {
      users: Record<string, UserAwareness>;
    };

    const initialState: AwarenessState = {
      users: {},
    };

    const awarenessSlice = createSlice({
      name: 'awareness',
      initialState,
      reducers: {
        updateAwareness: (state, action: PayloadAction<{ user: string, cursorPosition: CursorPosition }>) => {
          const { user, cursorPosition } = action.payload;
          state.users[user].cursorPosition = cursorPosition;
        },
        setOnline: (state, action: PayloadAction<{ user: string, online: boolean }>) => {
          const { user, online } = action.payload;
          state.users[user].online = online;
        },
      },
    });

    export const { updateAwareness, setOnline } = awarenessSlice.actions;

    export const awarenessReducer = awarenessSlice.reducer;

Note that since we're not using redux-mock-store, we need to pass a mock dispatch function to the view model constructor, and in the test for updateCursorPosition we need to check that the correct action was dispatched using jest.fn().

Further rewrites can fit in more adjustments. For example, asking it to "Use `configureTestingStore('awareness', initialState)` to initialize the test store" can get it to fit in with the team's test idioms. Then, with some path adjustments and a small amount of glue for the reducers, the code can run just fine.

Once this is working, Xu Hao can repeat the process for the rest of the tasks in the master plan.

One of the problems working with ChatGPT in exercises like this is the limited context window (also called the "token limit"). Once it receives enough words (or more strictly tokens) in the conversation, it starts forgetting the earliest material, an effect that makes it curiously forgetful. This is not noticeable for short chats, but it does matter when working with large amounts of context as in this exercise. ChatGPT has been steadily increasing its context window: GPT-4 has a token limit of 8,192, with another variant increased to 32,768.
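One simple way to picture the forgetting is as a sliding window over the conversation. The sketch below is an illustration under loud assumptions, not a description of how ChatGPT manages context internally: it counts one token per whitespace-separated word (real tokenizers differ), and `Message`, `roughTokenCount` and `visibleWindow` are hypothetical names.

```typescript
type Message = { role: "user" | "assistant"; text: string };

// ASSUMPTION: one token per whitespace-separated word. Real tokenizers split text differently.
function roughTokenCount(text: string): number {
  const trimmed = text.trim();
  return trimmed === "" ? 0 : trimmed.split(/\s+/).length;
}

// Walks the history from newest to oldest and keeps messages until the budget is spent,
// so the earliest material is the first to drop out of view.
function visibleWindow(history: Message[], tokenLimit: number): Message[] {
  const kept: Message[] = [];
  let used = 0;
  for (const message of [...history].reverse()) {
    const cost = roughTokenCount(message.text);
    if (used + cost > tokenLimit) break;
    kept.unshift(message); // restore chronological order
    used += cost;
  }
  return kept;
}
```

In this picture a long opening prompt, such as the architecture guidance above, is exactly the material that falls out first as the conversation grows.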

Xu Hao finds the token limit has three main impacts on his work. Firstly, ChatGPT may stop generating content: this is fairly easy to fix, as we can prompt it with something like "you are not finished" or "go on". Secondly, if the overall prompt is too big, then ChatGPT will simply return a fatal error and we have to start a new conversation.

The third is the more tricky problem, where ChatGPT starts forgetting and losing context. When this happens we need to reset the context. This is where the approach of getting a master plan and breaking down the task into separate elements helps. We can start a new conversation with the original strategy and the master plan, and ask it to generate the code for a different item on the plan.

Xu Hao found that the chain of thought in the context was critical to making the generated code fit together despite being generated in separate sessions. On occasion he needed to add a hint to the prompt to generate cohesive code, but found he got better results from revising the chain of thought instead.

My take away from this discussion was that using chain of thought and generated knowledge prompting approaches can be a significantly useful tool for programming. In particular it shows that to use LLMs well, we need to learn how to construct prompts to get the best results. This experience suggests that it's useful to interact with the LLM like a junior partner, starting them with architectural guidelines, asking them to show their reasoning, and tweaking their outputs as we go.

Xu Hao makes use of chain of thought and common data prompting with ChatGPT when writing self-testing code

My account of an inner chat with Xu Hao, the place he reveals how he

drives ChatGPT to supply helpful self-tested code. His preliminary immediate primes

the LLM with an implementation technique (chain of thought prompting). His

immediate additionally asks for an implementation plan relatively than code (common

data prompting). As soon as he has the plan he makes use of it to refine the

implementation and generate helpful sections of code.

Just lately I watched a captivating name on Zoom. Xu Hao, Thoughtworks’s

Head of Know-how in China, defined his explorations in utilizing ChatGPT to

assist construct Self Testing Code. He

went by the form of interplay that works for him.

He begins with a immediate that units the context for the appliance and the way

he needs the code to be structured.

The present system is a web based whiteboard system. Tech stack:

typescript, react, redux, konvajs and react-konva. And vitest, react

testing library for mannequin, view mannequin and associated hooks, cypress element

assessments for view.

All codes needs to be written within the tech stack talked about above.

Necessities needs to be carried out as react parts within the MVVM

structure sample.

There are 2 sorts

of view mannequin within the system.

Shared view mannequin. View mannequin that represents states shared amongst

native and distant customers.Native view mannequin. View mannequin that represents states solely relevant

to native person

Listed below are the frequent implementation technique:

Shared view mannequin is carried out as Redux retailer slice. Examined in

vitest.Native view mannequin is carried out as React element props or states(by

useState hook), except for international native view mannequin, which can be

carried out as Redux retailer slice. Examined in vitest.Hooks are used as the foremost view helpers to retrieve knowledge from shared

view mannequin. For many the case, it’ll use ‘createSelector’ and

‘useSelector’ for memorization. Examined in vitest and react testing

library.Don’t dispatch motion immediately to vary the states of shared view

mannequin, use an encapsulated view mannequin interface as an alternative. Within the interface,

every redux motion is mapped to a way. Examined in vitest.View is include konva shapes, and carried out as react element through

react-konva. Examined in cypress element assessments

Listed below are sure patterns needs to be adopted when implement and take a look at

the element

When write take a look at, use

describeas an alternative oftake a look atInformation-driven assessments are most popular.

When take a look at the view element, faux view mannequin through the view mannequin

interface

Consciousness Layer

Requirement:

Show different customers’ consciousness information(cursor, title and on-line

info) on the whiteboard.

AC1: Don’t show native person

AC2: When distant person adjustments cursor location, show the change in

animation.

Present an total answer following the steerage talked about above.

Trace, hold all consciousness info in a Konva layer, and an consciousness

information element to render cursor, and title. Don’t generate code. Describe

the answer, and breaking the answer down as a activity listing primarily based on the

steerage talked about above. And we are going to refer this activity listing as our grasp

plan.

There’s loads occurring with this immediate, so he highlighted a couple of

factors.

He is utilizing a generic utility instance in right here: one factor to be

cautious of when interacting with ChatGPT and the like is that we should always by no means

put something which may be confidential into the immediate, as that will be a

safety danger. Enterprise guidelines, any code from an actual challenge – all these should

not enter the interplay with ChatGPT.

Many of the immediate is setting out the design tips that he needs

ChatGPT’s generated code to comply with. He refined this by placing prompts into

ChatGPT, trying on the outcome, and crafting the immediate to make it generate

within the fashion he wished. As soon as he has context working, he can paste it into

each session he has with ChatGPT, and share it with the remainder of the

group.

Xu Hao begins his session by prompting the

ChatGPT LLM with context in addition to the instruction to generate a plan

Although the ultimate aim of this session is to generate code and its

assessments, at this stage he particularly mentioned Don’t generate code.

At this level he needs ChatGPT to stipulate its strategy earlier than we glance to any

code technology. It is a immediate method known as Generated Data.

A typical manner of interacting with an LLM is to provide it an issue and ask

it to generate some closing product, whether or not than be prose or (on this case)

code. With Generated Data we cut up our interplay into separate steps:

first ask the LLM to generate some helpful details about the issue, and

then we feed that info again into the LLM to generate the ultimate

product.

Essentially the most attention-grabbing a part of the immediate is the “implementation technique”

part which mixes two kinds of immediate:

- Instruction prompting supplies directions for the LLM to comply with

- Chain of Thought prompting encourages the LLM to clarify its

reasoning.

Within the Implementation Technique part, Xu Hao described the specified

structure sample as an anticipated “Chain of Thought directions” for

ChatGPT to comply with. Then he instructed ChatGPT to construct a activity listing (the

generated data) primarily based on this chain of thought.

Answer:

To implement the attention layer function, we will comply with the rules talked about in the issue assertion and use the prevailing tech stack.

Process Listing:

Create a brand new element named “AwarenessInfo” to render cursor and title for distant customers.

Create a brand new Konva layer to carry all of the “AwarenessInfo” parts.

Add the Konva layer to the principle whiteboard element.

Create a brand new Redux slice to retailer the attention info for distant customers.

Create an encapsulated view mannequin interface for the attention Redux slice.

Implement the “AwarenessInfo” element to retrieve and show the attention info for every distant person from the view mannequin.

Implement a way within the encapsulated view mannequin interface to replace the attention info for every distant person.

When a distant person adjustments the cursor location, dispatch the replace technique within the encapsulated view mannequin interface to replace the corresponding consciousness info.

Within the “AwarenessInfo” element, use the brand new location to animate the cursor change.

Write assessments for the brand new “AwarenessInfo” element, together with data-driven assessments to make sure it correctly shows the attention info for all distant customers.

Write assessments for the encapsulated view mannequin interface to make sure it correctly updates the attention info for distant customers.

Write end-to-end Cypress assessments to make sure the attention layer is correctly displayed and up to date when distant customers change their cursor location.

By following these duties, we will implement the attention layer function within the on-line whiteboard system.

The response from ChatGPT is non-deterministic, so this result’s simply

one run. In case you (or I) put this immediate in once more, we’ll get a unique response.

The important thing aspect of the response is an ordered activity listing, with numbers for

every step. We will use these numbers to refer to those duties for the remainder of

the session.

The LLM responds with a plan.

Xu Hao can now have a look at the grasp plan and see if it is sensible. If

there are issues with it, he can ask ChatGPT to re-write a number of the duties

or all the grasp plan by offering extra info.

For instance, the present grasp plan was not grouped by differing kinds

of parts. One potential immediate could be:

Rewrite the grasp plan, and group duties as mannequin, view mannequin and look at.

This might be helpful to confirm the alignment between the structure

imaginative and prescient and grasp plan.

These tweaks might be added to the preliminary immediate to get an total

higher response.For this explicit immediate, the next instruction was

not within the preliminary immediate, however have been added in later iteration:

4. Don’t dispatch motion immediately to vary the states of shared view

mannequin, use an encapsulated view mannequin interface as an alternative. Within the interface,

every redux motion is mapped to a way. Examined in vitest.As soon as the grasp plan appears cheap, Xu Hao asks to regenerate it with

names for this system components.

Rewrite the grasp plan, this time with detailed element names,

strategies, and props

Certain, here is a revised grasp plan with detailed element names, strategies, and props:

Task List:

Create a new component named “AwarenessInfo” to render cursor and name for remote users. This component will receive props such as user name, cursor position, and online status.

Create a new Konva layer named “AwarenessLayer” to hold all the “AwarenessInfo” components.

Add the “AwarenessLayer” to the main whiteboard component.

Create a new Redux slice named “awareness” to store the awareness information for remote users.

Create an encapsulated view model interface named “AwarenessViewModel” for the “awareness” Redux slice. This interface will contain a method named “updateUserAwarenessInfo” to update the awareness information for each remote user.

Implement the “AwarenessInfo” component to retrieve and display the awareness information for each remote user from the view model. The component will use the “useSelector” hook to retrieve the relevant information.

Implement the “updateUserAwarenessInfo” method in the “AwarenessViewModel” interface to dispatch a Redux action to update the awareness information for each remote user. This method will be called by the “MainWhiteboard” component.

When a remote user changes the cursor location, dispatch the “updateUserAwarenessInfo” method in the “AwarenessViewModel” interface to update the corresponding awareness information.

In the “AwarenessInfo” component, use the new location to animate the cursor change. This can be accomplished using the Konva “Tween” animation.

Write tests for the new “AwarenessInfo” component, including data-driven tests to ensure it properly displays the awareness information for all remote users. The tests will be written using the React Testing Library.

Write tests for the encapsulated view model interface to ensure it properly updates the awareness information for remote users. These tests will use the Vitest framework.

Write end-to-end Cypress tests to ensure the awareness layer is properly displayed and updated when remote users change their cursor location. The tests will simulate user interactions with the whiteboard and verify the state of the “AwarenessLayer” and “AwarenessInfo” components.

At this point, Xu Hao is ready to ask ChatGPT to provide code for one of the steps.

provide me example tests and implementation for the awareness slice and AwarenessViewModel. Test first.

Sure, let's start with writing tests for the awareness slice and AwarenessViewModel:

Test for Awareness Slice:

describe('awareness slice', () => {

let store: MockStore;

beforeEach(() => {

store = configureMockStore()(initialState);

});

it('should set the cursor location', () => {

store.dispatch(setCursorLocation({ x: 100, y: 200 }));

const state = store.getState().awareness;

expect(state.cursorLocation).toEqual({ x: 100, y: 200 });

});

it('should set the name', () => {

store.dispatch(setName('John Doe'));

const state = store.getState().awareness;

expect(state.name).toBe('John Doe');

});

it('should set the online status', () => {

store.dispatch(setOnline(true));

const state = store.getState().awareness;

expect(state.isOnline).toBe(true);

});

});

Test for AwarenessViewModel:

describe('AwarenessViewModel', () => {

let store: MockStore;

let awarenessViewModel: AwarenessViewModel;

beforeEach(() => {

store = configureMockStore()(initialState);

awarenessViewModel = new AwarenessViewModel(store);

});

it('should set the cursor location', () => {

awarenessViewModel.setCursorLocation({ x: 100, y: 200 });

const state = store.getState().awareness;

expect(state.cursorLocation).toEqual({ x: 100, y: 200 });

});

it('should set the name', () => {

awarenessViewModel.setName('John Doe');

const state = store.getState().awareness;

expect(state.name).toBe('John Doe');

});

it('should set the online status', () => {

awarenessViewModel.setOnline(true);

const state = store.getState().awareness;

expect(state.isOnline).toBe(true);

});

});

Now, let's implement the Awareness slice and AwarenessViewModel:

Awareness slice:

interface AwarenessState {

cursorLocation: { x: number; y: number };

name: string;

isOnline: boolean;

}

const initialState: AwarenessState = {

cursorLocation: { x: 0, y: 0 },

name: '',

isOnline: false,

};

const awarenessSlice = createSlice({

name: 'awareness',

initialState,

reducers: {

setCursorLocation: (state, action: PayloadAction<{ x: number; y: number }>) => {

state.cursorLocation = action.payload;

},

setName: (state, action: PayloadAction<string>) => {

state.name = action.payload;

},

setOnline: (state, action: PayloadAction<boolean>) => {

state.isOnline = action.payload;

},

},

});

export const { setCursorLocation, setName, setOnline } = awarenessSlice.actions;

export default awarenessSlice.reducer;

AwarenessViewModel:

class AwarenessViewModel {

private store: Store;

constructor(store: Store) {

this.store = store;

}

setCursorLocation(cursorLocation: { x: number; y: number }) {

this.store.dispatch(setCursorLocation(cursorLocation));

}

setName(name: string) {

this.store.dispatch(setName(name));

}

setOnline(isOnline: boolean) {

this.store.dispatch(setOnline(isOnline));

}

}

Note: In this implementation, I assumed that the AwarenessViewModel is used to set the values in the store. If it is also used to retrieve the values from the store, we can add corresponding methods to the AwarenessViewModel class

(I’ve removed the styling from ChatGPT’s response.)

Overall this code is neither bad nor perfect. It won't run as it stands. It's missing a configureMockStore and assumes it uses redux-mock-store, which isn't part of the tech stack. Xu Hao can fix these problems by asking the LLM to progressively tweak the code through a sequence of rewrites. Here's one to get rid of redux-mock-store.

rewrite the tests without redux-mock-store

Sure, here's an example implementation of the tests for the AwarenessViewModel and the awareness slice without using redux-mock-store:

import { createAwarenessSlice, AwarenessState, updateAwareness } from './awarenessSlice';

import { AwarenessViewModel } from './AwarenessViewModel';

describe('AwarenessViewModel', () => {

const mockDispatch = jest.fn();

const initialState: AwarenessState = {

users: {

'user1': {

name: 'User 1',

cursorPosition: { x: 0, y: 0 },

online: true,

},

'user2': {

name: 'User 2',

cursorPosition: { x: 100, y: 100 },

online: true,

},

},

};

let viewModel: AwarenessViewModel;

beforeEach(() => {

viewModel = new AwarenessViewModel(initialState, mockDispatch);

});

describe('getUserNames', () => {

it('returns the list of user names', () => {

const names = viewModel.getUserNames();

expect(names).toEqual(['User 1', 'User 2']);

});

});

describe('getCursorPosition', () => {

it('returns the cursor position for a given user ID', () => {

const cursorPosition = viewModel.getCursorPosition('user1');

expect(cursorPosition).toEqual({ x: 0, y: 0 });

});

it('returns undefined for an unknown user ID', () => {

const cursorPosition = viewModel.getCursorPosition('unknown');

expect(cursorPosition).toBeUndefined();

});

});

describe('updateCursorPosition', () => {

it('dispatches the updateAwareness action with the new cursor position', () => {

viewModel.updateCursorPosition('user1', { x: 50, y: 50 });

expect(mockDispatch).toHaveBeenCalledWith(updateAwareness({

user: 'user1',

cursorPosition: { x: 50, y: 50 },

}));

});

});

});

And here's an example implementation of the awarenessSlice without using redux-mock-store:

import { createSlice, PayloadAction } from '@reduxjs/toolkit';

export type CursorPosition = { x: number, y: number };

export type UserAwareness = {

name: string;

cursorPosition: CursorPosition;

online: boolean;

};

export type AwarenessState = {

users: Record<string, UserAwareness>;

};

const initialState: AwarenessState = {

users: {},

};

const awarenessSlice = createSlice({

name: 'awareness',

initialState,

reducers: {

updateAwareness: (state, action: PayloadAction<{ user: string, cursorPosition: CursorPosition }>) => {

const { user, cursorPosition } = action.payload;

state.users[user].cursorPosition = cursorPosition;

},

setOnline: (state, action: PayloadAction<{ user: string, online: boolean }>) => {

const { user, online } = action.payload;

state.users[user].online = online;

},

},

});

export const { updateAwareness, setOnline } = awarenessSlice.actions;

export const awarenessReducer = awarenessSlice.reducer;

Note that since we’re not using redux-mock-store, we need to pass a mock dispatch function to the view model constructor, and in the test for updateCursorPosition we need to check that the correct action was dispatched using jest.fn().
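ChatGPT's rewritten tests exercise a view model that takes the state and a dispatch function, but the response never shows that class. As a rough illustration only, here is a minimal sketch of what such a class might look like. Everything in it is an assumption inferred from the tests: the class body, the plain-object `updateAwareness` stand-in (used here so the sketch needs no Redux import), and the `Dispatch` type are hypothetical, not what ChatGPT or Xu Hao produced.

```typescript
// Hypothetical sketch inferred from the tests; not the code from the session.
type CursorPosition = { x: number; y: number };

type UserAwareness = {
  name: string;
  cursorPosition: CursorPosition;
  online: boolean;
};

type AwarenessState = { users: { [userId: string]: UserAwareness } };

// Plain action object standing in for the Redux Toolkit action creator.
type UpdateAwarenessAction = {
  type: 'awareness/updateAwareness';
  payload: { user: string; cursorPosition: CursorPosition };
};

function updateAwareness(
  payload: UpdateAwarenessAction['payload'],
): UpdateAwarenessAction {
  return { type: 'awareness/updateAwareness', payload };
}

type Dispatch = (action: UpdateAwarenessAction) => void;

class AwarenessViewModel {
  private state: AwarenessState;
  private dispatch: Dispatch;

  constructor(state: AwarenessState, dispatch: Dispatch) {
    this.state = state;
    this.dispatch = dispatch;
  }

  // Names of all users currently held in the awareness state.
  getUserNames(): string[] {
    const users = this.state.users;
    return Object.keys(users).map((userId) => users[userId].name);
  }

  // Returns undefined when the user ID is not in the state.
  getCursorPosition(userId: string): CursorPosition | undefined {
    return this.state.users[userId]?.cursorPosition;
  }

  // Does not mutate state directly; it only dispatches an action.
  updateCursorPosition(userId: string, cursorPosition: CursorPosition): void {
    this.dispatch(updateAwareness({ user: userId, cursorPosition }));
  }
}
```

Because the dispatch function is injected, a test can pass any function that records its arguments, which is the role `jest.fn()` plays in ChatGPT's version.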

Further rewrites can fit in more adjustments. For example, asking it to

Use `configureTestingStore('awareness', initialState)` to initialize the test store

can get it to fit in with the team’s test idioms. Then, with some path adjustments and a small amount of glue for the reducers, the code can run just fine.
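The discussion did not show what `configureTestingStore` does internally, so the following is only a guess at the shape such a helper could take. It is a self-contained sketch with a hand-rolled store and no Redux dependency. The reducer registry, `registerSliceReducer`, and the `Action` and `Reducer` types are all hypothetical names I have introduced for illustration; the team's real helper may look quite different.

```typescript
// Hypothetical sketch of a team-specific test helper; not the real one.
type Action = { type: string; payload?: unknown };
type Reducer<S> = (state: S, action: Action) => S;

// Assumed "glue": a registry mapping slice names to their reducers.
const sliceReducers: { [sliceName: string]: Reducer<any> } = {};

function registerSliceReducer<S>(sliceName: string, reducer: Reducer<S>): void {
  sliceReducers[sliceName] = reducer;
}

// Builds a tiny store holding a single slice, preloaded with the given state.
function configureTestingStore<S>(sliceName: string, initialState: S) {
  const reducer = sliceReducers[sliceName];
  if (!reducer) {
    throw new Error(`No reducer registered for slice "${sliceName}"`);
  }
  let sliceState = initialState;
  return {
    getState: (): { [name: string]: S } => ({ [sliceName]: sliceState }),
    dispatch: (action: Action): void => {
      sliceState = reducer(sliceState, action);
    },
  };
}

// Example slice state and reducer, simplified to cursor positions only.
type CursorPosition = { x: number; y: number };
type AwarenessState = {
  users: { [userId: string]: { cursorPosition: CursorPosition } };
};

registerSliceReducer<AwarenessState>('awareness', (state, action) => {
  if (action.type !== 'awareness/updateAwareness') {
    return state;
  }
  const { user, cursorPosition } = action.payload as {
    user: string;
    cursorPosition: CursorPosition;
  };
  // Return a new state object rather than mutating the old one.
  return {
    users: { ...state.users, [user]: { ...state.users[user], cursorPosition } },
  };
});
```

A test would then call `configureTestingStore('awareness', someState)`, dispatch actions, and assert on `getState().awareness`, which keeps the test setup to a single line.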

Once this is working, Xu Hao can repeat the process for the rest of the tasks in the master plan.

One of the problems working with ChatGPT in exercises like this is the limited context window (also called “token limit”). Once it receives enough words (or more strictly tokens) in the conversation, it starts forgetting the earliest material, an effect that makes it curiously forgetful. That's not noticeable for short chats, but it does matter when working with large amounts of context as in this exercise. ChatGPT has been steadily increasing its context window: GPT-4 has a token limit of 8,192, with another variant increased to 32,768.
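To make the forgetting effect concrete, here is a small sketch of a sliding window over a conversation. It is an illustration under stated assumptions, not a description of how ChatGPT manages its context: the `Message` type and both function names are hypothetical, and counting whitespace-separated words is only a crude stand-in for a real tokenizer.

```typescript
// Illustrative sketch only; the real context handling is not described here.
type Message = { role: 'user' | 'assistant'; content: string };

// Crude stand-in for a tokenizer: counts whitespace-separated words.
function estimateTokens(text: string): number {
  const trimmed = text.trim();
  return trimmed === '' ? 0 : trimmed.split(/\s+/).length;
}

// Keeps the most recent messages that fit in the limit, dropping the earliest first.
function fitToContextWindow(messages: Message[], tokenLimit: number): Message[] {
  const kept: Message[] = [];
  let used = 0;
  // Walk backwards so the newest messages are considered first.
  for (let i = messages.length - 1; i >= 0; i--) {
    const cost = estimateTokens(messages[i].content);
    if (used + cost > tokenLimit) {
      break;
    }
    kept.unshift(messages[i]);
    used += cost;
  }
  return kept;
}
```

With a scheme like this, the opening prompt that sets out the architecture is the first thing to fall out of the window, which is why a long session can drift away from the original strategy.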

Xu Hao finds the token limit has three main impacts on his work. Firstly, ChatGPT may stop generating content: this is fairly easy to fix, as we can prompt it with something like “you are not finished” or “go on”. Secondly, if the overall prompt is too big, then ChatGPT will simply return a fatal error and we have to start a new conversation.

The third is the more tricky problem, where ChatGPT starts forgetting and losing context. When this happens we need to reset the context. This is where the approach of getting a master plan and breaking down the task into separate elements helps. We can start a new conversation with the original strategy and the master plan, and ask it to generate the code for a different item on the plan.
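One way to picture this reset is as a function that assembles the opening prompt for a fresh conversation from the three pieces mentioned: the strategy, the master plan, and the item to work on. This is a minimal sketch, not Xu Hao's tooling; `buildFreshPrompt`, its parameters, and the exact wording of the final request are assumptions for illustration.

```typescript
// Hypothetical helper; the function name and prompt wording are assumptions.
function buildFreshPrompt(
  strategy: string,
  masterPlan: string[],
  taskIndex: number,
): string {
  if (taskIndex < 0 || taskIndex >= masterPlan.length) {
    throw new RangeError(`No task at index ${taskIndex}`);
  }
  // Number the plan so the request can refer to a step unambiguously.
  const numberedPlan = masterPlan
    .map((task, i) => `${i + 1}. ${task}`)
    .join('\n');
  return [
    strategy,
    'Master plan:',
    numberedPlan,
    `Provide example tests and implementation for step ${taskIndex + 1}. Test first.`,
  ].join('\n\n');
}
```

Each new conversation then starts from the same strategy and plan, and only the requested step changes.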

Xu Hao found that the chain of thought in the context was critical to making the generated code fit together despite being generated in separate sessions. On occasion he needed to add a hint to the prompt to generate cohesive code, but found he got better results from revising the chain of thought instead.

My takeaway from this discussion was that using chain of thought and generated knowledge prompting approaches can be a significantly useful tool for programming. In particular it shows that to use LLMs well, we need to learn how to construct prompts to get the best results. This experience suggests that it's useful to interact with the LLM like a junior partner, starting them with architectural guidelines, asking them to show their reasoning, and tweaking their outputs as we go.